Real-Time Log Indexing: Building Lightning-Fast Search Systems

Day 56: Module 2: Scalable Log Processing | Week 8: Distributed Log Search

🎯 What We're Building Today

High-Level Agenda:

Streaming Index Construction - Make each log searchable within 100 ms of its arrival

Memory-Efficient Segment Management - Balance performance against memory limits by flushing in-memory segments to disk before they grow too large

Multi-Segment Search Engine - Query across memory and disk segments with result deduplication

Real-Time Performance Dashboard - Monitor indexing metrics and search performance live

Production-Ready Architecture - Error handling, persistence, and horizontal scaling patterns
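The segment-management and multi-segment search items above can be sketched as follows. This is a minimal illustration, not the lesson's implementation: the `Segment` and `SegmentManager` classes, the `max_docs_in_memory` flush threshold, and the rule that the newest copy of a log ID wins are all assumptions. Flushed segments are kept in memory as sealed objects here, where a real system would persist them to disk.

```python
import re
from collections import defaultdict

TOKEN_RE = re.compile(r"[a-z0-9_]+")


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct alphanumeric terms."""
    return set(TOKEN_RE.findall(text.lower()))


class Segment:
    """An inverted index over one batch of logs; sealed once flushed."""

    def __init__(self) -> None:
        self.postings: defaultdict[str, set[str]] = defaultdict(set)  # term -> log ids
        self.docs: dict[str, str] = {}  # log id -> message
        self.sealed = False

    def add(self, log_id: str, message: str) -> None:
        if self.sealed:
            raise RuntimeError("flushed segments are immutable")
        old = self.docs.get(log_id)
        if old is not None:  # same id re-added: drop its stale postings first
            for term in tokenize(old):
                self.postings[term].discard(log_id)
        self.docs[log_id] = message
        for term in tokenize(message):
            self.postings[term].add(log_id)

    def search(self, terms: set[str]) -> dict[str, str]:
        """Return {log_id: message} for logs containing every term."""
        if not terms:
            return {}
        ids = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return {log_id: self.docs[log_id] for log_id in ids}


class SegmentManager:
    def __init__(self, max_docs_in_memory: int = 1000) -> None:
        self.max_docs = max_docs_in_memory  # hypothetical flush threshold
        self.memory = Segment()             # mutable, receives new writes
        self.flushed: list[Segment] = []    # oldest first; stand-in for disk

    def ingest(self, log_id: str, message: str) -> None:
        self.memory.add(log_id, message)
        if len(self.memory.docs) >= self.max_docs:
            self.flush()

    def flush(self) -> None:
        """Seal the memory segment (a real system would write it to disk)."""
        if not self.memory.docs:
            return
        self.memory.sealed = True
        self.flushed.append(self.memory)
        self.memory = Segment()

    def search(self, query: str) -> dict[str, str]:
        """Query all segments newest-first; newer copies of an id shadow older ones."""
        terms = tokenize(query)
        results: dict[str, str] = {}
        seen: set[str] = set()
        for segment in [self.memory, *reversed(self.flushed)]:
            for log_id, message in segment.search(terms).items():
                if log_id not in seen:
                    results[log_id] = message
            seen.update(segment.docs)  # ids in this segment hide older copies
        return results


if __name__ == "__main__":
    mgr = SegmentManager(max_docs_in_memory=2)
    mgr.ingest("a1", "ERROR disk full on node-7")
    mgr.ingest("a2", "INFO request served")                # hits threshold -> flush
    mgr.ingest("a1", "ERROR disk full on node-7 (retry)")  # re-delivered log
    print(mgr.search("disk full"))  # {'a1': 'ERROR disk full on node-7 (retry)'}
```

Searching newest-to-oldest and letting every ID in a newer segment hide older copies is what keeps a re-delivered or updated log from appearing twice, and it also prevents a stale version from matching a query the current version no longer satisfies.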

The Real-World Challenge

When Spotify processes millions of user interaction logs per second, it can't afford to wait hours for batch indexing: engineers debugging production issues need to search logs written moments ago. This requires streaming indexing, the ability to make data searchable as it arrives.
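A minimal sketch of streaming indexing, assuming a single-process, in-memory inverted index (the `StreamingIndex` class and its regex tokenizer are hypothetical, not taken from the lesson). Each log is tokenized and added to the posting lists inside `ingest`, so it is searchable as soon as that call returns, with no separate batch step.

```python
import re
from collections import defaultdict

TOKEN_RE = re.compile(r"[a-z0-9_]+")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return TOKEN_RE.findall(text.lower())


class StreamingIndex:
    """In-memory inverted index that is updated as each log arrives."""

    def __init__(self) -> None:
        self.postings: defaultdict[str, set[int]] = defaultdict(set)  # term -> log ids
        self.logs: list[str] = []  # log id == position in this list

    def ingest(self, message: str) -> int:
        """Index one log synchronously; it is searchable once this returns."""
        log_id = len(self.logs)
        self.logs.append(message)
        for term in tokenize(message):
            self.postings[term].add(log_id)
        return log_id

    def search(self, query: str) -> list[str]:
        """Return logs containing every query term, oldest first."""
        terms = tokenize(query)
        if not terms:
            return []
        ids = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return [self.logs[i] for i in sorted(ids)]


if __name__ == "__main__":
    index = StreamingIndex()
    index.ingest("ERROR payment-service timeout after 3000ms")
    print(index.search("payment timeout"))
    # ['ERROR payment-service timeout after 3000ms']
```

Because indexing happens on the write path, the cost of making a log searchable is paid per event rather than deferred to a batch job, which is the trade-off the rest of the lesson builds on.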

Netflix's recommendation engine faces the same challenge. User clicks, views, and ratings must become searchable within milliseconds to power real-time personalization. Traditional batch indexing makes new data searchable only after the next batch run completes, an unacceptable delay between data generation and operational insight.

Core Architecture: Streaming vs Batch

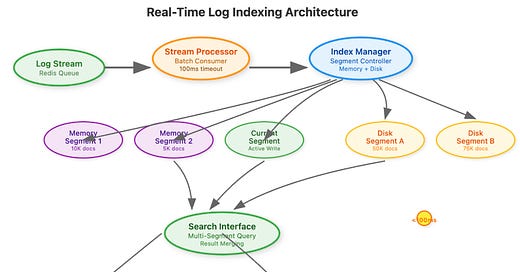

[Component Architecture Diagram]
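To put rough numbers on the streaming-vs-batch gap, here is a back-of-the-envelope model under stated assumptions: batch jobs start on a fixed schedule, pick up only logs that arrived before the job started, and take a fixed time to publish, while the streaming path adds a constant per-log indexing latency. The function names and the hourly interval, 5-minute job, and 50 ms streaming latency are illustrative values, not measurements from any real system.

```python
import math
from statistics import mean


def batch_visibility_delay(arrival_s: float, interval_s: float, job_s: float) -> float:
    """Seconds from arrival until a log is searchable in a batch pipeline.

    Assumes (hypothetically) that jobs start at t = interval_s, 2 * interval_s, ...,
    index only logs that arrived strictly before the start, and take job_s
    seconds to publish the new index.
    """
    next_run = (math.floor(arrival_s / interval_s) + 1) * interval_s
    return next_run + job_s - arrival_s


def streaming_visibility_delay(index_latency_s: float) -> float:
    """Seconds until searchable when each log is indexed on arrival."""
    return index_latency_s


if __name__ == "__main__":
    arrivals = [i * 60.0 for i in range(60)]  # one log per minute for an hour
    batch = [batch_visibility_delay(t, 3600.0, 300.0) for t in arrivals]
    print(f"batch:     mean {mean(batch):.1f} s, worst {max(batch):.1f} s")
    # batch:     mean 2130.0 s, worst 3900.0 s
    print(f"streaming: {streaming_visibility_delay(0.05):.2f} s")
    # streaming: 0.05 s
```

Under these assumptions an hourly batch pipeline leaves a log unsearchable for about 35 minutes on average and over an hour in the worst case, while the streaming path's delay is bounded by its per-log indexing cost.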