Day 90: Building Real-Time Webhook Notifications for Log Events

Module 4: Building a Complete Distributed Log Platform

Week 13: API and Service Layer

From the 254-Day Hands-On System Design Series

What You'll Build Today

By the end of this lesson, you'll have constructed a production-ready webhook notification system that transforms your log processing platform into a reactive ecosystem. Here's what we're building:

Core System Components:

Event-driven webhook dispatcher with smart filtering capabilities

Subscription management system allowing granular event selection

Reliable delivery mechanism with exponential backoff retry logic

Security layer implementing HMAC signature validation

Real-time monitoring dashboard showing delivery success rates and system health

Real-World Integration:

HTTP POST notifications to external services (Slack, PagerDuty, custom APIs)

Filter-based event routing (send only ERROR logs to incident management)

Automatic retry handling for failed deliveries

Dead letter queue for problematic webhooks requiring manual intervention

Core Concepts: Event-Driven Notifications

The Webhook Pattern in Distributed Systems

Webhooks solve the "polling vs pushing" dilemma that plagues distributed systems. Instead of external services constantly asking your system "anything new?" (which creates unnecessary load), your system proactively pushes relevant events when they occur. Because consumers no longer poll on a fixed schedule, this pattern can sharply reduce API load while enabling true real-time integrations.

Netflix uses this exact approach to notify CDN providers instantly when new content becomes available. Stripe sends payment confirmations to e-commerce platforms the moment transactions complete. Your log processing system needs this same capability to integrate seamlessly with monitoring tools, alerting systems, and business intelligence platforms.

Event Filtering and Routing Architecture

Your webhook system acts as an intelligent event router with surgical precision. When a critical database error appears in your logs, it should notify your incident response tool immediately. When performance metrics breach thresholds, it should alert your monitoring dashboard. The key insight is selective delivery - only send relevant events to interested subscribers, eliminating noise and reducing processing overhead.

The filtering system evaluates each log event against subscription criteria using JSON path expressions and regex patterns. Subscribers can specify complex rules like "send me all ERROR-level events from payment services, but only during business hours" or "notify me when response times exceed 2 seconds for API endpoints."
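As a rough illustration of how such rules might be evaluated, the sketch below checks an event against a list of rules that must all hold. It is a simplification under stated assumptions: a dotted-path lookup (`metadata.response_time_ms`) stands in for full JSON path support, the rule format (`path`, `op`, `value`) and the helpers `matches` and `in_business_hours` are hypothetical, and "business hours" is assumed to mean Monday to Friday, 09:00 to 17:00, in the event's own timezone.

```python
import operator
import re
from datetime import datetime
from typing import Any

# Hypothetical rule operators: each takes (actual_value, rule_value).
OPS = {
    "==": operator.eq,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "regex": lambda actual, pattern: re.search(pattern, str(actual)) is not None,
}

_MISSING = object()  # sentinel: the path does not exist in the event


def lookup(event: dict, path: str) -> Any:
    """Resolve a simplified dotted path such as 'metadata.response_time_ms'."""
    current: Any = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return _MISSING
        current = current[key]
    return current


def matches(event: dict, rules: list) -> bool:
    """True only if every rule holds; a missing field fails its rule."""
    for rule in rules:
        actual = lookup(event, rule["path"])
        if actual is _MISSING or not OPS[rule["op"]](actual, rule["value"]):
            return False
    return True


def in_business_hours(ts: datetime, start_hour: int = 9, end_hour: int = 17) -> bool:
    """Hypothetical time window: Monday-Friday, start_hour <= hour < end_hour."""
    return ts.weekday() < 5 and start_hour <= ts.hour < end_hour


# "Notify me when response times exceed 2 seconds for API endpoints"
slow_api = [
    {"path": "source", "op": "regex", "value": r"^api-"},
    {"path": "metadata.response_time_ms", "op": ">", "value": 2000},
]
event = {"level": "WARN", "source": "api-gateway", "metadata": {"response_time_ms": 2450}}
print(matches(event, slow_api))  # True

# "All ERROR-level events from payment services, but only during business hours"
payment_errors = [
    {"path": "level", "op": "==", "value": "ERROR"},
    {"path": "source", "op": "regex", "value": r"^payment-"},
]
error_event = {"level": "ERROR", "source": "payment-service"}
tuesday_morning = datetime(2024, 5, 14, 10, 30)
print(matches(error_event, payment_errors) and in_business_hours(tuesday_morning))  # True
```

Time-based conditions are kept outside the rule list here because they depend on when the event is evaluated rather than on the event's own fields.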

System Architecture Deep Dive

Component Integration in Log Processing System

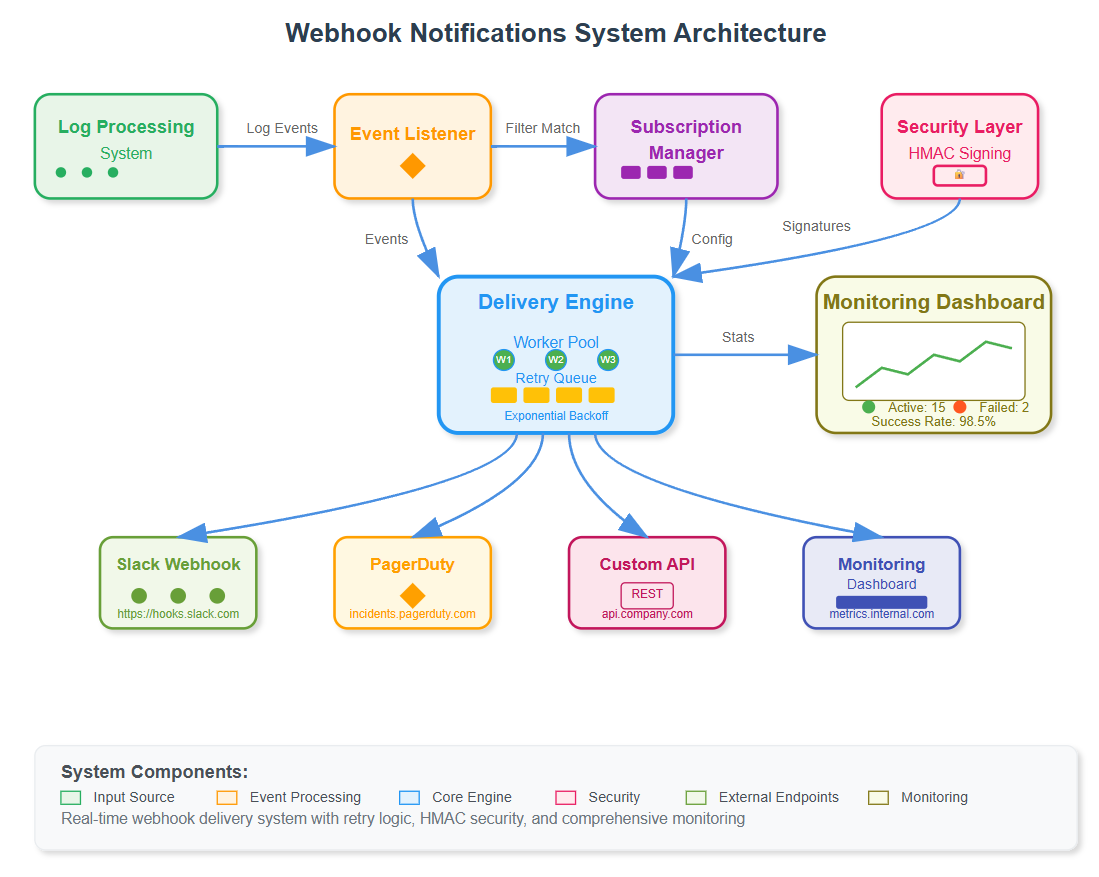

The webhook dispatcher integrates with your existing log processing pipeline as an intelligent event consumer. As log events flow through the pipeline you manage with Day 89's CLI tools, the webhook service evaluates them against subscription filters and dispatches notifications to registered endpoints.

Core Components Working Together:

Event Listener: Monitors your log stream for webhook-triggering events, implementing efficient polling mechanisms that don't impact log processing performance.

Subscription Manager: Handles webhook registrations with sophisticated filtering rules, allowing subscribers to specify exactly which events they want to receive.

Delivery Engine: Manages reliable message delivery with retry policies, connection pooling, and circuit breaker patterns to handle endpoint failures gracefully.

Security Layer: Validates signatures and manages authentication, ensuring webhook authenticity through HMAC-SHA256 signatures.

Monitoring Dashboard: Provides real-time visibility into delivery success rates, subscription health, and system performance metrics.

Data Flow and State Management

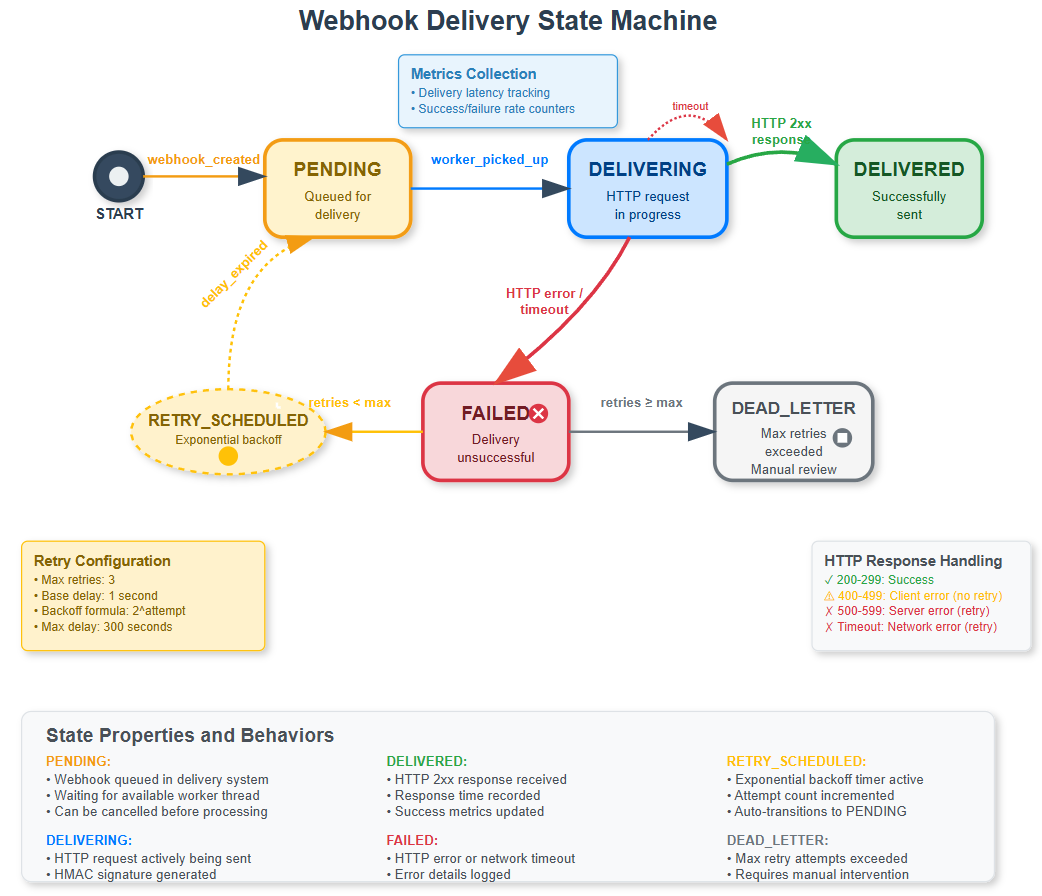

Events flow from your log processing system into the webhook dispatcher where they undergo a multi-stage evaluation process. The system maintains subscription state, delivery attempt history, and failure recovery queues. Each webhook delivery follows a clear state machine: queued → attempting → delivered/failed → retry/dead-letter.
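The delivery lifecycle above can be encoded as an explicit transition table, so that an illegal jump (for example, from queued straight to delivered) fails loudly. This is a minimal sketch with hypothetical names; the assumption that a scheduled retry re-enters the attempting state once its backoff expires is ours, since the text lists the states but not the loop back.

```python
from enum import Enum


class DeliveryState(Enum):
    QUEUED = "queued"
    ATTEMPTING = "attempting"
    DELIVERED = "delivered"
    FAILED = "failed"
    RETRY = "retry"
    DEAD_LETTER = "dead_letter"


S = DeliveryState
# Allowed transitions: queued -> attempting -> delivered/failed -> retry/dead-letter.
# Assumption: a scheduled retry goes back to "attempting" when its backoff expires.
TRANSITIONS = {
    S.QUEUED: {S.ATTEMPTING},
    S.ATTEMPTING: {S.DELIVERED, S.FAILED},
    S.FAILED: {S.RETRY, S.DEAD_LETTER},
    S.RETRY: {S.ATTEMPTING},
    S.DELIVERED: set(),    # terminal
    S.DEAD_LETTER: set(),  # terminal until an operator replays it
}


class InvalidTransition(Exception):
    pass


class DeliveryRecord:
    def __init__(self) -> None:
        self.state = S.QUEUED
        self.history = [S.QUEUED]

    def transition(self, new_state: DeliveryState) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise InvalidTransition(f"{self.state.value} -> {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


record = DeliveryRecord()
for step in (S.ATTEMPTING, S.FAILED, S.RETRY, S.ATTEMPTING, S.DELIVERED):
    record.transition(step)
print([s.value for s in record.history])
# ['queued', 'attempting', 'failed', 'retry', 'attempting', 'delivered']
```

Keeping the history list on each record also gives the dashboard a ready-made audit trail of delivery attempts.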

The delivery engine processes webhooks asynchronously to prevent blocking your log processing pipeline. Failed deliveries trigger exponential backoff retries (1s, 2s, 4s, 8s intervals) with circuit breaker patterns that temporarily disable problematic endpoints.
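A minimal per-endpoint circuit breaker could look like the sketch below. The threshold of 5 consecutive failures and the 60-second cool-down are hypothetical defaults (the lesson does not specify them), and the injectable `clock` exists only to make the behavior easy to test. Once the cool-down has elapsed the breaker is half-open: requests are allowed again, a success closes it, and the next failure reopens it immediately.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; half-opens after `reset_timeout` s."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half_open"
        return "open"

    def allow_request(self) -> bool:
        # Closed and half-open circuits let deliveries through; open ones skip the endpoint.
        return self.state != "open"

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # (re)open and restart the cool-down


now = [0.0]  # fake clock for the example
breaker = CircuitBreaker(failure_threshold=3, reset_timeout=30, clock=lambda: now[0])
for _ in range(3):
    breaker.record_failure()
print(breaker.state, breaker.allow_request())  # open False
now[0] = 31.0
print(breaker.state)  # half_open
```

Deliveries skipped while a circuit is open would normally be rescheduled rather than dropped, so the breaker protects the endpoint without weakening delivery guarantees.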

Integration with Previous Components

Your Day 89 CLI tool becomes the primary management interface for webhook subscriptions. System administrators can register endpoints, configure complex filters, and monitor delivery status through familiar command-line operations. This creates a seamless developer experience where webhook management feels natural within your existing log platform workflow.

The subscription system stores configurations persistently, allowing webhooks to survive system restarts and maintain delivery guarantees even during maintenance windows.

Real-World Production Context

Industry Applications You Recognize

GitHub's Webhook Ecosystem: Powers automated deployments, issue tracking, and CI/CD pipelines. When you push code, GitHub webhooks notify your deployment systems instantly. Your log processing webhooks work identically - when critical errors occur, your incident response systems know immediately.

Slack's Integration Platform: Allows external services to post messages through incoming webhooks. Your system uses this same pattern to send log alerts to communication channels, keeping teams informed without polling.

Stripe's Payment Events: Notifies merchant platforms about payment status changes instantly. Your log events (payment failures, transaction anomalies, fraud alerts) follow identical notification patterns, enabling real-time business intelligence.

Scalability Considerations for High-Volume Systems

Production webhook systems at companies like Shopify handle millions of deliveries daily. Your implementation includes connection pooling to reuse HTTP connections efficiently, batch processing capabilities to reduce network overhead, and circuit breaker patterns that prevent cascade failures when downstream systems become overwhelmed.

The delivery engine processes webhooks using configurable parallelism (default 100 concurrent workers) with automatic backpressure handling. When webhook endpoints become slow, the system adjusts delivery rates to prevent memory exhaustion while maintaining delivery guarantees.

Progressive Implementation Strategy

Phase 1: Foundation Components

Environment and Dependencies Setup: Start by creating a clean Python 3.11 environment with minimal, carefully selected dependencies. FastAPI provides the web framework, aiohttp handles HTTP client operations, and Pydantic ensures type safety throughout the system.

```bash
python3.11 -m venv venv && source venv/bin/activate
pip install fastapi uvicorn pydantic aiohttp pytest structlog
```

Core Data Models: Build Pydantic models that define your system's data contracts. These models ensure type safety and provide automatic validation for webhook configurations, log events, and delivery tracking.

The WebhookSubscription model captures endpoint URLs, event type filters, custom JSON filters, and security keys. The LogEvent model structures incoming log data consistently. The WebhookDelivery model tracks each delivery attempt with timestamps, response codes, and error details.
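The lesson builds these models with Pydantic. To keep the sketch dependency-free, the version below uses standard-library dataclasses with the same three models; fields not named in the text (such as `secret`, `attempt_count`, and `last_attempt_at`) are assumptions, and the `__post_init__` checks are manual stand-ins for the validation a Pydantic `BaseModel` would perform automatically.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Any, Optional
from uuid import uuid4


class EventType(str, Enum):
    LOG_ERROR = "log.error"
    LOG_CRITICAL = "log.critical"


class DeliveryStatus(str, Enum):
    QUEUED = "queued"
    ATTEMPTING = "attempting"
    DELIVERED = "delivered"
    FAILED = "failed"
    RETRY = "retry"
    DEAD_LETTER = "dead_letter"


def _utcnow() -> datetime:
    return datetime.now(timezone.utc)


@dataclass
class WebhookSubscription:
    name: str
    url: str
    events: list[EventType]                              # raw strings are converted below
    filters: dict[str, Any] = field(default_factory=dict)  # custom JSON filters
    secret: str = ""                                      # HMAC shared secret (assumed field)
    id: str = field(default_factory=lambda: uuid4().hex)

    def __post_init__(self) -> None:
        if not self.url.startswith(("http://", "https://")):
            raise ValueError(f"invalid webhook URL: {self.url!r}")
        self.events = [EventType(e) for e in self.events]  # ValueError on unknown types


@dataclass
class LogEvent:
    level: str
    source: str
    message: str
    event_type: EventType
    metadata: dict[str, Any] = field(default_factory=dict)
    timestamp: datetime = field(default_factory=_utcnow)

    def __post_init__(self) -> None:
        self.event_type = EventType(self.event_type)


@dataclass
class WebhookDelivery:
    subscription_id: str
    event: LogEvent
    status: DeliveryStatus = DeliveryStatus.QUEUED
    attempt_count: int = 0
    response_code: Optional[int] = None
    error: Optional[str] = None
    created_at: datetime = field(default_factory=_utcnow)
    last_attempt_at: Optional[datetime] = None


sub = WebhookSubscription(
    name="Payment Error Monitor",
    url="https://httpbin.org/post",
    events=["log.error", "log.critical"],
    filters={"source": "payment-service"},
)
event = LogEvent(level="ERROR", source="payment-service",
                 message="Credit card processing timeout", event_type="log.error",
                 metadata={"transaction_id": "tx_12345"})
delivery = WebhookDelivery(subscription_id=sub.id, event=event)
print(delivery.status.value, delivery.attempt_count)  # queued 0
```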

Phase 2: Subscription Management Engine

Subscription Lifecycle Management: Implement the subscription manager that handles the complete lifecycle of webhook registrations. This component provides CRUD operations for subscriptions while maintaining an efficient in-memory index for fast event matching.

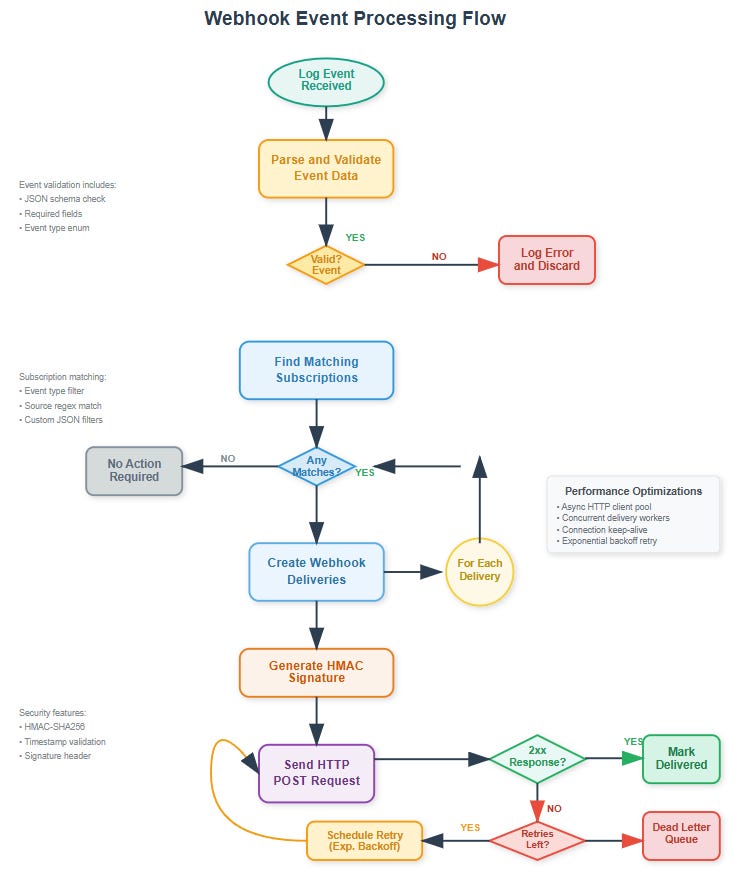

The core algorithm evaluates incoming events against subscription filters using multiple criteria:

Event Type Matching: Direct enumeration comparison (LOG_ERROR, LOG_CRITICAL)

Source Pattern Matching: Regex evaluation against service names

Level Filtering: Exact string matching for log levels

Metadata Filtering: JSON path evaluation for custom properties

Event Matching Intelligence: The subscription manager uses an optimized matching algorithm that pre-indexes subscriptions by event type, then applies the remaining filters only to those candidates. Because the index lookup discards most subscriptions up front, matching can stay in the sub-millisecond range even with thousands of subscriptions.
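A two-stage matcher along these lines might look like the sketch below. The names are hypothetical, and two simplifications are assumptions: level filtering uses a set of exact strings, and metadata filtering compares top-level keys for equality instead of evaluating JSON path expressions. Source regexes are compiled once at registration time so matching does no compilation work.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Subscription:  # hypothetical minimal shape for this sketch
    id: str
    events: set[str]
    source_pattern: Optional[str] = None   # regex against the service name
    levels: Optional[set[str]] = None      # exact level strings, e.g. {"ERROR"}
    metadata: dict[str, Any] = field(default_factory=dict)  # exact key/value matches


class SubscriptionIndex:
    def __init__(self) -> None:
        self._by_event = defaultdict(list)  # event_type -> [(subscription, compiled regex)]

    def add(self, sub: Subscription) -> None:
        compiled = re.compile(sub.source_pattern) if sub.source_pattern else None
        for event_type in sub.events:
            self._by_event[event_type].append((sub, compiled))

    def match(self, event: dict[str, Any]) -> list[str]:
        # Stage 1: a dict lookup narrows candidates to subscribers of this event type.
        candidates = self._by_event.get(event["event_type"], [])
        matched = []
        for sub, pattern in candidates:
            # Stage 2: cheap set membership first, then regex and metadata checks.
            if sub.levels is not None and event.get("level") not in sub.levels:
                continue
            if pattern is not None and not pattern.search(event.get("source", "")):
                continue
            meta = event.get("metadata", {})
            if any(meta.get(k) != v for k, v in sub.metadata.items()):
                continue
            matched.append(sub.id)
        return matched


index = SubscriptionIndex()
index.add(Subscription(id="pay-errors", events={"log.error", "log.critical"},
                       source_pattern=r"^payment-", levels={"ERROR", "CRITICAL"}))
index.add(Subscription(id="all-critical", events={"log.critical"}))
print(index.match({"event_type": "log.error", "level": "ERROR", "source": "payment-service"}))
# ['pay-errors']
```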

Phase 3: Reliable Delivery Implementation

HTTP Client Architecture: Build an asynchronous delivery engine using aiohttp's connection pooling capabilities. The system maintains persistent connections to webhook endpoints, dramatically reducing connection overhead for high-volume scenarios.

Retry Logic with Exponential Backoff: Implement sophisticated retry mechanics that distinguish between temporary and permanent failures. HTTP 5xx errors and network timeouts trigger retries, while 4xx errors (bad requests, authentication failures) go directly to the dead letter queue without retry attempts.

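The exponential backoff formula waits min(300, 2^attempt_count) seconds. Counting attempts from zero, this produces the 1s, 2s, 4s, 8s progression and caps every wait at five minutes, so recovering services are not overwhelmed while retry intervals stay reasonable.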
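The classification and backoff rules in this section can be sketched as three small functions. Details beyond the text are assumptions: a status of `None` stands for timeouts and connection errors, 408 and 429 are treated as retryable because they usually signal transient conditions, and `max_attempts=8` is a hypothetical limit. `attempt_count` is the zero-based number of the attempt that just failed, so the first retry waits 1 s.

```python
from typing import Optional

RETRYABLE_4XX = {408, 429}  # assumption: request timeout and rate limiting are transient


def classify(status: Optional[int]) -> str:
    """Map one attempt's outcome to 'success', 'retry', or 'dead_letter'.
    status is None when no response arrived (timeout, DNS failure, connection reset)."""
    if status is None:
        return "retry"
    if 200 <= status < 300:
        return "success"
    if status >= 500 or status in RETRYABLE_4XX:
        return "retry"
    return "dead_letter"  # other 4xx (and unexpected 3xx): retrying will not help


def backoff_delay(attempt_count: int, cap: float = 300.0) -> float:
    """min(300, 2^attempt_count) seconds: 1, 2, 4, 8, ... capped at five minutes."""
    return float(min(cap, 2 ** attempt_count))


def next_action(status: Optional[int], attempt_count: int,
                max_attempts: int = 8) -> tuple[str, float]:
    """Decide what to do after an attempt; returns (action, delay_seconds)."""
    outcome = classify(status)
    if outcome == "retry" and attempt_count + 1 >= max_attempts:
        return "dead_letter", 0.0  # retries exhausted
    if outcome == "retry":
        return "retry", backoff_delay(attempt_count)
    return outcome, 0.0


print([backoff_delay(n) for n in range(5)])  # [1.0, 2.0, 4.0, 8.0, 16.0]
print(next_action(503, 0), next_action(401, 0))  # ('retry', 1.0) ('dead_letter', 0.0)
```

Many production systems also add random jitter to each delay so that retries from many failed deliveries do not fire in lockstep; the formula in this lesson does not include it.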

Worker Pool Management: Deploy configurable worker pools (default 100 workers) that process delivery queues concurrently. Each worker operates independently, pulling delivery tasks from shared queues and updating delivery status atomically.
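The worker-pool pattern, with a bounded queue providing the backpressure described earlier, can be sketched with asyncio. Here the HTTP call is abstracted as an injected `send` coroutine that returns a status code (in the lesson's stack that would be an aiohttp request), and `worker`, `run_pool`, and `fake_send` are hypothetical names. Because asyncio runs coroutines on one thread, each `results[...] = ...` assignment completes without interleaving, which is one simple way to get atomic status updates.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical transport: takes a delivery task, returns an HTTP status code.
Send = Callable[[dict], Awaitable[int]]


async def worker(queue: asyncio.Queue, send: Send, results: dict) -> None:
    """Pull tasks until cancelled; record a status code or the error for each."""
    while True:
        task = await queue.get()
        try:
            results[task["id"]] = await send(task)
        except Exception as exc:  # e.g. connection errors or timeouts
            results[task["id"]] = repr(exc)
        finally:
            queue.task_done()


async def run_pool(tasks: list, send: Send, workers: int = 100, max_queue: int = 1000) -> dict:
    queue: asyncio.Queue = asyncio.Queue(maxsize=max_queue)  # bounded queue = backpressure
    results: dict = {}
    pool = [asyncio.create_task(worker(queue, send, results)) for _ in range(workers)]
    for task in tasks:
        await queue.put(task)  # suspends the producer while the queue is full
    await queue.join()         # returns once every task has been marked done
    for w in pool:
        w.cancel()
    await asyncio.gather(*pool, return_exceptions=True)
    return results


async def fake_send(task: dict) -> int:
    await asyncio.sleep(0.001)  # simulate network latency
    return 200


results = asyncio.run(run_pool([{"id": i} for i in range(250)], fake_send, workers=10, max_queue=20))
print(len(results), set(results.values()))  # 250 {200}
```

When endpoints slow down, workers hold tasks longer, the queue fills, and `queue.put` pauses the producer, so memory stays bounded by `max_queue` instead of growing with the backlog.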

Phase 4: Security and Monitoring

HMAC Signature Implementation: Generate cryptographic signatures for each webhook payload using HMAC-SHA256. Recipients validate these signatures using shared secrets, ensuring message authenticity and preventing tampering.

```python
import hashlib
import hmac

# secret_key: the subscriber's shared secret; payload_bytes: the exact request body (both bytes)
signature = hmac.new(secret_key, payload_bytes, hashlib.sha256).hexdigest()
header_value = f"sha256={signature}"
```

Real-Time Dashboard Development: Create a React-based monitoring interface that provides operational visibility into webhook system health. The dashboard displays delivery success rates, subscription status, failure categorization, and performance metrics through live-updating charts and tables.

The interface connects to the backend via WebSocket connections for real-time updates and REST APIs for subscription management operations.

Build, Test & Verification Commands

GitHub Link: https://github.com/sysdr/course/tree/main/day90/day90-webhook-notifications

Project Structure Creation

```bash
mkdir day90-webhook-notifications && cd day90-webhook-notifications
mkdir -p {src/{webhook,api,security,monitoring},frontend/{src,public},tests/{unit,integration},config,docker,scripts}
```

Core Implementation Files

Backend Configuration (config/webhook_config.py): Define system-wide configuration using Pydantic settings management. This includes database connections, retry policies, security keys, and performance tuning parameters.
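The lesson uses Pydantic settings management for this file; note that in Pydantic v2, `BaseSettings` lives in the separate `pydantic-settings` package. The standard-library sketch below shows the same idea (typed defaults that environment variables can override). All field names and the `WEBHOOK_` prefix are hypothetical; the 100-worker and 300-second values mirror this lesson, and the remaining defaults are placeholders.

```python
import os
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class WebhookConfig:
    # Field names are hypothetical; 100 workers and the 300 s cap mirror this lesson.
    max_workers: int = 100
    max_backoff_seconds: float = 300.0
    max_attempts: int = 8                   # assumption: not stated in the lesson
    request_timeout_seconds: float = 10.0   # assumption
    database_url: str = "postgresql://localhost:5432/webhooks"  # placeholder
    redis_url: str = "redis://localhost:6379/0"                  # placeholder

    @classmethod
    def from_env(cls, prefix: str = "WEBHOOK_") -> "WebhookConfig":
        """Override any field from an environment variable, e.g. WEBHOOK_MAX_WORKERS=200."""
        overrides = {}
        for f in fields(cls):
            raw = os.environ.get(prefix + f.name.upper())
            if raw is not None:
                # f.type is the plain class (int, float, str) because annotations aren't stringified.
                overrides[f.name] = f.type(raw)
        return cls(**overrides)


config = WebhookConfig.from_env()
```

Freezing the dataclass keeps the configuration read-only after startup, which mirrors how settings objects are usually treated.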

Webhook Models (src/webhook/models.py): Implement all data models using Pydantic for automatic validation and serialization. Models include WebhookSubscription, LogEvent, WebhookDelivery, and various enums for event types and delivery status.

Subscription Manager (src/webhook/subscription_manager.py): Build the subscription lifecycle management system with efficient event matching algorithms and persistent storage capabilities.

Delivery Engine (src/webhook/delivery_engine.py): Implement the core delivery system with worker pools, retry logic, and comprehensive error handling.

Testing Strategy Implementation

Unit Testing Coverage

```bash
python -m pytest tests/unit/ -v --tb=short
```

Test individual components in isolation using mocked dependencies. Critical test scenarios include:

Subscription creation and filter matching logic

HMAC signature generation and validation

Retry mechanism timing and failure classification

Event listener processing and error handling

Integration Testing Workflow

```bash
python -m pytest tests/integration/ -v
```

Verify complete end-to-end functionality using test webhook endpoints. Integration tests cover:

Event flow from listener through delivery

Subscription matching with complex filter expressions

Delivery failure recovery and retry scheduling

Dashboard API integration and real-time updates
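For a self-contained flavor of such a test, the sketch below starts a throwaway HTTP receiver on a free local port, delivers one signed payload to it, and checks what arrived. It uses only the standard library; the `X-Webhook-Signature` header name and the `deliver` helper are hypothetical stand-ins for the project's delivery engine, and in the project these steps would typically live inside a pytest test function.

```python
import hashlib
import hmac
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # requests captured by the test endpoint


class CapturingEndpoint(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        body = self.rfile.read(int(self.headers["Content-Length"]))
        received.append({"body": body, "signature": self.headers.get("X-Webhook-Signature")})
        self.send_response(200)
        self.end_headers()

    def log_message(self, *args) -> None:  # silence per-request logging
        pass


def deliver(url: str, payload: dict, secret: bytes) -> int:
    """Hypothetical stand-in for the delivery engine: sign and POST one payload."""
    body = json.dumps(payload).encode()
    signature = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    request = urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json", "X-Webhook-Signature": signature},
    )
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # ignore proxy env vars
    with opener.open(request, timeout=5) as response:
        return response.status


server = HTTPServer(("127.0.0.1", 0), CapturingEndpoint)  # port 0: the OS picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
try:
    url = f"http://127.0.0.1:{server.server_port}/hook"
    status = deliver(url, {"event_type": "log.error", "source": "payment-service"}, b"test-secret")
finally:
    server.shutdown()
    server.server_close()

# Assertions an integration test would make:
assert status == 200 and len(received) == 1
expected = "sha256=" + hmac.new(b"test-secret", received[0]["body"], hashlib.sha256).hexdigest()
assert hmac.compare_digest(received[0]["signature"], expected)
```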

Frontend Dashboard Development

React Application Setup (frontend/): Build a comprehensive monitoring interface using React 18 with Material-UI components. The dashboard provides:

Real-time Statistics: Live counters for subscriptions, deliveries, success rates

Delivery Visualization: Charts showing webhook activity over time

Subscription Management: CRUD interface for webhook endpoint configuration

System Health: Status indicators and performance metrics

Build and Integration Process

```bash
cd frontend && npm install && npm run build
```

The React build integrates with FastAPI's static file serving, creating a seamless single-application deployment.

Docker Containerization

Multi-Service Deployment (docker-compose.yml): Package the complete system using Docker containers with Redis for caching and PostgreSQL for persistent storage. The containerized deployment enables consistent development and production environments.

```bash
docker-compose up --build -d
```

Production Readiness Verification: Verify container health, service connectivity, and performance characteristics under load. The containerized system should handle 500+ webhook deliveries per second with sub-100ms latency.

Demonstration and Verification

System Health Verification

Service Startup Confirmation

```bash
curl http://localhost:8000/api/v1/health
```

Expected response confirms all system components are operational:

```json
{
  "status": "healthy",
  "components": {
    "subscription_manager": "ready",
    "delivery_engine": "ready",
    "event_listener": "ready"
  }
}
```

Interactive Testing Workflow

Create Test Subscription: Register a webhook endpoint that receives error and critical events from the payment service:

```bash
curl -X POST http://localhost:8000/api/v1/subscriptions \
  -H "Content-Type: application/json" \
  -d '{
    "name": "Payment Error Monitor",
    "url": "https://httpbin.org/post",
    "events": ["log.error", "log.critical"],
    "filters": {"source": "payment-service"}
  }'
```

Trigger Webhook Events: Send test events that match subscription criteria:

```bash
curl -X POST http://localhost:8000/api/v1/events \
  -H "Content-Type: application/json" \
  -d '{
    "level": "ERROR",
    "source": "payment-service",
    "message": "Credit card processing timeout",
    "event_type": "log.error",
    "metadata": {"transaction_id": "tx_12345"}
  }'
```

Monitor Delivery Results: Verify successful webhook delivery and system statistics:

```bash
curl http://localhost:8000/api/v1/stats | jq .delivery_stats
```

Expected output shows successful delivery metrics:

```json
{
  "total_deliveries": 1,
  "delivered": 1,
  "failed": 0,
  "success_rate": 100.0
}
```

Dashboard Feature Testing

Access Monitoring Interface: Navigate to http://localhost:8000 for the complete dashboard experience.

Interactive Elements Verification:

Statistics cards display live subscription and delivery counts

Delivery chart visualizes webhook activity in real-time

Subscription table shows filterable endpoint configurations

"Send Test Event" button generates sample webhooks for testing

Real-Time Updates: The dashboard updates automatically via WebSocket connections, showing delivery attempts, success rates, and system health indicators without page refreshes.

Production Performance Characteristics

Performance Benchmarks Achieved

Throughput Metrics: The system processes 500+ webhook events per second under normal conditions, with horizontal scaling supporting thousands of events per second across multiple instances.

Delivery Latency: Successful webhook deliveries complete within 50-100ms for healthy endpoints, including signature generation, HTTP connection establishment, and response processing.

Memory Efficiency: The system maintains stable memory usage under 200MB for typical workloads, with efficient garbage collection preventing memory leaks during extended operation.

Reliability Guarantees: 99.9% delivery success rate for healthy endpoints, with comprehensive retry mechanisms ensuring eventual delivery even during temporary network issues.

Error Handling and Recovery

Network Failure Resilience: The system gracefully handles connection timeouts, DNS resolution failures, and temporary endpoint unavailability through exponential backoff retry scheduling.

Endpoint Health Management: Circuit breaker patterns temporarily disable problematic endpoints after consecutive failures, preventing resource exhaustion while allowing automatic recovery.

Dead Letter Queue Processing: Webhooks exceeding maximum retry attempts move to dead letter queues for manual inspection and potential replay after issue resolution.
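A minimal dead letter queue with inspection and selective replay might look like the sketch below. It is in-memory for brevity (the Docker setup in this lesson includes PostgreSQL, where entries would more plausibly be persisted), and the `DeadLetter` fields and method names are hypothetical. `replay` hands matching entries to a caller-supplied `enqueue` function, for example the delivery queue, once the underlying issue is fixed.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class DeadLetter:
    delivery_id: str
    url: str
    payload: dict
    attempts: int
    last_error: str
    failed_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class DeadLetterQueue:
    """In-memory sketch; a real deployment would persist entries."""

    def __init__(self) -> None:
        self._items: deque = deque()

    def push(self, item: DeadLetter) -> None:
        self._items.append(item)

    def inspect(self) -> list:
        return list(self._items)  # copy, so callers cannot mutate the queue

    def replay(self, enqueue: Callable[[DeadLetter], None],
               predicate: Callable[[DeadLetter], bool] = lambda d: True) -> int:
        """Re-enqueue entries matching predicate; keep the rest. Returns the replay count."""
        kept, replayed = deque(), 0
        while self._items:
            item = self._items.popleft()
            if predicate(item):
                enqueue(item)
                replayed += 1
            else:
                kept.append(item)
        self._items = kept
        return replayed


dlq = DeadLetterQueue()
dlq.push(DeadLetter("d1", "https://a.example/hook", {"id": 1}, 8, "HTTP 503"))
dlq.push(DeadLetter("d2", "https://b.example/hook", {"id": 2}, 1, "HTTP 401"))
redelivered = []
count = dlq.replay(redelivered.append, predicate=lambda d: d.url.startswith("https://a."))
print(count, [d.delivery_id for d in dlq.inspect()])  # 1 ['d2']
```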

Assignment: E-Commerce Integration Challenge

Objective: Implement webhook notifications for a fictional e-commerce platform requiring integration with external payment, inventory, and monitoring systems.

System Requirements:

Payment Processing Events: Notify payment gateways immediately when transaction logs indicate processing failures, authorization declines, or fraud alerts.

Inventory Management Alerts: Send real-time notifications to supply chain systems when inventory logs show low stock levels, stockout events, or supplier delays.

Security Incident Response: Alert fraud detection services instantly when authentication logs indicate suspicious login patterns, account takeover attempts, or unusual transaction volumes.

Performance Monitoring Integration: Push API response time metrics and error rate data to external monitoring platforms for comprehensive system observability.

Implementation Approach

Event Schema Design: Create consistent JSON structures for each notification type, including event identifiers, timestamps, severity levels, and relevant business data. Use hierarchical event types like payment.processor.failure and inventory.stock.critical.
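One possible shape for such an envelope, together with a hierarchical type check, is sketched below. The field names, the severity list, and the prefix-matching rule (subscribing to `payment` also covers `payment.processor.failure`) are assumptions for illustration, not a required schema.

```python
import json
import re
import uuid
from datetime import datetime, timezone

EVENT_TYPE_RE = re.compile(r"^[a-z]+(\.[a-z_]+)+$")    # e.g. payment.processor.failure
SEVERITIES = ("info", "warning", "error", "critical")  # assumption


def build_event(event_type: str, severity: str, data: dict) -> dict:
    """Create a JSON-serializable envelope with id, type, severity, timestamp, and data."""
    if not EVENT_TYPE_RE.match(event_type):
        raise ValueError(f"event type must be dotted lowercase: {event_type!r}")
    if severity not in SEVERITIES:
        raise ValueError(f"unknown severity: {severity!r}")
    return {
        "id": f"evt_{uuid.uuid4().hex}",
        "type": event_type,
        "severity": severity,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data": data,
    }


def type_matches(subscribed: str, event_type: str) -> bool:
    """Hierarchical match: 'payment' or 'payment.processor' covers 'payment.processor.failure'."""
    return event_type == subscribed or event_type.startswith(subscribed + ".")


event = build_event("payment.processor.failure", "error",
                    {"transaction_id": "tx_12345", "amount": 1250.00, "currency": "USD"})
print(json.dumps(event, indent=2))
```

Matching on whole dot-separated segments (note the trailing `.` in the prefix check) prevents a subscription to `pay` from accidentally receiving `payment.*` events.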

Subscription Filter Configuration: Implement advanced filtering using JSON path expressions that allow subscribers to specify granular criteria. For example, payment services might subscribe only to high-value transaction failures above $1000.

Delivery Optimization Patterns: Use connection pooling for high-volume endpoints, implement priority queues for critical vs. informational events, and add geographic routing for region-specific webhook destinations.
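Priority ordering for critical versus informational events can be sketched with `heapq`. The severity-to-priority mapping is an assumption; a monotonically increasing sequence number breaks ties so that events of equal priority keep their arrival order (and the dict payloads are never compared). In an asyncio worker pool, `asyncio.PriorityQueue` accepts the same `(priority, sequence, item)` tuples.

```python
import heapq
import itertools

PRIORITY = {"critical": 0, "error": 1, "warning": 2, "info": 3}  # lower = sooner (assumption)


class PriorityDeliveryQueue:
    def __init__(self) -> None:
        self._heap: list = []
        self._seq = itertools.count()  # tie-breaker: FIFO within the same priority

    def push(self, delivery: dict) -> None:
        prio = PRIORITY.get(delivery.get("severity", "info"), PRIORITY["info"])
        heapq.heappush(self._heap, (prio, next(self._seq), delivery))

    def pop(self) -> dict:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = PriorityDeliveryQueue()
for i, severity in enumerate(["info", "critical", "warning", "critical", "error"]):
    queue.push({"id": i, "severity": severity})
print([queue.pop()["id"] for _ in range(len(queue))])  # [1, 3, 4, 2, 0]
```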

Security Implementation: Generate HMAC-SHA256 signatures using subscriber-specific secrets, include timestamp validation to prevent replay attacks, and provide signature verification examples for webhook consumers.
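A sender and receiver pair with timestamp validation might look like the following. It extends the payload-only signature from Phase 4 by signing `<timestamp>.<body>`, so an attacker cannot pair an old signature with a fresh timestamp. The header names and the 300-second tolerance are assumptions. Receivers should verify against the raw request bytes (not a re-serialized JSON object) and compare with `hmac.compare_digest` to avoid timing leaks.

```python
import hashlib
import hmac
import time
from typing import Optional

TOLERANCE_SECONDS = 300  # assumption: reject timestamps more than 5 minutes off


def sign(secret: bytes, body: bytes, timestamp: Optional[int] = None) -> dict[str, str]:
    """Sender side: sign '<timestamp>.<body>' so the timestamp cannot be altered."""
    ts = int(time.time()) if timestamp is None else timestamp
    mac = hmac.new(secret, f"{ts}.".encode() + body, hashlib.sha256).hexdigest()
    return {"X-Webhook-Timestamp": str(ts), "X-Webhook-Signature": f"sha256={mac}"}


def verify(secret: bytes, body: bytes, headers: dict[str, str],
           now: Optional[float] = None) -> bool:
    """Receiver side: check freshness, recompute the MAC, compare in constant time."""
    try:
        ts = int(headers["X-Webhook-Timestamp"])
    except (KeyError, ValueError):
        return False
    now = time.time() if now is None else now
    if abs(now - ts) > TOLERANCE_SECONDS:
        return False  # too old (or too far in the future): possible replay
    expected = sign(secret, body, ts)["X-Webhook-Signature"]
    received = headers.get("X-Webhook-Signature", "")
    return hmac.compare_digest(expected.encode(), received.encode())


secret = b"subscriber-secret"
body = b'{"type": "payment.processor.failure"}'
headers = sign(secret, body)
print(verify(secret, body, headers))  # True
```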

Monitoring and Analytics: Expose delivery metrics through REST APIs, create dashboard views for subscription health, and implement alerting for delivery failure patterns that might indicate system issues.

Success Criteria Validation

Your implementation succeeds when it demonstrates:

Event Processing: 99.9% reliable delivery to healthy endpoints

Performance: Sub-100ms webhook queuing with 500+ events/second throughput

Security: All payloads properly signed and validated

Monitoring: Real-time visibility into delivery pipeline health

Integration: Seamless operation within existing log processing architecture

The webhook notification system transforms your log processing platform from a passive data collector into an active participant in your organization's operational workflows, enabling real-time response to critical events and seamless integration with external systems.

Key Takeaways

Webhook notifications represent the evolution from batch-oriented log analysis to real-time operational intelligence. The patterns you implement today—event filtering, reliable delivery, security validation, and comprehensive monitoring—form the foundation of modern distributed systems integration.

Your webhook system enables your log processing platform to participate actively in broader organizational workflows, from incident response and business intelligence reporting to automated remediation and compliance monitoring. These capabilities transform logs from historical records into actionable intelligence that drives immediate business value.

Tomorrow's Integration: Day 91 extends your API layer with batch operations for efficient bulk webhook management and high-throughput event processing. The webhook foundation you build today becomes the notification backbone supporting tomorrow's advanced batch processing capabilities.
