Day 31: RabbitMQ Message Queue for Distributed Log Processing

What We’re Building Today

Today we implement a production-grade message queue infrastructure using RabbitMQ for distributed log processing:

Multi-exchange RabbitMQ topology with topic-based routing for different log severity levels

Spring AMQP integration with automatic message serialization and connection pooling

Durable queues and persistent messages, combined with consumer acknowledgements, so logs are not lost when a consumer fails

Dead letter queues (DLQ) for failed message handling and retry mechanisms

Monitoring infrastructure tracking queue depth, consumer throughput, and message latencies
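To make the dead letter idea from the list above concrete before wiring up a real broker, here is a minimal in-memory sketch in plain Java. It is not the RabbitMQ or Spring AMQP API: the class `DeadLetterSketch`, its methods, and the retry budget of three attempts are all assumptions chosen for illustration. A failing message is requeued until the budget is spent, then parked in a dead letter list instead of being dropped.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Predicate;

// In-memory model of retry-then-dead-letter handling.
// Hypothetical names throughout; this does not talk to RabbitMQ.
class DeadLetterSketch {

    // A message body plus the number of delivery attempts already made.
    static final class Delivery {
        final String body;
        final int attempts;

        Delivery(String body, int attempts) {
            this.body = body;
            this.attempts = attempts;
        }
    }

    static final int MAX_ATTEMPTS = 3; // assumed retry budget

    final Deque<Delivery> workQueue = new ArrayDeque<>();
    final List<String> processed = new ArrayList<>();
    final List<String> deadLetters = new ArrayList<>();

    void publish(String body) {
        workQueue.addLast(new Delivery(body, 0));
    }

    // Drains the work queue. The handler returns true on success.
    // A failed message is requeued until MAX_ATTEMPTS is reached,
    // after which it is moved to the dead letter list.
    void drain(Predicate<String> handler) {
        while (!workQueue.isEmpty()) {
            Delivery d = workQueue.pollFirst();
            int attempts = d.attempts + 1;
            boolean ok;
            try {
                ok = handler.test(d.body);
            } catch (RuntimeException e) {
                ok = false; // treat an exception like a negative acknowledgement
            }
            if (ok) {
                processed.add(d.body);
            } else if (attempts < MAX_ATTEMPTS) {
                workQueue.addLast(new Delivery(d.body, attempts));
            } else {
                deadLetters.add(d.body);
            }
        }
    }

    public static void main(String[] args) {
        DeadLetterSketch q = new DeadLetterSketch();
        q.publish("INFO service started");
        q.publish("ERROR <malformed>");
        q.drain(body -> !body.contains("<malformed>"));
        System.out.println("processed=" + q.processed + " dead=" + q.deadLetters);
    }
}
```

In a real deployment the broker does this bookkeeping: a queue is typically declared with a dead letter exchange, and rejected or expired messages are republished there rather than tracked by the consumer.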

Why This Matters: The Message Queue Pattern in Production Systems

When Uber processes tens of millions of rides daily, each ride generates thousands of events: GPS coordinates, driver state changes, payment events, and customer interactions. These events can’t be processed synchronously, because a single slow database write would stall every caller waiting on it and the delay would cascade through the entire system. Instead, Uber uses message queues to decouple event production from consumption, allowing services to scale independently.

Message queues solve three critical distributed system challenges: temporal decoupling (producers and consumers don’t need to be available simultaneously), load leveling (queues absorb traffic spikes so consumers can work through the backlog at their own pace), and load distribution (multiple consumers can process messages in parallel). Netflix routes billions of events daily through message queues, which act as shock absorbers between its microservices.
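The three properties can be seen in miniature with a plain `java.util.concurrent` queue standing in for the broker. This is only a sketch under stated assumptions: the class `QueueDecouplingSketch` and its `run` method are hypothetical, and an in-process `LinkedBlockingQueue` has none of RabbitMQ's durability or networking. The producer publishes a burst and finishes before any consumer starts (temporal decoupling), the queue holds the backlog (load leveling), and several workers drain it in parallel (load distribution).

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// An in-process queue standing in for a broker. Hypothetical names.
class QueueDecouplingSketch {

    // Publishes `messages` items in one burst, then lets `consumers`
    // worker threads drain the queue. Returns how many were processed.
    static int run(int messages, int consumers) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();

        // The producer completes before any consumer exists.
        for (int i = 0; i < messages; i++) {
            queue.add("log-" + i);
        }

        AtomicInteger processed = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(consumers);
        for (int c = 0; c < consumers; c++) {
            pool.execute(() -> {
                // poll() returns null once the backlog is empty,
                // which ends this worker.
                while (queue.poll() != null) {
                    processed.incrementAndGet();
                }
            });
        }

        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return processed.get();
    }

    public static void main(String[] args) {
        System.out.println("processed " + run(1000, 3) + " messages");
    }
}
```

Each message is taken by exactly one worker, which is the same competing-consumers behavior several consumers get when they subscribe to a single RabbitMQ queue.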

The choice between RabbitMQ and Kafka isn’t binary; they serve different patterns. RabbitMQ excels at complex routing, priority queues, and request-reply patterns, often with sub-10ms latencies. Kafka dominates event streaming and replay scenarios. Today’s lesson demonstrates RabbitMQ’s strength in flexible message routing and acknowledgement-based delivery guarantees.

System Architecture Overview

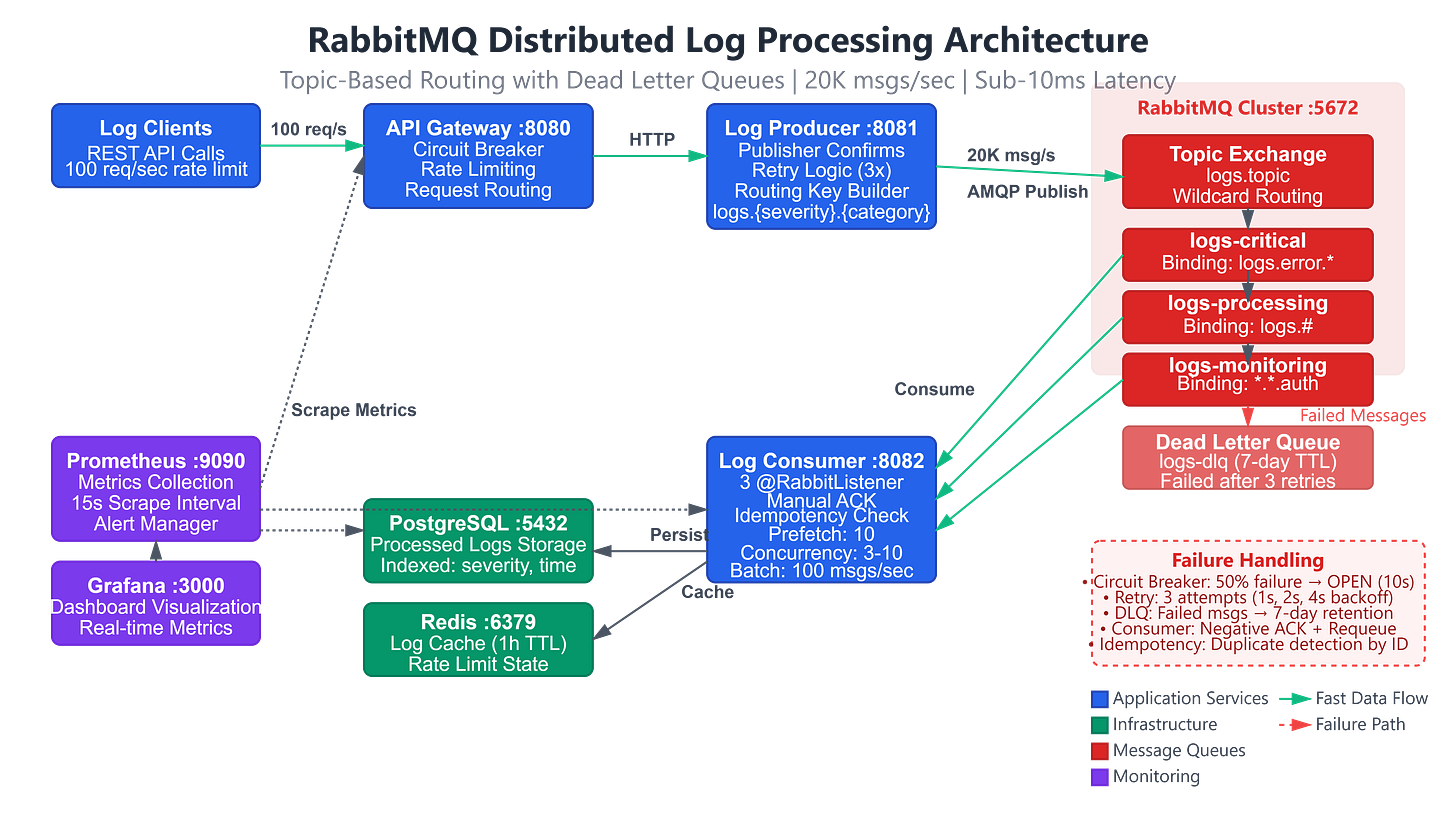

The diagram above shows our complete system with:

API Gateway receiving client requests

Log Producer publishing to RabbitMQ

Topic Exchange routing to three queues

Log Consumer processing messages

PostgreSQL and Redis for storage

Prometheus and Grafana for monitoring
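To illustrate how a topic exchange decides which queue receives a message, the sketch below reimplements AMQP topic matching in plain Java: routing keys are dot-separated words, `*` matches exactly one word, and `#` matches zero or more words. The queue names, the binding patterns, and the `log.<severity>.<service>` key format are assumptions made for this example, not the lesson's actual topology, and the class is a stand-in for what the broker does internally.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of topic exchange routing. Hypothetical names.
class TopicRoutingSketch {

    // Hypothetical bindings: queue name -> binding pattern.
    static final Map<String, String> BINDINGS = new LinkedHashMap<>();
    static {
        BINDINGS.put("logs.error", "log.error.#");
        BINDINGS.put("logs.warning", "log.warn.#");
        BINDINGS.put("logs.info", "log.info.#");
    }

    // AMQP topic semantics: '*' is exactly one word, '#' is zero or more.
    static boolean matches(String pattern, String routingKey) {
        return match(pattern.split("\\."), 0, routingKey.split("\\."), 0);
    }

    private static boolean match(String[] p, int i, String[] k, int j) {
        if (i == p.length) {
            return j == k.length; // pattern used up: key must be too
        }
        if (p[i].equals("#")) {
            // Try letting '#' absorb 0, 1, 2, ... of the remaining words.
            for (int next = j; next <= k.length; next++) {
                if (match(p, i + 1, k, next)) {
                    return true;
                }
            }
            return false;
        }
        if (j == k.length) {
            return false; // pattern still needs a word, key has none left
        }
        if (p[i].equals("*") || p[i].equals(k[j])) {
            return match(p, i + 1, k, j + 1);
        }
        return false;
    }

    // Returns every queue whose binding pattern matches the routing key.
    static List<String> route(String routingKey) {
        List<String> queues = new ArrayList<>();
        for (Map.Entry<String, String> b : BINDINGS.entrySet()) {
            if (matches(b.getValue(), routingKey)) {
                queues.add(b.getKey());
            }
        }
        return queues;
    }

    public static void main(String[] args) {
        System.out.println(route("log.error.payment"));
        System.out.println(route("log.info.auth.login"));
        System.out.println(route("metrics.cpu"));
    }
}
```

A key that matches no binding, such as `metrics.cpu` here, is routed to no queue; on a real broker such a message is dropped unless an alternate exchange or the mandatory flag is used.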