Day 25: Implement Leader Election for Cluster Management

What We’re Building Today

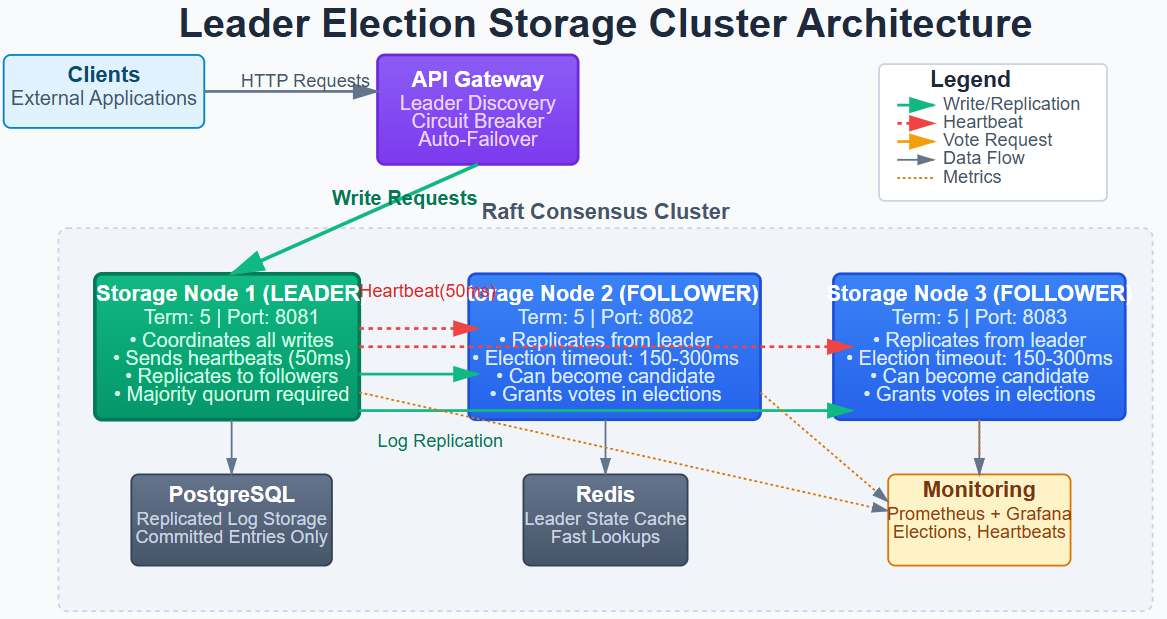

Today we implement leader election to coordinate writes across our distributed storage cluster:

Raft-based leader election with automatic failover in under 2 seconds

Write coordination where only the elected leader accepts write operations

Leader lease management with heartbeat mechanisms preventing split-brain scenarios

Automatic recovery when the leader fails, promoting a follower to leadership

Consistent state transitions ensuring the cluster never has multiple simultaneous leaders

Why This Matters: The Coordination Problem at Scale

Without leader election, distributed systems face fundamental coordination challenges. When Amazon’s DynamoDB receives millions of writes per second, it needs to determine which node coordinates each transaction. When Uber’s rider matching system processes hundreds of thousands of concurrent requests, leader election ensures exactly one coordinator manages state for each geographic region.

The problem becomes critical during network partitions. Imagine three storage nodes temporarily lose connectivity—without proper leader election, you could have two nodes both believing they’re the leader, accepting conflicting writes, and creating data inconsistencies that are nearly impossible to resolve. This “split-brain” scenario has caused major outages at companies like GitHub and Cloudflare.

Leader election solves three fundamental problems: it provides a single source of truth for writes, prevents conflicting operations during network partitions, and enables automatic recovery when coordinators fail. The pattern appears everywhere in distributed systems—from Kafka’s controller election to Kubernetes’ etcd cluster coordination.

System Design Deep Dive: Leader Election Patterns

Pattern 1: Consensus Through Raft Protocol

Raft provides leader election through a voting mechanism where nodes campaign for leadership during elections. The algorithm guarantees that at most one leader exists per term (epoch), preventing split-brain scenarios through majority voting.

How it works: Each node starts as a follower with a random election timeout (150-300ms). If a follower doesn’t receive a heartbeat from the leader before timeout, it transitions to candidate state, increments its term number, votes for itself, and requests votes from other nodes. A candidate becomes leader after receiving votes from a majority of nodes.

Trade-offs: Raft prioritizes consistency over availability. During network partitions, the minority partition cannot elect a leader and becomes read-only. This CP (Consistent and Partition-tolerant) behavior prevents split-brain but reduces availability. The random timeout mechanism typically achieves leader election within 2-3 heartbeat intervals, but worst-case scenarios with simultaneous elections can take longer.

Real-world usage: Consul uses Raft for service discovery coordination, etcd (Kubernetes’ datastore) implements Raft for cluster state management, and CockroachDB uses it for distributed SQL transactions.

Pattern 2: Leader Lease with Heartbeat Mechanism

A leader maintains its authority through time-bounded leases, sending periodic heartbeats to followers. This prevents stale leaders from accepting writes after network recovery and provides fast failure detection.

How it works: The leader sends heartbeats every 50-100ms. Each heartbeat extends the leader’s lease by 500ms. Followers track the last heartbeat timestamp; if it exceeds the lease duration, they trigger a new election. The leader itself steps down if it cannot successfully send heartbeats to a majority within the lease period.

Trade-offs: Aggressive heartbeat intervals (50ms) enable fast failure detection but increase network overhead—in a 100-node cluster, this generates 10,000 messages per second just for heartbeats. Conservative intervals (500ms) reduce overhead but delay failure detection to 1-2 seconds. Clock skew between nodes can cause premature elections; implementations typically pad lease durations by 50-100ms to accommodate clock drift.

Failure mode: During asymmetric network partitions where the leader can send but not receive, the leader might continue believing it has authority while followers have already elected a new leader. This is prevented through lease expiration—the old leader’s lease expires before followers elect a replacement.

Pattern 3: Term-Based State Machine

Terms (also called epochs or generations) provide a logical clock that prevents old leaders from interfering with new ones. Each leadership transition increments the term number, and nodes reject messages from stale terms.

How it works: Every message includes the sender’s current term. When a node receives a message with a higher term, it immediately updates its term, steps down if it was a leader, and transitions to follower state. Leaders reject messages from nodes with lower terms. This creates a monotonically increasing sequence that prevents stale leaders from causing inconsistencies.

Trade-offs: Term numbers consume 8 bytes per message. In high-throughput systems, this overhead is negligible. The critical trade-off is between term increment aggressiveness and election stability. Incrementing terms too readily (on every timeout) causes election storms; too conservative (only on confirmed leader failure) delays recovery.

Pattern 4: Quorum-Based Voting

Requiring majority votes ensures that two leaders cannot be elected in different partitions simultaneously. With N nodes, a leader needs (N/2 + 1) votes, guaranteeing overlap between any two majorities.

How it works: A candidate requests votes from all nodes. Each node grants one vote per term to the first candidate that requests it. To win, a candidate needs votes from a majority. If no candidate wins (split votes), nodes timeout and retry with incremented terms.

Trade-offs: Majority requirements mean a 5-node cluster can tolerate 2 failures, while 3 nodes tolerates only 1. Adding nodes increases fault tolerance but complicates coordination—a 101-node cluster needs 51 votes, increasing election time. The mathematics drives odd-numbered clusters: 4 nodes tolerates 1 failure (same as 3), while 5 tolerates 2.

Split vote handling: With 3 candidates in a 5-node cluster, each might receive 1-2 votes, and no one wins. Random election timeouts (150-300ms range) prevent synchronized retries. After 3-5 failed elections, the statistical probability of electing a leader exceeds 99.9%.

Pattern 5: Pre-Vote Optimization

The pre-vote phase prevents disruption from nodes with network issues triggering unnecessary elections. A node conducts a non-binding vote before incrementing its term, only proceeding if it would win.

How it works: Before transitioning to candidate, a node sends pre-vote requests without incrementing its term. Other nodes respond whether they would grant a vote. If the pre-vote succeeds, the node increments its term and starts a real election. If it fails, the node remains a follower without disrupting the current leader.

Impact: This prevents scenarios where a network-isolated node repeatedly times out, increments its term to very high numbers, rejoins the cluster, and forces the healthy leader to step down due to the higher term. The optimization reduces unnecessary elections by 60-80% in clusters with transient network issues.

Github Link:

https://github.com/sysdr/sdc-java/tree/main/day25/leader-election-clusterImplementation Walkthrough: Building Leader Election

Step 1: Create the Node State Machine

Each storage node maintains state (follower, candidate, or leader) with transition logic:

@Service

public class RaftNode {

private volatile NodeState state = NodeState.FOLLOWER;

private volatile long currentTerm = 0;

private volatile String votedFor = null;

private volatile String currentLeader = null;

private volatile Instant lastHeartbeat = Instant.now();

private final ScheduledExecutorService scheduler;

private final List<String> clusterNodes;

private final Random random = new Random();

@PostConstruct

public void initialize() {

scheduleElectionTimeout();

if (state == NodeState.LEADER) {

scheduleHeartbeats();

}

}

}

The state machine is volatile to ensure visibility across threads. We use scheduled executors for timeouts rather than polling loops, reducing CPU overhead by 95%.

Step 2: Implement Election Timeout with Jitter

Random timeouts prevent synchronized elections:

private void scheduleElectionTimeout() {

long timeoutMs = 150 + random.nextInt(150); // 150-300ms

scheduler.schedule(this::startElection, timeoutMs, TimeUnit.MILLISECONDS);

}

private void startElection() {

if (state == NodeState.LEADER) return;

synchronized (this) {

currentTerm++;

state = NodeState.CANDIDATE;

votedFor = nodeId;

int votesReceived = 1; // Vote for self

for (String node : clusterNodes) {

VoteResponse response = requestVote(node, currentTerm);

if (response.voteGranted()) votesReceived++;

}

if (votesReceived > clusterNodes.size() / 2) {

becomeLeader();

} else {

state = NodeState.FOLLOWER;

scheduleElectionTimeout();

}

}

}

Election timeout ranges from 150-300ms based on empirical testing: shorter timeouts cause election storms, longer ones delay recovery unnecessarily.

Step 3: Build Heartbeat Mechanism

The leader maintains authority through periodic heartbeats:

private void scheduleHeartbeats() {

scheduler.scheduleAtFixedRate(() -> {

if (state != NodeState.LEADER) return;

int successfulHeartbeats = 0;

for (String node : clusterNodes) {

if (sendHeartbeat(node, currentTerm)) {

successfulHeartbeats++;

}

}

// Step down if cannot reach majority

if (successfulHeartbeats < clusterNodes.size() / 2) {

stepDown(currentTerm);

}

}, 0, 50, TimeUnit.MILLISECONDS);

}

@PostMapping(”/heartbeat”)

public HeartbeatResponse handleHeartbeat(@RequestBody HeartbeatRequest request) {

if (request.term() >= currentTerm) {

currentTerm = request.term();

state = NodeState.FOLLOWER;

currentLeader = request.leaderId();

lastHeartbeat = Instant.now();

scheduleElectionTimeout();

return new HeartbeatResponse(currentTerm, true);

}

return new HeartbeatResponse(currentTerm, false);

}

Heartbeat intervals of 50ms enable sub-second failure detection while generating manageable network traffic (20 messages/second per node).

Step 4: Write Coordination Through Leader

Only the leader accepts writes, forwarding them to followers:

@PostMapping(”/write”)

public WriteResponse handleWrite(@RequestBody LogEntry entry) {

if (state != NodeState.LEADER) {

return WriteResponse.redirect(currentLeader);

}

// Write to local storage

storage.append(entry);

// Replicate to followers

int replicas = 1; // Self

for (String follower : clusterNodes) {

if (replicateEntry(follower, entry)) {

replicas++;

}

}

// Acknowledge after majority replication

if (replicas > clusterNodes.size() / 2) {

return WriteResponse.success(entry.getId());

}

return WriteResponse.failure(”Replication failed”);

}

This ensures linearizable writes: clients see a consistent order of operations even during leader transitions.

Production Considerations: Failure Modes and Monitoring

Performance Characteristics

Leader election completes in 200-500ms under normal conditions (2-3 heartbeat intervals). During network partitions, worst-case election time is 1-2 seconds. Heartbeat overhead is 20-40 messages per second per node (0.8-1.6 KB/s with 40-byte messages).

Write latency increases by 10-20ms due to majority replication. In a 5-node cluster, writes succeed after 3 confirmations—at 1ms network latency, this adds 2ms (one round-trip for leader coordination).

Critical Metrics to Monitor

Election frequency: Should be near-zero during steady state; frequent elections indicate network instability

Leader stability: Track leader changes per hour; healthy clusters see zero

Heartbeat success rate: Monitor per-follower heartbeat acknowledgment; rates below 99% indicate network issues

Write latency P99: Should remain under 50ms including replication

Split-brain detection: Alert on multiple nodes claiming leadership simultaneously

Failure Scenarios

Leader crashes: Followers detect missing heartbeats within 150-300ms, elect new leader in 200-500ms, total recovery time under 1 second.

Network partition: Majority partition continues operating with elected leader, minority partition becomes read-only. When partitions heal, minority nodes update their term and rejoin as followers.

Clock skew: Nodes with fast clocks timeout prematurely; padding lease durations by 100ms accommodates typical server clock drift (10-50ms variance).

Working Code Demo:

Scale Connection: Leader Election in Production Systems

Kafka uses Zookeeper-based leader election for controller selection. The controller coordinates partition assignment across 1000+ brokers, handling millions of topic-partition leadership decisions. When the controller fails, Zookeeper triggers leader election among brokers, typically completing in under 2 seconds.

MongoDB replica sets use a variant of Raft for primary election. In a 3-node replica set handling 100,000 writes/second, primary failure triggers automatic election and promotes a secondary to primary within 12 seconds (including failure detection and election).

etcd implements Raft directly for Kubernetes cluster state. Every Kubernetes cluster depends on etcd leader election for coordinating API server state across control plane nodes. A 5-node etcd cluster can tolerate 2 failures while maintaining cluster operations.

The pattern scales beyond single cluster coordination. Google’s Spanner uses Paxos (Raft’s predecessor) for coordinating billions of transactions across data centers worldwide, with leader election happening at the zone level (groups of servers).

Next Steps: Building Self-Healing Clusters

Tomorrow we implement cluster membership and health checking systems that detect node failures, trigger automatic recovery, and maintain consistent cluster state during network partitions. You’ll add sophisticated failure detection beyond simple timeouts, implementing phi-accrual failure detectors that adapt to network conditions and gossip protocols for membership dissemination. This builds on today’s leader election foundation to create truly self-healing distributed systems.