Day 21: Log Enrichment Pipeline - Adding Intelligence to Raw Events

What We’re Building Today

Today we implement a production-grade log enrichment service that transforms raw log events into queryable records by adding contextual metadata. It consists of:

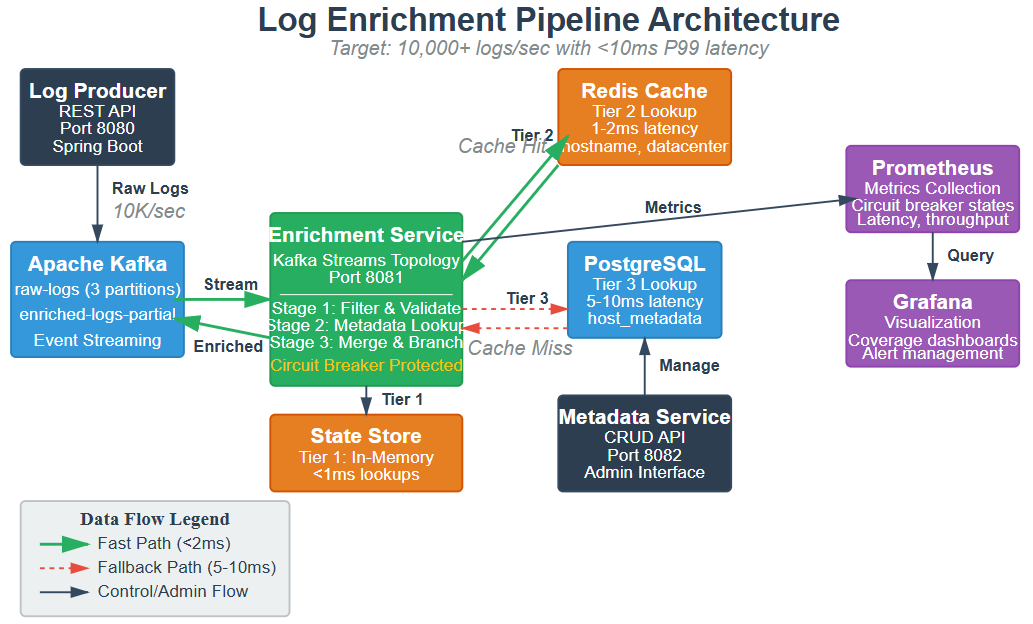

- Distributed enrichment service processing 10,000+ logs/second with Spring Boot and Kafka Streams
- Multi-source metadata resolver pulling context from Redis cache, PostgreSQL, and external APIs
- Schema-aware enrichment that preserves compatibility with Day 20’s normalized formats
- Asynchronous enrichment pipeline with circuit breakers and fallback strategies
- Observable enrichment metrics tracking success rates, latency, and enrichment coverage
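To make the shape of these pieces concrete, here is a minimal sketch of a cache-first enricher in plain Java. Everything in it is an assumption for illustration: `RawLogEvent`, `EnrichedLogEvent`, and `CacheFirstEnricher` are hypothetical names, the in-memory map stands in for Redis, and the `slowSource` function stands in for a PostgreSQL or external API lookup. It is not Day 20's actual schema or the final service.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

/** Hypothetical raw event; the field names are illustrative only. */
record RawLogEvent(String serviceId, String message) {}

/** The raw event is kept untouched; metadata is attached alongside it. */
record EnrichedLogEvent(RawLogEvent raw, Map<String, String> metadata, boolean fullyEnriched) {}

class CacheFirstEnricher {
    // In-memory stand-in for a Redis cache, keyed by service id.
    private final Map<String, Map<String, String>> cache = new HashMap<>();
    // Stand-in for a slower source such as PostgreSQL or an external API.
    private final Function<String, Optional<Map<String, String>>> slowSource;
    private long total;
    private long enriched;

    CacheFirstEnricher(Function<String, Optional<Map<String, String>>> slowSource) {
        this.slowSource = slowSource;
    }

    EnrichedLogEvent enrich(RawLogEvent event) {
        total++;
        Map<String, String> meta = cache.get(event.serviceId());
        if (meta == null) {
            // Cache miss: ask the slower source and remember a successful answer.
            meta = slowSource.apply(event.serviceId()).orElse(null);
            if (meta != null) {
                cache.put(event.serviceId(), meta);
            }
        }
        if (meta == null) {
            // No metadata available: pass the event through rather than dropping it.
            return new EnrichedLogEvent(event, Map.of(), false);
        }
        enriched++;
        return new EnrichedLogEvent(event, meta, true);
    }

    /** Fraction of events that received metadata (the "enrichment coverage" metric). */
    double coverage() {
        return total == 0 ? 0.0 : (double) enriched / total;
    }
}
```

An event whose service id is unknown to every source still comes out the other side, flagged with `fullyEnriched = false`, so coverage can be tracked without losing logs.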

Why This Matters: From Noise to Intelligence

Raw logs tell you what happened. Enriched logs tell you why it matters.

At a scale like Uber's, where 100 billion events are processed daily, a raw log shows only “request failed with 503.” An enriched log can reveal: “Payment service in us-east-1 experiencing cascading failures during peak demand, affecting 2.3% of riders in the Manhattan zone, correlated with database connection pool exhaustion.” This transformation happens through enrichment pipelines.

Netflix’s enrichment layer adds viewer demographics, A/B test cohorts, device capabilities, and network conditions to every playback event. This metadata powers their recommendation engine, capacity planning, and real-time quality optimization. Without enrichment, logs are just timestamps and error codes. With enrichment, they become the foundation for machine learning, anomaly detection, and business intelligence.

The challenge at scale: enrichment must be fast (sub-10 ms), reliable (never drop a log), and cost-effective (never overload the metadata sources). Today we address all three with caching strategies, circuit breaker patterns, and degradation modes that maintain service quality even when enrichment sources fail.
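The circuit breaker idea can be sketched in a few lines of plain Java. This is a simplified illustration under stated assumptions, not the implementation used in this series: `SimpleCircuitBreaker` is a hypothetical class, the threshold and open window are arbitrary parameters, and the clock is injected only to make the behavior easy to test. A production Spring Boot service would more likely rely on an established library such as Resilience4j.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Hypothetical, simplified circuit breaker with a fallback path. */
class SimpleCircuitBreaker {
    private final int failureThreshold;   // consecutive failures before opening
    private final long openMillis;        // how long to skip the primary once open
    private final LongSupplier clock;     // injected time source, in milliseconds
    private int consecutiveFailures = 0;
    private long openedAt = -1;           // -1 means the breaker is closed

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** True while the open window is still running. */
    synchronized boolean isOpen() {
        return openedAt >= 0 && clock.getAsLong() - openedAt < openMillis;
    }

    private synchronized void onSuccess() {
        consecutiveFailures = 0;
        openedAt = -1;
    }

    private synchronized void onFailure() {
        consecutiveFailures++;
        if (consecutiveFailures >= failureThreshold) {
            openedAt = clock.getAsLong(); // open, or re-open after a failed trial call
        }
    }

    /** Runs the primary lookup unless the breaker is open; otherwise degrades to the fallback. */
    <T> T call(Supplier<T> primary, Supplier<T> fallback) {
        if (isOpen()) {
            return fallback.get(); // protect the failing source, keep the log moving
        }
        try {
            T result = primary.get(); // after the window elapses this acts as a trial call
            onSuccess();
            return result;
        } catch (RuntimeException ex) {
            onFailure();
            return fallback.get();
        }
    }
}
```

In an enrichment pipeline the primary supplier would be the metadata lookup and the fallback would return the event unenriched or with cached values, so a failing source slows nothing down and no log is dropped.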