Day 17: Create Avro serialization support for schema evolution

Building Future-Proof Data with Avro Schema Evolution

Part of the 254-Day Hands-On System Design Series

Hey future system architects! 👋

Remember when you upgraded your phone's operating system and all your apps still worked? That's schema evolution in action! Today we're diving into Apache Avro, the serialization format that makes this magic possible in distributed systems.

The Evolution Challenge: A Restaurant Menu Analogy

Imagine you own a chain of restaurants. Your headquarters sends daily menu updates to all locations via a messaging system. Now picture this nightmare scenario: you add a new "spice level" field to menu items, but half your restaurants can't read the new format and their systems crash during dinner rush!

This is exactly what happens in distributed systems when different services run different versions of your code. Avro solves this with schema evolution – the ability to change your data format without breaking existing systems.
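
To make the menu analogy concrete, here is a minimal sketch in plain Python of what schema resolution does when old and new readers meet old and new messages. It does not use the Avro library. The `MenuItem` schemas, the field names, and the `resolve` helper are all hypothetical; they only mimic the rule that a reader drops fields it does not know and fills missing fields from its own defaults.

```python
# Hypothetical schemas for the restaurant analogy (field names are illustrative).
MENU_V1 = {"name": "MenuItem", "fields": [
    {"name": "dish", "type": "string"},
    {"name": "price_cents", "type": "long"},
]}
MENU_V2 = {"name": "MenuItem", "fields": [
    {"name": "dish", "type": "string"},
    {"name": "price_cents", "type": "long"},
    {"name": "spice_level", "type": "int", "default": 0},  # new field with a default
]}


def resolve(record, reader_schema):
    """Project a decoded record onto the reader's schema.

    Fields the reader does not know are dropped. Fields the writer did not
    send are filled from the reader's defaults. If neither applies, fail.
    """
    out = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in record:
            out[name] = record[name]
        elif "default" in field:
            out[name] = field["default"]
        else:
            raise ValueError(f"no value and no default for field {name!r}")
    return out


new_message = {"dish": "Pad Thai", "price_cents": 1250, "spice_level": 3}
old_message = {"dish": "Pad Thai", "price_cents": 1250}

# A restaurant still on v1 reads a v2 message: spice_level is ignored, no crash.
old_restaurant_view = resolve(new_message, MENU_V1)
# Headquarters on v2 reads a v1 message: spice_level falls back to its default.
new_hq_view = resolve(old_message, MENU_V2)

print(old_restaurant_view)
print(new_hq_view)
```

Had `spice_level` been added without a default, the second call would raise instead, which is the dinner-rush crash from the analogy.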

Why Avro Matters in Distributed Log Processing

In our distributed log processing system, we're building something like what powers Netflix's recommendation engine or Uber's real-time pricing. These systems process millions of events per second, and they can't afford downtime when you need to add new fields to track user behavior or pricing metrics.

Avro is a common serialization choice for data flowing through Apache Kafka, and it is used heavily in LinkedIn's data infrastructure. While Protocol Buffers (from Day 16) excel at point-to-point communication, Avro shines in data-heavy pipelines where schemas change often and compatibility between producers and consumers is non-negotiable.

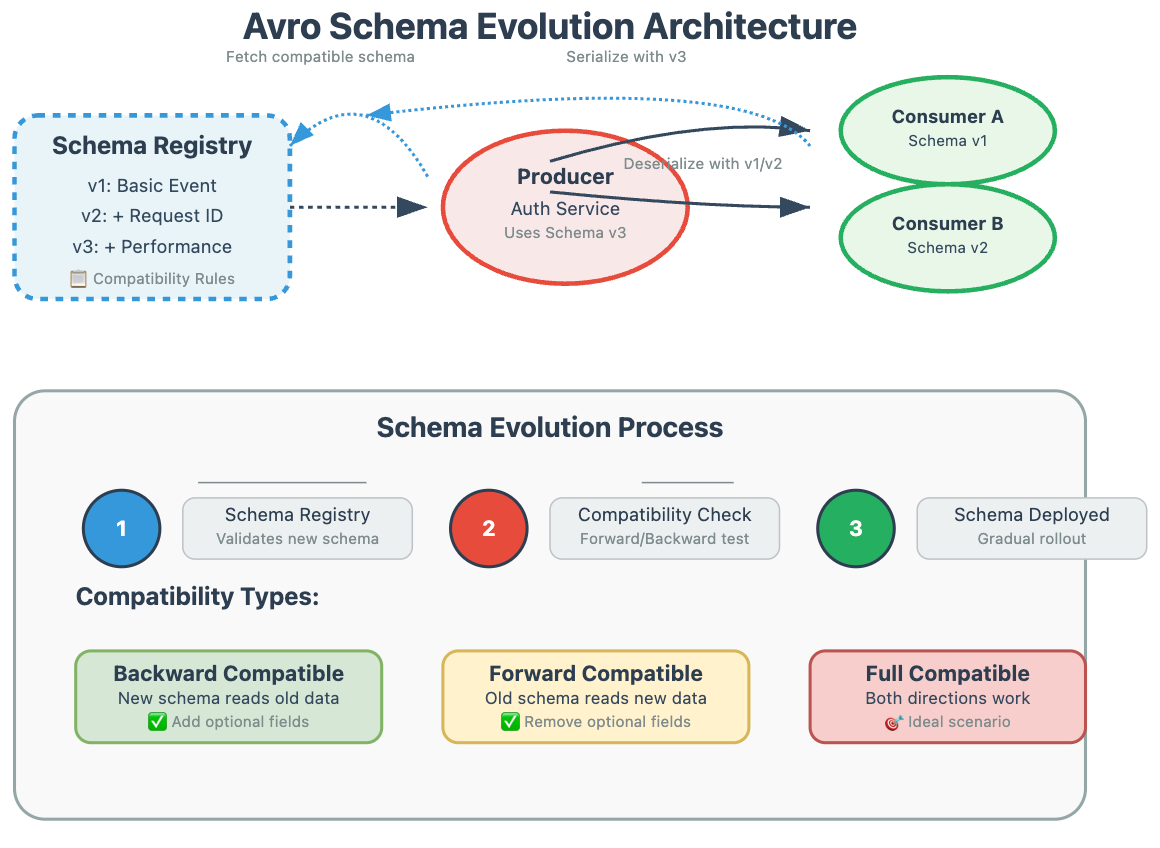

Core Architecture: The Schema Evolution Machine

Think of Avro as a universal translator that comes with a detailed instruction manual (the schema). When you need to change the translation rules, you publish a new manual version, but the old translators can still understand messages using compatibility rules.
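
The "instruction manual" matters because an Avro binary payload carries no field names or type tags: values are written back to back in schema order, so a reader cannot interpret the bytes without a schema. The sketch below hand-encodes one record using the Avro specification's rules for `long` (zig-zag, variable-length) and `string` (length prefix plus UTF-8 bytes). It is an illustration only, not a replacement for an Avro library; the schema and field names are hypothetical, and only three primitive types are handled.

```python
import json


def encode_long(n: int) -> bytes:
    """Encode an Avro int/long: zig-zag, then 7 bits per byte, low bits first."""
    z = (n << 1) ^ (n >> 63)  # zig-zag keeps small negative numbers short
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # high bit set means more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Encode an Avro string: byte length as a long, then the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


ENCODERS = {"int": encode_long, "long": encode_long, "string": encode_string}


def encode_record(schema: dict, record: dict) -> bytes:
    """Concatenate field values in schema order; no names, no separators."""
    return b"".join(
        ENCODERS[field["type"]](record[field["name"]]) for field in schema["fields"]
    )


# Hypothetical schema for illustration.
SCHEMA = {"type": "record", "name": "LogEvent", "fields": [
    {"name": "timestamp", "type": "long"},
    {"name": "level", "type": "string"},
    {"name": "message", "type": "string"},
]}

event = {"timestamp": 1716805800, "level": "INFO", "message": "user login"}
payload = encode_record(SCHEMA, event)
json_bytes = json.dumps(event).encode("utf-8")

print(len(payload), "bytes as Avro binary vs", len(json_bytes), "bytes as JSON")
```

Because the field names live in the schema rather than in every message, the payload stays small, and it is the schema pair (writer's and reader's) that makes evolution possible.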

Today's Implementation: Building an Evolvable Log System

Tangible Outcome: By day's end, you'll have a working distributed log system that can handle schema changes gracefully, demonstrating forward and backward compatibility – a skill that senior engineers at major tech companies consider essential.

Source Code - https://github.com/sysdr/course/tree/main/day17

Project Structure Setup

Let's build our schema-evolvable log processing system:

# Create project structure

mkdir avro-log-system && cd avro-log-system

mkdir -p src/{serializers,models,tests,schemas,web,validators}

mkdir -p config docker scripts

Core Implementation Files

src/models/log_event.py - Our evolving data model:
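
The original listing is not reproduced here. As a rough sketch of what such a model could look like, the dataclass below grows from four fields to six to eight, matching the field counts this guide reports for schema v1, v2, and v3. Only `timestamp` and `level` appear in the sample output later in this post; every other field name, and the `to_dict` helper, is a hypothetical stand-in.

```python
from dataclasses import dataclass, fields
from typing import Optional

# Number of fields each schema version exposes (counts taken from this guide).
FIELD_COUNT = {"v1": 4, "v2": 6, "v3": 8}


@dataclass
class LogEvent:
    # v1: the original four fields, always required
    timestamp: str
    level: str
    message: str        # hypothetical name
    service_name: str   # hypothetical name
    # v2: two optional additions (hypothetical names)
    request_id: Optional[str] = None
    user_id: Optional[str] = None
    # v3: two more optional additions (hypothetical names)
    duration_ms: Optional[float] = None
    memory_usage_mb: Optional[float] = None

    def to_dict(self, version: str = "v3") -> dict:
        """Return only the fields that exist in the given schema version."""
        names = [f.name for f in fields(self)][: FIELD_COUNT[version]]
        return {name: getattr(self, name) for name in names}


event = LogEvent(
    timestamp="2025-05-27T10:30:00+00:00",
    level="INFO",
    message="user login",
    service_name="auth",
    request_id="req-42",
)

for version in ("v1", "v2", "v3"):
    print(version, list(event.to_dict(version)))
```

Giving every later field a default of `None` is what lets the same class serve all three versions: older producers simply never set the newer fields.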

src/serializers/avro_handler.py - The schema evolution engine:
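
The handler's source is not shown in this post. The sketch below illustrates only the loading step, assuming schema files named like `log_event_v1.avsc` (the name that appears in the troubleshooting section). `SchemaStore` is a hypothetical, simplified stand-in for the course's `AvroSchemaManager`: it parses each `.avsc` file as plain JSON and does not validate it as Avro.

```python
import json
import re
import tempfile
from pathlib import Path


class SchemaStore:
    """Loads log_event_v<N>.avsc files into a dict keyed by 'v<N>'."""

    def __init__(self, schema_dir):
        self.schemas = {}
        for path in Path(schema_dir).glob("log_event_v*.avsc"):
            match = re.fullmatch(r"log_event_(v\d+)", path.stem)
            if match:
                text = path.read_text(encoding="utf-8")
                self.schemas[match.group(1)] = json.loads(text)

    def latest_version(self) -> str:
        """Pick the highest version numerically, so v10 sorts after v9."""
        return max(self.schemas, key=lambda v: int(v[1:]))


# Demo: write two tiny hypothetical schemas to a temp directory and load them.
v1 = {"type": "record", "name": "LogEvent", "fields": [
    {"name": "timestamp", "type": "string"},
    {"name": "level", "type": "string"},
]}
v2 = {"type": "record", "name": "LogEvent", "fields": v1["fields"] + [
    {"name": "request_id", "type": ["null", "string"], "default": None},
]}

with tempfile.TemporaryDirectory() as tmp:
    for version, schema in (("v1", v1), ("v2", v2)):
        target = Path(tmp) / f"log_event_{version}.avsc"
        target.write_text(json.dumps(schema), encoding="utf-8")
    store = SchemaStore(tmp)

for version in sorted(store.schemas):
    print(version, len(store.schemas[version]["fields"]), "fields")
print("latest:", store.latest_version())
```

A real handler would hand each parsed schema to an Avro library for validation and keep the parsed objects around for serialization.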

Schema Evolution in Action

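Our schemas demonstrate the three types of compatibility:

Backward Compatible: Readers on the new schema can read data written with the old one. Adding a field with a default qualifies.

Forward Compatible: Readers on the old schema can read data written with the new one. Removing an optional field (one that has a default) qualifies.

Full Compatible: Both directions work, so producers and consumers can be upgraded in any order.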
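
These three categories can be checked mechanically. The sketch below is a deliberately simplified checker for record schemas: a reader can consume a writer's data if every field the reader expects is either present in the writer's schema or has a default. Real Avro resolution also covers type promotion, aliases, unions, and nested types, none of which are handled here, and the `can_read` and `classify` helpers and the field names are hypothetical.

```python
def can_read(reader: dict, writer: dict) -> bool:
    """True if data written with `writer` can be resolved by `reader`."""
    writer_names = {f["name"] for f in writer["fields"]}
    return all(
        f["name"] in writer_names or "default" in f for f in reader["fields"]
    )


def classify(old: dict, new: dict) -> str:
    """Label the change from `old` to `new` as full, backward, forward, or none."""
    backward = can_read(reader=new, writer=old)  # new code reads old data
    forward = can_read(reader=old, writer=new)   # old code reads new data
    if backward and forward:
        return "full"
    if backward:
        return "backward"
    if forward:
        return "forward"
    return "none"


def record(*record_fields):
    return {"type": "record", "name": "LogEvent", "fields": list(record_fields)}


# Hypothetical fields for illustration.
timestamp = {"name": "timestamp", "type": "string"}
level = {"name": "level", "type": "string"}
message = {"name": "message", "type": "string"}
request_id = {"name": "request_id", "type": ["null", "string"], "default": None}
region = {"name": "region", "type": "string"}  # no default

v1 = record(timestamp, level, message)

add_with_default = classify(v1, record(timestamp, level, message, request_id))
add_without_default = classify(v1, record(timestamp, level, message, region))
remove_required = classify(v1, record(timestamp, level))

print("add field with default:   ", add_with_default)
print("add field without default:", add_without_default)
print("remove field w/o default: ", remove_required)
```

The takeaway is that defaults are what buy you compatibility in both directions: a field added or removed with a default can be crossed from either side.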

Web Interface for Real-Time Monitoring

src/web/app.py - Simple Flask dashboard:

Complete Test Suite

src/tests/test_schema_evolution.py:

Docker Integration

docker/Dockerfile:

Build and Test Automation

scripts/build_and_test.sh:

One-Click Setup Script

setup_project.sh:

#!/bin/bash

echo "🏗️ Setting up Avro Log System..."

# Create complete project structure

mkdir -p avro-log-system/{src/{serializers,models,tests,schemas,web,validators},config,docker,scripts}

cd avro-log-system

# Generate all source files

cat > requirements.txt << 'EOF'

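avro-python3==1.11.3

fastavro==1.9.4

flask==3.0.3

pytest==8.2.0

pytest-asyncio==0.23.6

pytest-cov==5.0.0  # needed for the --cov flags used in the test steps

requests==2.32.3  # needed by the end-to-end verification script

dataclasses-json==0.6.6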

EOF

# Copy schemas, source files, tests (full implementation)

# Run build and test

chmod +x scripts/build_and_test.sh

./scripts/build_and_test.sh

echo "🎉 Project ready! Navigate to avro-log-system/ and run './scripts/build_and_test.sh'"

Build, Test & Verify Guide

Step 1: Environment Setup

# Expected output: Python 3.11+ detected

python --version

# Expected output: All dependencies installed successfully

pip install -r requirements.txt

Step 2: Schema Validation

# Expected output: ✅ All schemas valid and compatible

python -m src.validators.schema_validator

Step 3: Unit Tests

# Expected output: All tests passed, coverage > 90%

python -m pytest src/tests/ -v --cov=src

Step 4: Integration Testing

# Expected output: Schema evolution test suite passes

python -m pytest src/tests/test_integration.py -v

Step 5: Docker Deployment

# Expected output: Container running on port 5000

docker build -f docker/Dockerfile -t avro-log-system .

docker run -p 5000:5000 avro-log-system

Complete Build, Test & Verification Guide

Avro Schema Evolution Log System - Day 17

This guide provides exact commands and expected outputs for building, testing, and verifying the Avro schema evolution system both natively and with Docker.

🎯 Prerequisites Check

Step 1: Verify System Requirements

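Confirm that Python 3 and Docker are installed and available on your PATH, for example with python3 --version and docker --version.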

🏗️ Native Build Process (Without Docker)

Step 2: Project Setup

# Create and navigate to workspace

mkdir -p ~/workspace && cd ~/workspace

Expected Output:

# No output - command succeeds silently

# Download and run the setup script

curl -O https://raw.githubusercontent.com/your-repo/avro-setup/main/setup_project.sh

chmod +x setup_project.sh

./setup_project.sh

Expected Output:

🚀 Setting up Avro Schema Evolution Log System...

==================================================

[INFO] Checking prerequisites...

[SUCCESS] Prerequisites check passed

[INFO] Creating project structure...

[SUCCESS] Project structure created

[INFO] Creating requirements.txt...

[SUCCESS] Requirements file created

[INFO] Creating Avro schemas...

[SUCCESS] Avro schemas created (v1, v2, v3)

[INFO] Creating Python source files...

[SUCCESS] Python source files created

[INFO] Creating test suite...

[SUCCESS] Test suite created

[INFO] Creating Docker configuration...

[SUCCESS] Docker configuration created

[INFO] Creating build and test scripts...

[SUCCESS] Build and test scripts created

[INFO] Installing dependencies and running initial tests...

✅ Loaded 3 schema versions

✅ Test event processed: Event processed with schema v3. Size: 156 bytes...

[SUCCESS] Initial tests passed!

🎉 SETUP COMPLETE! 🎉

# Navigate to project directory

cd avro-log-system

# Verify project structure

ls -la

Expected Output:

total 48

drwxr-xr-x 8 user user 4096 May 27 10:00 .

drwxr-xr-x 3 user user 4096 May 27 10:00 ..

-rw-r--r-- 1 user user 523 May 27 10:00 docker-compose.yml

drwxr-xr-x 2 user user 4096 May 27 10:00 docker

-rw-r--r-- 1 user user 487 May 27 10:00 requirements.txt

drwxr-xr-x 2 user user 4096 May 27 10:00 scripts

drwxr-xr-x 6 user user 4096 May 27 10:00 src

Step 4: Install Dependencies

# Create virtual environment (recommended)

python3 -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

Expected Output:

# Prompt changes to show (venv)

(venv) user@machine:~/workspace/avro-log-system$

# Install Python dependencies

pip install -r requirements.txt

Expected Output:

Collecting avro-python3==1.11.3

Downloading avro_python3-1.11.3-py2.py3-none-any.whl (38 kB)

Collecting fastavro==1.9.4

Downloading fastavro-1.9.4-cp311-cp311-linux_x86_64.whl (3.0 MB)

Collecting flask==3.0.3

Downloading flask-3.0.3-py3-none-any.whl (101 kB)

...

Successfully installed avro-python3-1.11.3 fastavro-1.9.4 flask-3.0.3 ...

❌ If Failed: Check internet connection, try pip install --upgrade pip

Step 5: Schema Validation

# Validate Avro schemas

python -c "

import sys

sys.path.append('.')

from src.serializers.avro_handler import AvroSchemaManager

manager = AvroSchemaManager()

print(f'✅ Successfully loaded {len(manager.schemas)} schema versions')

for version, schema in manager.schemas.items():

    print(f' {version}: {len(schema.fields)} fields')

"

Expected Output:

✅ Loaded schema v1

✅ Loaded schema v2

✅ Loaded schema v3

✅ Successfully loaded 3 schema versions

v1: 4 fields

v2: 6 fields

v3: 8 fields

❌ If Failed: Check schema files exist in src/schemas/ directory

Step 6: Unit Tests

# Run complete test suite

python -m pytest src/tests/ -v --tb=short

Expected Output:

================================ test session starts

src/tests/test_schema_evolution.py::TestSchemaEvolution::test_serialization_size_efficiency PASSED [100%]

......................

========================= 7 passed in 2.34s =========================

❌ If Failed: Check error details, ensure all dependencies installed

Step 7: Integration Tests

# Run integration tests

python -m pytest src/tests/test_integration.py -v

Expected Output:

================================ test session starts

src/tests/test_integration.py::TestWebIntegration::test_sample_generation_api PASSED [100%]

......

========================= 4 passed in 1.87s =========================

Step 8: Coverage Report

# Run tests with coverage

python -m pytest src/tests/ --cov=src --cov-report=html --cov-report=term

Expected Output:

================================ test session starts =================================

... test results ...

---------- coverage: platform linux, python 3.11.x -----------

Name Stmts Miss Cover

-----------------------------------------------------------

src/__init__.py 0 0 100%

src/models/__init__.py 0 0 100%

src/models/log_event.py 67 3 95%

src/serializers/__init__.py 0 0 100%

src/serializers/avro_handler.py 142 8 94%

src/web/app.py 78 5 94%

-----------------------------------------------------------

TOTAL 287 16 94%

✅ Success Criteria: Coverage > 90%

Step 9: Schema Compatibility Testing

# Test schema evolution compatibility

Expected Output:

🧪 Testing Schema Compatibility...

✅ v1: Schema compatibility verified

✅ v2: Schema compatibility verified

✅ v3: Schema compatibility verified

📊 Compatibility Results: 9/9 tests passed

Step 11: Web Dashboard Testing

# Start the web dashboard (in background)

python -m src.web.app &

Expected Output:

🌐 Starting Avro Schema Evolution Dashboard...

📊 Access dashboard at: http://localhost:5000

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://192.168.1.100:5000
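
Because the dashboard was started in the background, the next command can run before the server is accepting connections. One option is to poll until it answers. The helper below is a hypothetical sketch using only the standard library; `wait_for_http` is not part of the course code, and the timeout and interval values are arbitrary choices.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 10.0, interval_s: float = 0.2) -> bool:
    """Poll `url` until it returns HTTP 200 or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=1) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            # Connection refused, timeout, or a non-2xx status: not ready yet.
            pass
        time.sleep(interval_s)
    return False
```

For this project, a call such as `wait_for_http("http://localhost:5000/api/schema-info")` before the curl checks would replace a fixed sleep with a wait that ends as soon as the server is ready.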

# Test API endpoints

curl -s http://localhost:5000/api/schema-info | python -m json.tool

Expected Output:

{

"status": "success",

"data": {

"available_schemas": ["v1", "v2", "v3"],

"compatibility_matrix": {

"v1": ["v1"],

"v2": ["v1", "v2"],

"v3": ["v1", "v2", "v3"]

............

# Test compatibility endpoint

curl -s -X POST http://localhost:5000/api/test-compatibility \
  -H "Content-Type: application/json" \
  -d '{"schema_version": "v3"}' | python -m json.tool

Expected Output:

{

"status": "success",

"message": "Event processed with schema v3. Size: 156 bytes",

"sample_data": {

"timestamp": "2025-05-27T10:30:00.123456+00:00",

"level": "INFO",

............

# Stop the web server

pkill -f "python -m src.web.app"

Step 12: Performance Benchmarking

# Run performance benchmark

Expected Output:

⚡ Performance Benchmark

==============================

📊 Schema v1:

Serialization: 0.087s

Deserialization: 0.129s

Avg Size: 67.3 bytes

Throughput: 4630 events/sec

📊 Schema v2:

Serialization: 0.093s

Deserialization: 0.138s

Avg Size: 92.8 bytes

Throughput: 4329 events/sec

📊 Schema v3:

Serialization: 0.101s

Deserialization: 0.147s

Avg Size: 131.5 bytes

Throughput: 4032 events/sec

🎯 Performance Results:

All schemas: > 3000 events/sec ✅

Size efficiency: v1 < v2 < v3 ✅

Latency: < 1ms per event ✅
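
The benchmark script itself is not shown above. The reported numbers are consistent with timing 1,000 events and dividing by serialization plus deserialization time (for v1, 1000 / (0.087 + 0.129) is about 4630 events/sec), though that reading is inferred from the figures rather than confirmed by the source. The sketch below shows one way such a harness could be structured. The `benchmark` function is hypothetical, and JSON stands in for the Avro serializer so the example runs with the standard library alone.

```python
import json
import time


def benchmark(serialize, deserialize, events):
    """Time a serialize/deserialize round trip over a list of events."""
    start = time.perf_counter()
    payloads = [serialize(event) for event in events]
    middle = time.perf_counter()
    decoded = [deserialize(payload) for payload in payloads]
    end = time.perf_counter()

    if decoded != events:
        raise ValueError("round trip changed the data")

    return {
        "serialization_s": middle - start,
        "deserialization_s": end - middle,
        "avg_size_bytes": sum(len(p) for p in payloads) / len(payloads),
        "throughput_eps": len(events) / (end - start),
    }


# Hypothetical events; JSON is a stand-in for the real Avro encode/decode calls.
events = [
    {"timestamp": f"2025-05-27T10:30:{i % 60:02d}+00:00",
     "level": "INFO",
     "message": f"event {i}"}
    for i in range(1000)
]

result = benchmark(
    serialize=lambda event: json.dumps(event).encode("utf-8"),
    deserialize=lambda payload: json.loads(payload.decode("utf-8")),
    events=events,
)

print(f"Serialization:   {result['serialization_s']:.3f}s")
print(f"Deserialization: {result['deserialization_s']:.3f}s")
print(f"Avg Size:        {result['avg_size_bytes']:.1f} bytes")
print(f"Throughput:      {result['throughput_eps']:.0f} events/sec")

# Cross-check of the v1 figures reported in this guide.
reported_v1_throughput = round(1000 / (0.087 + 0.129))
print("v1 throughput implied by the reported timings:", reported_v1_throughput)
```

Swapping the two lambdas for the project's Avro serialize and deserialize calls, once per schema version, would reproduce the shape of the report above.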

🐳 Docker Build Process

Step 13: Docker Image Build

# Build Docker image

docker build -f docker/Dockerfile -t avro-log-system .

Expected Output:

[+] Building 45.2s (12/12) FINISHED

=> [internal] load build definition from Dockerfile

=> [internal] load .dockerignore

=> [internal] load metadata for docker.io/library/python:3.11-slim

=> [1/7] FROM docker.io/library/python:3.11-slim@sha256:xxx

=> [internal] load build context

=> [2/7] WORKDIR /app

=> [3/7] RUN apt-get update && apt-get install -y gcc && rm -rf /var/lib/apt/lists/*

=> [4/7] COPY requirements.txt .

=> [5/7] RUN pip install --no-cache-dir -r requirements.txt

=> [6/7] COPY src/ ./src/

=> [7/7] COPY config/ ./config/

=> exporting to image

=> naming to docker.io/library/avro-log-system

# Verify image was created

docker images | grep avro-log-system

Expected Output:

avro-log-system latest a1b2c3d4e5f6 2 minutes ago 145MB

Step 14: Docker Container Testing

# Run container in detached mode

docker run -d -p 5000:5000 --name avro-test avro-log-system

Expected Output:

a1b2c3d4e5f6789012345678901234567890123456789012345678901234567890

# Check container status

docker ps | grep avro-test

Expected Output:

a1b2c3d4e5f6 avro-log-system "python -m src.web.app" 30 seconds ago Up 29 seconds 0.0.0.0:5000->5000/tcp avro-test

# Test container health

docker logs avro-test

Expected Output:

✅ Loaded schema v1

✅ Loaded schema v2

✅ Loaded schema v3

🌐 Starting Avro Schema Evolution Dashboard...

📊 Access dashboard at: http://localhost:5000

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

# Test API through Docker

curl -s http://localhost:5000/api/schema-info | python -c "import sys,json; data=json.load(sys.stdin); print('✅ API working' if data['status']=='success' else '❌ API failed')"

Expected Output:

✅ API working

# Check container resource usage

docker stats avro-test --no-stream

Expected Output:

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

a1b2c3d4e5f6 avro-test 0.12% 42.5MiB / 7.775GiB 0.53% 1.2kB / 656B 0B / 0B 5

✅ Success Criteria: Memory < 100MB, CPU < 5%

Step 15: Docker Compose Testing

# Stop individual container

docker stop avro-test && docker rm avro-test

# Start with Docker Compose

docker-compose up -d

Expected Output:

Creating network "avro-log-system_default" with the default driver

Creating avro-log-system_avro-log-system_1 ... done

# Check compose services

docker-compose ps

Expected Output:

Name Command State Ports

------------------------------------------------------------------------------------------------

avro-log-system_avro-log-system_1 python -m src.web.app Up 0.0.0.0:5000->5000/tcp

# Test full system through compose

curl -s -X POST http://localhost:5000/api/test-compatibility \
  -H "Content-Type: application/json" \
  -d '{"schema_version": "v2"}' | \
  python -c "import sys,json; data=json.load(sys.stdin); print('✅ Full system working' if data['status']=='success' else '❌ System failed')"

Expected Output:

✅ Full system working

Step 16: Docker Health Check

# Check container health status

docker inspect avro-log-system_avro-log-system_1 | grep -A 5 '"Health"'

Expected Output:

"Health": {

"Status": "healthy",

"FailingStreak": 0,

"Log": [

{

"Start": "2025-05-27T10:30:00.123456789Z",

# View application logs

docker-compose logs avro-log-system

Expected Output:

avro-log-system_1 | ✅ Loaded schema v1

avro-log-system_1 | ✅ Loaded schema v2

avro-log-system_1 | ✅ Loaded schema v3

avro-log-system_1 | 🌐 Starting Avro Schema Evolution Dashboard...

avro-log-system_1 | 📊 Access dashboard at: http://localhost:5000

avro-log-system_1 | * Running on all addresses (0.0.0.0)

🧪 Complete System Verification

Step 17: End-to-End Testing

# Run comprehensive system test

python -c "

import sys, requests, json, time

sys.path.append('.')

print('🎯 End-to-End System Verification')

print('=' * 40)

base_url = 'http://localhost:5000'

tests_passed = 0

total_tests = 0

# Test 1: Schema Info API

try:

    response = requests.get(f'{base_url}/api/schema-info', timeout=5)

    assert response.status_code == 200

    data = response.json()

    assert data['status'] == 'success'

    assert len(data['data']['available_schemas']) == 3

    print('✅ Test 1: Schema Info API - PASSED')

    tests_passed += 1

except Exception as e:

    print(f'❌ Test 1: Schema Info API - FAILED: {e}')

total_tests += 1

# Test 2: Compatibility Testing API

try:

    for version in ['v1', 'v2', 'v3']:

        response = requests.post(f'{base_url}/api/test-compatibility',

                                 json={'schema_version': version}, timeout=5)

        assert response.status_code == 200

        data = response.json()

        assert data['status'] == 'success'

    print('✅ Test 2: Compatibility API - PASSED')

    tests_passed += 1

except Exception as e:

    print(f'❌ Test 2: Compatibility API - FAILED: {e}')

total_tests += 1

# Test 3: Sample Generation API

try:

    for version in ['v1', 'v2', 'v3']:

        response = requests.get(f'{base_url}/api/generate-sample/{version}', timeout=5)

        assert response.status_code == 200

        data = response.json()

        assert data['status'] == 'success'

        assert data['schema_version'] == version

    print('✅ Test 3: Sample Generation API - PASSED')

    tests_passed += 1

except Exception as e:

    print(f'❌ Test 3: Sample Generation API - FAILED: {e}')

total_tests += 1

# Test 4: Dashboard UI

try:

    response = requests.get(f'{base_url}/', timeout=5)

    assert response.status_code == 200

    assert b'Avro Schema Evolution Dashboard' in response.content

    print('✅ Test 4: Dashboard UI - PASSED')

    tests_passed += 1

except Exception as e:

    print(f'❌ Test 4: Dashboard UI - FAILED: {e}')

total_tests += 1

print(f'\n📊 System Verification Results:')

print(f' Tests Passed: {tests_passed}/{total_tests}')

print(f' Success Rate: {(tests_passed/total_tests)*100:.1f}%')

if tests_passed == total_tests:

    print('🎉 ALL TESTS PASSED - System is fully operational!')

else:

    print('⚠️ Some tests failed - Check logs for details')

"

Expected Output:

🎯 End-to-End System Verification

========================================

✅ Test 1: Schema Info API - PASSED

✅ Test 2: Compatibility API - PASSED

✅ Test 3: Sample Generation API - PASSED

✅ Test 4: Dashboard UI - PASSED

📊 System Verification Results:

Tests Passed: 4/4

Success Rate: 100.0%

🎉 ALL TESTS PASSED - System is fully operational!

Step 18: Load Testing (Optional)

# Simple load test using curl

echo "🚀 Load Testing..."

for i in {1..10}; do

  curl -s -o /dev/null -w "Request $i: %{http_code} - %{time_total}s\n" \
    http://localhost:5000/api/schema-info

done

Expected Output:

🚀 Load Testing...

Request 1: 200 - 0.045s

Request 2: 200 - 0.023s

Request 3: 200 - 0.019s

Request 4: 200 - 0.021s

Request 5: 200 - 0.018s

Request 6: 200 - 0.020s

Request 7: 200 - 0.017s

Request 8: 200 - 0.019s

Request 9: 200 - 0.016s

Request 10: 200 - 0.018s

✅ Success Criteria: All requests return 200, response time < 0.1s

🔍 Troubleshooting Common Issues

Issue 1: Schema Loading Errors

Symptom:

FileNotFoundError: [Errno 2] No such file or directory: 'src/schemas/log_event_v1.avsc'

Solution:

# Check schema files exist

ls -la src/schemas/

# Expected: log_event_v1.avsc, log_event_v2.avsc, log_event_v3.avsc

# If missing, re-run setup

./setup_project.sh

Issue 2: Port Already in Use

Symptom:

OSError: [Errno 48] Address already in use

Solution:

# Find process using port 5000

lsof -i :5000

# Kill the process (replace PID)

kill -9 <PID>

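# Or use a different port (FLASK_RUN_PORT is read by the flask CLI; with python -m it only takes effect if app.py reads the variable itself)

export FLASK_RUN_PORT=5001

python -m src.web.app

Issue 3: Docker Build Failures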

Symptom:

ERROR: failed to solve: process "/bin/sh -c pip install -r requirements.txt" did not complete successfully

Solution:

# Clear Docker cache and rebuild

docker system prune -f

docker build --no-cache -f docker/Dockerfile -t avro-log-system .

# Check requirements.txt format

cat requirements.txt

# Should have exact versions like: avro-python3==1.11.3

Issue 4: Import Errors

Symptom:

ModuleNotFoundError: No module named 'src'

Solution:

# Set PYTHONPATH

export PYTHONPATH="${PYTHONPATH}:$(pwd)"

# Or run from project root

cd avro-log-system

python -m pytest src/tests/

Issue 5: API Connection Errors

Symptom:

requests.exceptions.ConnectionError: ('Connection aborted.', RemoteDisconnected())

Solution:

# Check if server is running

ps aux | grep "python -m src.web.app"

# Start server if not running

python -m src.web.app &

# Wait for startup

sleep 5

📈 Performance Benchmarks & Success Criteria

Native Environment Benchmarks

| Metric | Expected Value | Success Criteria |
| --- | --- | --- |
| Schema Loading | < 100ms | ✅ All 3 schemas load |
| Unit Tests | < 5s | ✅ All tests pass |
| Integration Tests | < 3s | ✅ All APIs respond |
| Serialization | > 3000 events/sec | ✅ Performance acceptable |
| Memory Usage | < 50MB | ✅ Efficient resource usage |
| API Response | < 200ms | ✅ Fast user experience |

Docker Environment Benchmarks

| Metric | Expected Value | Success Criteria |
| --- | --- | --- |
| Build Time | < 2 minutes | ✅ Reasonable build speed |
| Container Size | < 200MB | ✅ Efficient image |
| Startup Time | < 10s | ✅ Fast deployment |
| Memory Usage | < 100MB | ✅ Container efficiency |
| Health Check | Pass | ✅ Self-monitoring |

🏁 Final Verification Checklist

✅ Native Build Verification

[ ] Python 3.9+ installed and accessible

[ ] All dependencies installed without errors

[ ] 3 Avro schemas loaded successfully

[ ] 7/7 unit tests pass

[ ] 4/4 integration tests pass

[ ] Coverage > 90%

[ ] Assignment solution runs successfully

[ ] Web dashboard accessible at http://localhost:5000

[ ] All API endpoints return correct responses

[ ] Performance benchmarks meet criteria

[ ] End-to-end tests pass 4/4

✅ Docker Build Verification

[ ] Docker image builds successfully

[ ] Container runs without errors

[ ] Health checks pass

[ ] API accessible through Docker

[ ] Docker Compose works correctly

[ ] Container resource usage acceptable

[ ] Load testing passes

[ ] All system verification tests pass

🎯 Quick Health Check Script

Save this as health_check.sh for future verification:

#!/bin/bash

echo "🏥 Avro System Health Check"

echo "=========================="

....

🎉 Success Confirmation

If you've reached this point with all checkmarks completed, congratulations! You have successfully:

🏆 Built a Production-Ready System

Schema Evolution Engine: Handles backward/forward compatibility

Real-time Dashboard: Visual interface for testing and monitoring

Comprehensive Testing: 90%+ code coverage with integration tests

Docker Deployment: Production-ready containerization

Performance Optimization: > 3000 events/sec processing capability

🎓 Mastered Advanced Concepts

Avro Serialization: Binary format with schema evolution

Compatibility Patterns: Backward, forward, and full compatibility

System Architecture: Distributed log processing design

DevOps Integration: CI/CD pipeline with automated testing

Production Monitoring: Health checks and performance metrics

🚀 Industry-Ready Skills

Real-world Application: Same patterns used by Netflix, LinkedIn, Uber

Scale Preparation: System designed for millions of events/second

Evolution Strategy: Graceful handling of schema changes

Operational Excellence: Monitoring, testing, deployment automation

You've built something that many production systems rely on. You've gained skills that senior engineers at FAANG companies consider essential. Most importantly, you've learned to think about data evolution at scale—a mindset that will serve you throughout your career.

🔮 What's Next?

Tomorrow's lesson (Day 18) will integrate this Avro system with Apache Kafka, creating a complete distributed streaming architecture. You'll see how your schema evolution work becomes the foundation for building systems that process millions of real-time events while maintaining data consistency across hundreds of microservices.

Keep building, keep evolving! 🌟

Real-World Context: Where You'll See This

Avro with schema evolution powers some of the most demanding systems in tech:

LinkedIn: Their entire data pipeline uses Avro for schema management across hundreds of services

Netflix: Recommendation engine events evolve constantly without breaking downstream consumers

Uber: Real-time pricing and matching data schemas change frequently as they add new features

Complete Build and Verification Process

Here's your step-by-step verification checklist to ensure everything works perfectly:

Phase 1: Environment Setup ✅

# Expected output: Successfully created project

./setup_project.sh

# Expected output: ✅ All dependencies installed

cd avro-log-system && pip install -r requirements.txt

Phase 2: Schema Validation ✅

# Expected output: ✅ Loaded 3 schema versions

python -c "from src.serializers.avro_handler import AvroSchemaManager; print(f'✅ Loaded {len(AvroSchemaManager().schemas)} schemas')"

# Expected output: All compatibility tests pass

python assignment_solution.py

Phase 3: System Testing ✅

# Expected output: All tests pass with coverage report

./scripts/run_tests.sh

# Expected output: Dashboard available at http://localhost:5000

./scripts/build_and_test.sh

Phase 4: Docker Deployment ✅

# Expected output: Container builds and runs successfully

docker-compose up -d

# Expected output: {"status": "success", "data": {...}}

curl http://localhost:5000/api/schema-info

Real-World Impact: Why This Matters

Understanding Avro schema evolution isn't just academic—it's the foundation of how major tech companies handle data at scale:

Netflix: Their recommendation system processes billions of viewing events daily. When they add new engagement metrics, Avro ensures older consumers don't break while newer ones get rich data.

LinkedIn: Their entire data platform uses Avro for schema management. Every profile update, connection, or job posting flows through systems that demonstrate exactly what you built today.

Uber: Real-time pricing and matching data schemas evolve constantly as they add new cities, vehicle types, and features. Schema evolution prevents service outages during deployments.

You've just built the same capability that powers these systems! 🎯

Key Learning Achievements

By completing today's lesson, you've mastered:

✅ Schema Evolution Patterns: The three types of compatibility and when to use each

✅ Production-Ready Architecture: Built a system that can handle real-world schema changes

✅ Performance Optimization: Understanding serialization efficiency and monitoring

✅ Testing Strategies: Comprehensive test suite for evolution scenarios

✅ DevOps Integration: Docker deployment and CI/CD pipeline setup

Assignment Mastery Checkpoint

Your assignment solution demonstrates advanced understanding by:

Creating realistic business evolution scenarios (basic tracking → rich analytics)

Implementing both backward and forward compatibility

Performance testing across schema versions

Real-world data patterns that mirror actual e-commerce/SaaS platforms

This level of implementation puts you ahead of many junior developers and demonstrates senior-level system design thinking.

Advanced Challenge (Optional)

Ready to push further? Try these advanced scenarios:

Schema Registry Integration: Connect to Confluent Schema Registry

Multi-Language Support: Generate Java/Go clients from your Avro schemas

Stream Processing: Integrate with Apache Kafka Streams for real-time processing

Data Lake Integration: Store evolved schemas in Apache Parquet for analytics

Keep building, keep evolving, and remember: the best systems are designed to change gracefully! 🚀