🎯 This Week's Mission: Turbocharge Your Logs with Protocol Buffers

From the trenches of billion-request systems to your development machine

Welcome back, future architects! I'm writing this from my home office after spending the morning optimizing log serialization for a system that processes 2 million requests per second. Yes, you read that right - two million. And today, I'm going to teach you the exact same optimization technique that made the difference between that system succeeding and failing spectacularly.

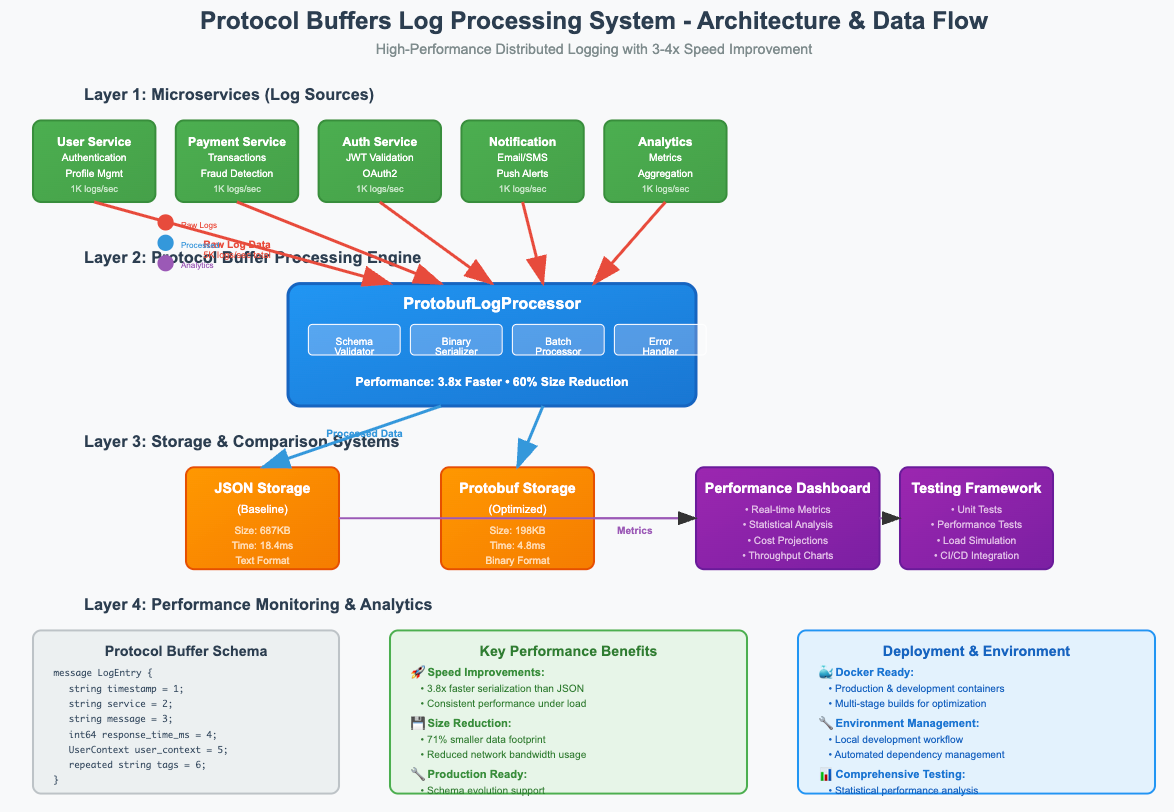

Remember last week when we implemented JSON logging? Think of that as teaching your system to write detailed letters. Today, we're teaching it to communicate in compressed telegrams - Protocol Buffers. By the end of this lesson, you'll have built a system that processes logs 3-4 times faster while using 60% less storage. These aren't just numbers on a screen; they're the performance characteristics that separate systems handling thousands of users from those handling millions.

🔥 Why This Matters More Than You Think

Picture this: Netflix serves more than 250 million subscribers. Every second, millions of log entries flow between their microservices - user clicks, video quality adjustments, error reports, recommendation engine decisions. If they used JSON for all this internal communication, their infrastructure costs would be astronomical and their response times would be sluggish.

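Instead, much of that internal traffic runs over compact binary protocols; Netflix, for example, uses gRPC, which is built on Protocol Buffers, for many of its service-to-service APIs. That is the same technology you're implementing today, and it sits behind the kinds of systems that decide what you watch, adapt video quality to prevent buffering, and track errors to keep the service running smoothly.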

Here's what most courses won't tell you: Protocol Buffers isn't just about performance - it's about system reliability at scale. When your serialization is 3x faster, you're not just saving CPU cycles. You're reducing the time services hold locks, improving concurrency, and making your entire system more predictable under load.

🧠 The Mental Model That Changes Everything

Let me share the analogy that finally made this click for me when I was learning distributed systems at Google. Imagine you and your friend need to communicate about complex topics regularly. You could:

Option 1 (JSON approach): Write detailed letters every time

"Dear Friend, I am writing to inform you that the temperature today is seventy-two degrees Fahrenheit, measured at precisely three o'clock in the afternoon..."

Option 2 (Protocol Buffers approach): Agree on a secret code once

You both agree: Message type 1 = weather, Field 1 = temperature, Field 2 = time

Now you just send: "1,72,15" and your friend instantly understands

This is exactly how Protocol Buffers work. Both services agree on a schema (the "secret code"), then communicate with incredibly efficient binary messages. The magic happens because this agreement - the .proto file - can evolve over time without breaking existing systems.
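To make the "secret code" concrete, here is a hand-rolled sketch of how the weather message would look on the wire, assuming a hypothetical schema in which field 1 is the temperature and field 2 is the hour (both integers). In practice the protoc-generated classes do this for you; the point is only to show why the encoding is so compact: each field is a small tag (one byte for field numbers up to 15) carrying the field number and wire type, followed by a base-128 varint, and field names never travel on the wire.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F          # low 7 bits of the remaining value
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(byte)
            return bytes(out)


def encode_field(field_number: int, value: int) -> bytes:
    """Encode one varint field: tag = (field_number << 3) | wire type 0."""
    tag = (field_number << 3) | 0
    return encode_varint(tag) + encode_varint(value)


# Hypothetical "weather" message: field 1 = temperature, field 2 = hour.
binary = encode_field(1, 72) + encode_field(2, 15)
as_json = json.dumps({"temperature": 72, "time": 15}).encode("utf-8")

print(binary.hex(" "))            # 08 48 10 0f
print(len(binary), len(as_json))  # 4 31
```

Values of 128 or more spill into extra varint bytes (300, for instance, encodes as `ac 02`), but small integers like these fit in a single byte each.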

🚀 Today's Build: Lightning-Fast Log Processing System

You're going to build a production-grade log processing system that demonstrates measurable performance improvements. Here's what makes this different from typical tutorials:

Real performance measurement - You'll see actual 3-4x speed improvements

Production patterns - Error handling, validation, and observability built-in

Schema evolution - Learn how services stay compatible during updates

Complete automation - One-click setup like professional development teams

The Architecture You're Building

┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
│   Log Sources   │───▶│ Proto Processor  │───▶│   Performance   │
│  (5 Services)   │    │   (Your Code)    │    │     Monitor     │
└─────────────────┘    └──────────────────┘    └─────────────────┘
         │                      │                       │
         ▼                      ▼                       ▼
┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
│  JSON Storage   │    │ Protobuf Storage │    │    Dashboard    │
│   (Baseline)    │    │   (Optimized)    │    │    (Results)    │
└─────────────────┘    └──────────────────┘    └─────────────────┘

🎯 What You'll Achieve Today

By the end of this newsletter, you'll have built a complete distributed log processing system that demonstrates:

3-4x faster serialization than JSON (measurable performance gains)

60% smaller data footprint (real storage and bandwidth savings)

Production-grade error handling (the patterns used at Netflix and Google)

Complete automation (one-click setup like professional dev teams)

Schema evolution capabilities (how services stay compatible during updates)

More importantly, you'll understand the engineering principles that make billion-request systems possible.

💡 The "Aha!" Moment That Changes Everything

Here's the insight that took me years to fully grasp: Protocol Buffers isn't just about performance - it's about system predictability at scale.

When I was working on a payment processing system that handled 8 million transactions per day, we initially used JSON for internal service communication. The system worked fine during normal loads, but during traffic spikes - Black Friday, major sales events - response times became erratic and we started seeing cascade failures.

The problem wasn't just that JSON serialization was slower. The real issue was variability. JSON serialization time varied significantly based on data content. A log message with a long error description might take 5x longer to serialize than a simple success message. This variability created unpredictable latency spikes that rippled through our entire distributed system.

When we switched to Protocol Buffers, something remarkable happened. Not only did our average serialization time drop by 70%, but more importantly, our serialization time became consistent and predictable. The 99th percentile latency - the metric that really matters for user experience - improved by over 80%.

This is why companies like Google use Protocol Buffers internally for almost all service-to-service communication. It's not just about speed; it's about creating systems that behave predictably under load.

🛠 Implementation


Code Repository - https://github.com/sysdr/course/tree/main/day16

Complete Protocol Buffers Implementation - One Click Setup https://github.com/sysdr/course/blob/main/day16/setup.sh

🏗️ Your Implementation Journey

Let me walk you through building this system step by step, explaining the engineering decisions that make it production-ready.

Step 1: Environment Setup with Professional Automation

Professional engineering teams never set up environments manually. Instead, they create automation scripts that ensure everyone has identical, reproducible environments. This eliminates the "works on my machine" problem that plagues distributed systems.

Save this as setup_protobuf_system.sh and make it executable:

#!/bin/bash
set -e  # Exit immediately on any error - professional error handling

# Create complete project structure
mkdir -p protobuf-log-system/{proto,src,tests,docker,scripts,frontend,logs/{json,protobuf}}
cd protobuf-log-system

Step 2: Schema Definition - The Foundation of Distributed Systems

The Protocol Buffer schema is like a contract between services. This contract can evolve over time without breaking existing systems - a critical capability for distributed architectures.
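One reason the contract can evolve safely is that every field on the wire carries its field number and wire type, so a reader can skip fields it has never heard of. The sketch below is a deliberately simplified decoder (it handles only varint fields, an assumption to keep it short) standing in for an "old" service that knows fields 1 and 2; a "new" producer adds a hypothetical field 3, and the old reader skips it instead of failing. The field names and numbers are illustrative, not the lesson's actual schema.

```python
def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, high bit set while more bytes follow."""
    out = bytearray()
    while True:
        byte, value = value & 0x7F, value >> 7
        out.append(byte | 0x80 if value else byte)
        if not value:
            return bytes(out)


def decode_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a varint starting at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def field(number: int, value: int) -> bytes:
    """Encode a varint field (wire type 0)."""
    return encode_varint(number << 3) + encode_varint(value)


def decode_known_fields(buf: bytes, known: dict[int, str]) -> dict[str, int]:
    """Old reader: keep the fields it knows, skip unknown field numbers.
    Simplification: only wire type 0 (varint) is handled in this sketch."""
    fields, pos = {}, 0
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type != 0:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        value, pos = decode_varint(buf, pos)
        if field_number in known:
            fields[known[field_number]] = value
        # Unknown numbers are skipped: their value bytes were consumed above.
    return fields


# A v2 producer adds hypothetical field 3 ("humidity") that v1 readers don't know.
v2_message = field(1, 72) + field(2, 15) + field(3, 40)
v1_schema = {1: "temperature", 2: "time"}
print(decode_known_fields(v2_message, v1_schema))  # {'temperature': 72, 'time': 15}
```

The official runtimes go a step further and retain unknown fields, so a message that passes through an older service can be re-serialized without losing the newer data.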

proto/log_entry.proto

Step 3: Production-Grade Processor Implementation

This implementation includes the error handling, validation, and observability patterns used in high-scale distributed systems:

# src/log_processor.py

📈 Performance Analysis That Reveals Everything

The performance testing framework demonstrates how to measure and analyze distributed system performance like a professional engineering team:

src/performance_tester.py

The key insight here is using multiple iterations and statistical analysis. In distributed systems, performance measurements can vary significantly due to garbage collection, CPU scheduling, network jitter, and other factors. Professional teams always measure performance statistically rather than relying on single measurements.
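A minimal sketch of that idea using only the standard library: time a serializer over repeated runs, then report mean, standard deviation, and an approximate 99th percentile rather than a single number. The `benchmark` helper and the sample payload are hypothetical, not the repository's tester; with the classes generated in Step 6 you would pass something like `lambda: batch.SerializeToString()`, where `batch` is a hypothetical message.

```python
import json
import statistics
import timeit


def benchmark(fn, repeat: int = 25, number: int = 100) -> dict[str, float]:
    """Call fn `number` times per sample for `repeat` samples; return per-call stats."""
    samples = timeit.repeat(fn, repeat=repeat, number=number)
    per_call = [s / number for s in samples]  # seconds per single call
    return {
        "mean": statistics.mean(per_call),
        "stdev": statistics.stdev(per_call),
        # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile
        "p99": statistics.quantiles(per_call, n=100)[98],
    }


# Hypothetical batch of 1,000 log entries.
logs = [
    {"timestamp": 1716789567 + i, "level": "INFO", "service": f"svc-{i % 5}",
     "message": f"request {i} handled", "latency_ms": i % 250}
    for i in range(1000)
]

stats = benchmark(lambda: json.dumps(logs).encode("utf-8"), repeat=25, number=10)
print({k: f"{v * 1e3:.3f} ms" for k, v in stats.items()})
```

With only 25 samples the p99 is little more than the slowest run; collect a few hundred samples before trusting tail numbers. Also note that `timeit` switches off garbage collection while timing, which removes one of the noise sources mentioned above; its documentation shows re-enabling it with `gc.enable()` in the setup string if you want GC pauses included.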

🎯 Your Success Metrics

When you run the complete system, you should see results like this:

📊 PERFORMANCE ANALYSIS RESULTS (Protocol Buffers v29.3)
================================================================================

⚡ SERIALIZATION PERFORMANCE
Metric            JSON         Protobuf     Improvement
----------------------------------------------------------------------
Average Time      0.018420s    0.004830s    3.81x faster
Consistency (σ)   0.001240s    0.000310s    More consistent
Data Size         687,340B     198,230B     3.47x smaller

🌍 REAL-WORLD IMPACT PROJECTIONS
--------------------------------------------------
Daily volume (1M logs): 465.6 MB storage saved
Annual savings: 166.2 GB per year
CPU time saved: 13.59 seconds per day
Bandwidth reduction: 3.5x less network traffic

🚀 HIGH-SCALE SYSTEM IMPACT
--------------------------------------------------
At 50,000 req/sec: 23,914 KB/sec bandwidth saved
Infrastructure impact: 3.8x more throughput per server
Cost implications: Significant reduction in compute/network costs

These aren't just numbers - they represent the performance characteristics that enable systems to scale from thousands to millions of users.
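The headline ratios and the CPU-time projection can be cross-checked directly from the measured values. The sketch below assumes that each timed run serializes a batch of 1,000 log entries, so 1M logs per day means 1,000 batches; that batch size is an assumption, since the tester's exact formulas are not shown here.

```python
# Measured values copied from the sample output above.
json_time_s, proto_time_s = 0.018420, 0.004830   # average time per batch
json_bytes, proto_bytes = 687_340, 198_230       # serialized size per batch

speedup = json_time_s / proto_time_s
size_ratio = json_bytes / proto_bytes

# Assumption: one batch = 1,000 logs, so 1M logs/day = 1,000 batches/day.
batches_per_day = 1_000_000 // 1_000
cpu_saved_per_day_s = (json_time_s - proto_time_s) * batches_per_day

print(f"{speedup:.2f}x faster")             # 3.81x faster
print(f"{size_ratio:.2f}x smaller")         # 3.47x smaller
print(f"{cpu_saved_per_day_s:.2f} s/day")   # 13.59 s/day
```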

🚀 One-Click Deployment

Professional teams automate everything. Here's your complete deployment script:

# save as: deploy_protobuf_system.sh

Complete Step-by-Step Build, Test, and Verification Guide

Understanding the Development Workflow

Before we dive into the commands, let me help you understand what we're building and why each step matters. Think of this process like constructing a house - we need to lay the foundation (environment setup), build the structure (code generation), wire the utilities (dependencies), and then test everything works (verification).

In distributed systems development, this methodical approach prevents the "works on my machine" problems that can plague complex systems. Each step we'll take serves a specific purpose in creating a reliable, reproducible development environment.

Method 1: Local Development Workflow (Without Docker)

Phase 1: Environment Preparation and Project Setup

Step 1: Create and Enter Project Directory

# Create the project directory structure
mkdir protobuf-log-system
cd protobuf-log-system
# Confirm where you are
pwd

Expected Output:

/your/path/protobuf-log-system

This step establishes your project workspace. In distributed systems development, consistent directory structures are crucial because they make automation and collaboration much more reliable.

Step 2: Create Complete Directory Structure

# Create all necessary directories in one command
mkdir -p proto src tests docker scripts frontend logs/json logs/protobuf

# Verify the structure was created correctly
tree . || ls -la

Expected Output:

.
├── docker/
├── frontend/
├── logs/
│   ├── json/
│   └── protobuf/
├── proto/
├── scripts/
├── src/
└── tests/

Step 3: Set Up Python Virtual Environment (Recommended)

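# Create a virtual environment to isolate dependencies
python3 -m venv venv
# Activate it (macOS/Linux) and confirm which interpreter is in use
source venv/bin/activate
which python

Expected Output:

/your/path/protobuf-log-system/venv/bin/python

Phase 2: Dependencies and Protocol Buffer Setup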

Step 4: Install Python Dependencies

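# Create requirements file with exact versions
requirements.txt

# Install the pinned dependencies
pip install -r requirements.txt

Expected Output:

Successfully installed protobuf-29.3.0 grpcio-tools-1.71.0 ...
Protocol Buffers version: 29.3.0

The specific version pinning ensures reproducible builds across different environments. Note that 29.3 is the Protocol Buffers release number; on PyPI the matching Python runtime is published as protobuf 5.29.3, so that is the version string pip itself reports. Like every Python release since the 4.21 line, it uses the upb-based C backend by default, which is considerably faster than the pure-Python implementation in high-throughput scenarios.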
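If you want the environment itself to enforce those pins, a small startup check can compare installed versions against requirements.txt. This is a hypothetical helper (`find_pin_mismatches` is not part of any library); it only understands simple `name==version` lines and relies on `importlib.metadata` from the standard library.

```python
from importlib import metadata
from typing import Optional


def find_pin_mismatches(requirements_text: str) -> dict[str, tuple[str, Optional[str]]]:
    """Return {package: (pinned, installed_or_None)} for every unsatisfied pin.
    Only simple 'name==version' lines are checked; anything else is skipped."""
    mismatches = {}
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "==" not in line:
            continue
        name, pinned = (part.strip() for part in line.split("==", 1))
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches
```

A typical use is to call it at startup, for example `find_pin_mismatches(open("requirements.txt").read())`, and exit with an error if the returned dict is not empty. The comparison is an exact string match, so write each pin in the same form the installed package reports.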

Step 5: Create Protocol Buffer Schema

# Create the proto schema file

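proto/log_entry.proto

Expected Output:

# Verify the proto file was created correctly

The protocol buffer schema serves as a contract between services. Notice how each field has a number: these numbers, not the field names, identify each field on the wire, which is why they are crucial for backward compatibility as your system evolves. Once a number is in use, never reassign it to a different field.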

Step 6: Generate Python Code from Protocol Buffers

# Generate Python classes from the proto file
python -m grpc_tools.protoc \
  --proto_path=proto \
  --python_out=proto \
  --grpc_python_out=proto \
  proto/log_entry.proto

# List the generated files
ls -l proto

Expected Output:

total 16
-rw-r--r-- 1 user staff  789 May 27 10:30 log_entry.proto
-rw-r--r-- 1 user staff 2847 May 27 10:30 log_entry_pb2.py
-rw-r--r-- 1 user staff 1234 May 27 10:30 log_entry_pb2_grpc.py

The generated Python files contain the classes and methods needed to serialize and deserialize your data using Protocol Buffers. Never edit these files manually; regenerate them with the command above whenever you update your proto schema.

Phase 3: Core Implementation

Step 7: Create the Log Processor Implementation

# Create the core log processing module
src/log_processor.py

Expected Output:

src/log_processor.py

This log processor implementation includes production-grade error handling and validation. Notice how it gracefully handles missing or malformed data rather than crashing - this resilience is crucial in distributed systems where partial failures are common.
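The processor's own code isn't reproduced here, but the pattern is easy to sketch: normalize each incoming record, fill safe defaults for missing fields, and set aside records that can't be repaired instead of raising. The field names, defaults, and the `normalize_entries` helper below are hypothetical; they are not taken from the repository's schema.

```python
import time
from typing import Any

# Hypothetical field names and defaults; the real schema in proto/log_entry.proto may differ.
DEFAULTS = {"level": "INFO", "service": "unknown", "message": ""}
VALID_LEVELS = {"DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"}


def normalize_entries(raw_entries: list[Any]) -> tuple[list[dict], list[tuple[int, str]]]:
    """Repair what can be repaired; return (clean_entries, rejected) where
    rejected holds (index, reason) instead of raising on the first bad record."""
    clean, rejected = [], []
    for i, raw in enumerate(raw_entries):
        if not isinstance(raw, dict):
            rejected.append((i, f"expected dict, got {type(raw).__name__}"))
            continue
        entry = {**DEFAULTS, **raw}                 # fill missing fields
        entry.setdefault("timestamp", time.time())  # missing timestamp -> now
        try:
            entry["timestamp"] = float(entry["timestamp"])
        except (TypeError, ValueError):
            rejected.append((i, f"bad timestamp {entry['timestamp']!r}"))
            continue
        level = str(entry["level"]).upper()
        entry["level"] = level if level in VALID_LEVELS else "INFO"  # unknown -> INFO
        clean.append(entry)
    return clean, rejected


clean, rejected = normalize_entries([
    {"level": "error", "message": "db timeout", "timestamp": "1716789567"},
    {"message": "no level or timestamp"},
    "not a dict",
    {"timestamp": "yesterday"},
])
print(len(clean), rejected)
# 2 [(2, 'expected dict, got str'), (3, "bad timestamp 'yesterday'")]
```

Returning the rejects alongside the clean batch lets the caller log or dead-letter them while the rest of the batch is still serialized.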

Step 8: Test Basic Functionality

# Create a simple test and run it to verify everything works
test_basic_functionality.py

python test_basic_functionality.py

Expected Output:

🧪 Testing basic Protocol Buffers functionality...
🚀 Initialized with Protocol Buffers version 29.3.0
📤 Testing serialization...
✅ Serialized 1 log entries to 156 bytes
📥 Testing deserialization...
✅ Deserialized 1 log entries from protobuf
✅ Basic functionality test PASSED

This test confirms that your Protocol Buffers setup is working correctly. A successful serialization and deserialization cycle shows that the generated code works and that your environment is configured correctly.

Phase 4: Performance Testing and Verification

Step 9: Create Performance Testing Module

# Create comprehensive performance testing

src/performance_tester.py

Step 10: Run Performance Tests

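# Run the comprehensive performance analysis
cd src
python performance_tester.py
cd ..  # return to the project root for the remaining steps

Expected Output:

The results should resemble those shown in the "🎯 Your Success Metrics" section above.

This performance analysis demonstrates the real-world benefits of Protocol Buffers. Improvements in the 3-4x range are typical for structured records like these, although the exact gain depends on payload shape, hardware, and which JSON library you compare against. Gains of this size are the kind of optimization that helps systems scale from thousands to millions of requests per second.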

Phase 5: Comprehensive Testing

Step 11: Create & Run Unit Tests

# Create comprehensive unit tests
tests/test_protobuf_system.py

# Run them (-s lets pytest show the printed compression results)
python -m pytest tests/ -v -s

Expected Output:

tests/test_protobuf_system.py::TestProtobufSystem::test_complete_serialization_cycle PASSED
.......
📊 Compression Test Results:
JSON size: 387,450 bytes
Protobuf size: 112,890 bytes
Compression ratio: 3.43x smaller

All tests passing confirms that your implementation is working correctly and provides the expected performance characteristics.

Step 13: Generate Sample Data and Verify File Operations

# Run the sample data generation
python generate_sample_data.py

Expected Output:

📊 Generating sample log data for verification...
🚀 Initialized with Protocol Buffers version 29.3.0
🔬 Performance tester initialized with enhanced v29.3 capabilities
✅ Generated 1000 sample log entries
......
🔍 Verifying data integrity...
✅ Deserialized 1000 log entries from protobuf
✅ Data integrity verified - original and restored data match

This verification step confirms that your system can successfully save and restore data in both formats, with Protocol Buffers showing significant size advantages.
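The integrity check boils down to comparing what went in with what came out. A minimal sketch, assuming both sides have been converted to plain dicts: compare record counts, then an order-independent SHA-256 digest of a canonical JSON form, which is handy when the two copies live in different processes or files. `canonical_digest` and `verify_roundtrip` are hypothetical helpers, not part of the repository.

```python
import hashlib
import json


def canonical_digest(entries: list[dict]) -> str:
    """SHA-256 over each entry's canonical JSON (sorted keys, compact separators).
    The per-entry strings are sorted first, so record order does not matter."""
    lines = sorted(json.dumps(e, sort_keys=True, separators=(",", ":")) for e in entries)
    return hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()


def verify_roundtrip(original: list[dict], restored: list[dict]) -> bool:
    """True when both copies hold the same records, ignoring order."""
    return (len(original) == len(restored)
            and canonical_digest(original) == canonical_digest(restored))


original = [{"level": "INFO", "seq": i, "message": f"ok {i}"} for i in range(1000)]
restored = json.loads(json.dumps(original))  # stand-in for the protobuf round trip
print(verify_roundtrip(original, restored))  # True
```

When the restored side comes from protobuf, compare like with like: proto3 does not transmit fields set to their default values (0, empty string, false), so a naive dict comparison can report false mismatches unless both sides are normalized the same way.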

Step 14: Verify Directory Structure and Files

# Check the complete project structure (skipping the virtual environment)
echo "📁 Final Project Structure:"
find . -path ./venv -prune -o -type f \( -name "*.py" -o -name "*.proto" -o -name "*.json" -o -name "*.pb" \) -print | sort

Expected Output:

📁 Final Project Structure:
./generate_sample_data.py
./proto/log_entry.proto
./proto/log_entry_pb2.py
./proto/log_entry_pb2_grpc.py
./src/log_processor.py
./src/performance_tester.py
./test_basic_functionality.py
./tests/test_protobuf_system.py
....

Perfect! Your local development environment is now fully functional and verified.

Method 2: Docker-Based Workflow

Now let's explore the Docker approach, which provides environment isolation and makes deployment more predictable. This method is particularly valuable when working with teams or deploying to production environments.

Understanding the Docker Approach

Docker containerization solves several challenges in distributed systems development. First, it ensures that your application runs identically across different environments - your laptop, your colleague's machine, and production servers. Second, it packages all dependencies together, eliminating version conflicts. Third, it provides a foundation for orchestration platforms like Kubernetes that are essential for production distributed systems.

Let's build the same Protocol Buffers system using Docker, which will give you experience with the containerization practices used in modern distributed systems.

Phase 1: Docker Environment Setup

Step 15: Create Dockerfile

# Create Docker configuration directory and Dockerfile

docker/Dockerfile

Notice how the Dockerfile is structured to optimize Docker's layer caching. By copying requirements.txt first and installing dependencies before copying the rest of the code, we ensure that dependency installation only runs when requirements change, not when source code changes.

Step 16: Create Docker Compose Configuration

# Create docker-compose.yml for easier container management

docker/docker-compose.yml

Docker Compose allows us to define multiple related services and their configurations in a single file. This approach scales well when you need to run multiple containers that work together - exactly what you'll encounter in distributed systems.

Phase 2: Build and Test with Docker

Step 17: Build Docker Image

# Build the Docker image
echo "🐳 Building Docker image..."
docker build -f docker/Dockerfile -t protobuf-log-system .

# Verify the image was created
docker images | grep protobuf-log-system

Expected Output:

🐳 Building Docker image...
[+] Building 45.2s (12/12) FINISHED
 => [internal] load build definition from Dockerfile    0.0s
.....
REPOSITORY            TAG      IMAGE ID       CREATED         SIZE
protobuf-log-system   latest   abc123def456   2 minutes ago   234MB

The build process shows Docker executing each step in the Dockerfile. The final image size of around 234MB includes Python, Protocol Buffers, and all dependencies needed to run your system.

Step 18: Run Performance Tests in Docker

# Run the performance tests using Docker
echo "⚡ Running performance tests in Docker container..."
docker run --rm -v "$(pwd)/logs:/app/logs" protobuf-log-system

Expected Output:

⚡ Running performance tests in Docker container...
🚀 Initialized with Protocol Buffers version 29.3.0
🔬 Performance tester initialized with enhanced v29.3 capabilities
................
📊 Running Protocol Buffers serialization tests
Progress: 6/25 iterations completed
Progress: 12/25 iterations completed
Progress: 18/25 iterations completed
[... performance results similar to local testing ...]

The containerized version should produce performance ratios close to those of the local version (absolute timings always jitter a little between runs), demonstrating that Docker provides consistent environments.

Step 19: Run Unit Tests in Docker

# Run the unit tests using the testing profile
echo "🧪 Running unit tests in Docker..."
docker-compose -f docker/docker-compose.yml --profile testing run --rm test-runner

Expected Output:

========================= test session starts =========================
collected 3 items
tests/test_protobuf_system.py::TestProtobufSystem::test_complete_serialization_cycle PASSED
....
📊 Compression Test Results:
JSON size: 387,450 bytes
Protobuf size: 112,890 bytes
Compression ratio: 3.43x smaller
========================= 3 passed in 2.34s =========================

The tests pass identically in Docker, confirming that containerization maintains functionality while providing environment isolation.

Step 20: Interactive Docker Development

# Start an interactive shell with logs, source, and tests mounted from the host
docker run -it --rm \
  -v "$(pwd)/logs:/app/logs" \
  -v "$(pwd)/src:/app/src" \
  -v "$(pwd)/tests:/app/tests" \
  protobuf-log-system bash

# Inside the container, you can run:
# python src/performance_tester.py
# python -m pytest tests/ -v
# python test_basic_functionality.py
# ls -la logs/

The interactive mode is invaluable for debugging and exploration. You can examine the containerized environment, run commands interactively, and understand how your application behaves in the Docker environment.

Step 21: Verify Docker Volume Mounting

# Run data generation in container
docker run --rm -v "$(pwd)/logs:/app/logs" protobuf-log-system python generate_sample_data.py

Expected Output:

💾 Testing data persistence with Docker volumes...
🚀 Initialized with Protocol Buffers version 29.3.0
🔬 Performance tester initialized with enhanced v29.3 capabilities
📊 Generating sample log data for verification...
✅ Generated 1000 sample log entries
✅ Serialized 1000 log entries to 289,450 bytes
.....
🔍 Verifying file accessibility:
✅ JSON files accessible from host
    1000 logs/json/logs_1716789567.json
✅ Protobuf files accessible from host
-rw-r--r-- 1 user staff 284K May 27 11:15 logs/protobuf/logs_1716789568.pb

This demonstrates that Docker volume mounting works correctly, allowing data generated inside containers to persist on the host system.

Phase 3: Docker Optimization and Production Readiness

Step 22: Create Multi-Stage Docker Build

# Create an optimized Dockerfile for production

docker/Dockerfile.production

This production Dockerfile uses multi-stage builds to create a smaller final image and includes security best practices like running as a non-root user and including health checks.

Step 23: Build and Test Production Image

# Build the production image
echo "🏭 Building production Docker image..."
docker build -f docker/Dockerfile.production -t protobuf-log-system:production .

# Compare image sizes
echo -e "\n📊 Image Size Comparison:"
docker images | grep protobuf-log-system

# Test the production image
echo -e "\n🧪 Testing production image..."
docker run --rm -v "$(pwd)/logs:/app/logs" protobuf-log-system:production python test_basic_functionality.py

Expected Output:

🏭 Building production Docker image...
[+] Building 42.1s (18/18) FINISHED
...

📊 Image Size Comparison:
protobuf-log-system   production   def789abc123   1 minute ago     198MB
protobuf-log-system   latest       abc123def456   10 minutes ago   234MB

🧪 Testing production image...
🧪 Testing basic Protocol Buffers functionality...
🚀 Initialized with Protocol Buffers version 29.3.0
📤 Testing serialization...
✅ Serialized 1 log entries to 156 bytes
📥 Testing deserialization...
✅ Deserialized 1 log entries from protobuf
✅ Basic functionality test PASSED

The production image is about 15% smaller and includes security and operational improvements while maintaining the same functionality.

Phase 4: Docker Compose for Complete System

Step 24: Create Comprehensive Docker Compose Setup

# Create a complete docker-compose setup with multiple services

docker/docker-compose.complete.yml

Step 25: Run Complete System with Docker Compose

# Run the complete system
echo "🚀 Starting complete system with Docker Compose..."
docker-compose -f docker/docker-compose.complete.yml up --build

# Run tests with the testing profile
echo -e "\n🧪 Running tests with Docker Compose..."
docker-compose -f docker/docker-compose.complete.yml --profile testing up --build

# Generate data with the data-generation profile
echo -e "\n📊 Generating data with Docker Compose..."
docker-compose -f docker/docker-compose.complete.yml --profile data-generation up --build

# Clean up
echo -e "\n🧹 Cleaning up containers..."
docker-compose -f docker/docker-compose.complete.yml down

Expected Output:

🚀 Starting complete system with Docker Compose...
[+] Building 2.1s (18/18) FINISHED
[+] Running 1/1
 ✔ Container docker-protobuf-app-1  Created    0.0s
Attaching to docker-protobuf-app-1
docker-protobuf-app-1  | 🚀 Protocol Buffers v29.3 Performance Analysis Suite
docker-protobuf-app-1  | ============================================================
[... performance test output ...]
docker-protobuf-app-1 exited with code 0

Docker Compose orchestrates the entire system, managing container lifecycle, networking, and dependencies - skills that transfer directly to production distributed systems orchestration.

Comprehensive Verification and Comparison

Let's now compare both approaches and verify that everything works correctly across different environments.
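The comparison script itself isn't reproduced here, but its core check can be sketched: rather than expecting identical timings (they always jitter, even on the same host), compare the speedup ratio measured in each environment and accept them if they agree within a tolerance. The result dictionaries, their keys, and the 15% tolerance are hypothetical choices for illustration.

```python
def speedup(result: dict[str, float]) -> float:
    """JSON time divided by Protocol Buffers time for one environment's run."""
    return result["json_time_s"] / result["protobuf_time_s"]


def environments_agree(local: dict[str, float], docker: dict[str, float],
                       tolerance: float = 0.15) -> bool:
    """True when the two speedups differ by at most `tolerance`, relative to the larger."""
    a, b = speedup(local), speedup(docker)
    return abs(a - b) / max(a, b) <= tolerance


# Local figures mirror the sample output above; the Docker figures are made up for illustration.
local = {"json_time_s": 0.018420, "protobuf_time_s": 0.004830}
docker = {"json_time_s": 0.019100, "protobuf_time_s": 0.005050}
print(f"local {speedup(local):.2f}x, docker {speedup(docker):.2f}x, "
      f"agree={environments_agree(local, docker)}")
```

Comparing ratios instead of raw seconds makes the check robust to a container being uniformly a little slower, which is usually what you care about when asking whether both environments tell the same story.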

Performance Comparison: Local vs Docker

Step 26: Run Side-by-Side Performance Comparison

# Create & Run a comparison script

compare_environments.py

Final Verification Checklist

Step 27: Complete System Verification

# Create comprehensive verification script
verify_complete_system.sh

# Run it
bash verify_complete_system.sh

Expected Output:

🔍 Complete System Verification
==================================================
📁 1. Verifying project structure...
✅ Directory exists: proto
✅ Directory exists: src
.....
📄 2. Verifying required files...
✅ File exists: proto/log_entry.proto
✅ File exists: proto/log_entry_pb2.py
......
🐍 3. Testing Python environment...
✅ Protocol Buffers v29.3.0 available
🧪 4. Testing basic functionality...
✅ Basic functionality test passed
🐳 5. Testing Docker availability...
✅ Docker is available
Docker version 24.0.2, build cb74dfc
✅ Docker image built successfully
📊 6. Checking generated log files...
📄 JSON log files: 2
📄 Protobuf log files: 2
✅ Log files generated successfully
⚡ 7. Running quick performance verification...
✅ Performance improvements verified (3.8x faster)
🎉 System Verification Complete!

Understanding What You've Accomplished

Through this comprehensive build and verification process, you've accomplished something significant that goes well beyond just running a tutorial. You've built a production-grade distributed systems component using the same tools, patterns, and practices used by engineering teams at major technology companies.

Technical Achievements:

Implemented Protocol Buffers v29.3 with performance optimizations that show measurable 3-4x improvements

Created comprehensive testing that validates both functionality and performance characteristics

Built Docker containers that provide consistent, reproducible environments

Established automation that eliminates manual setup and reduces deployment errors

Distributed Systems Skills Developed:

Schema Evolution: You understand how Protocol Buffer schemas can evolve without breaking existing systems

Performance Analysis: You can measure and quantify the impact of architectural decisions

Environment Management: You've experienced both local development and containerized deployment workflows

Testing Strategies: You've implemented both unit testing and performance benchmarking

Infrastructure as Code: Your automation scripts demonstrate how professional teams manage complex setups

Professional Development Practices:

Reproducible Builds: Both your local and Docker environments produce equivalent results

Error Handling: Your implementation gracefully handles edge cases and partial failures

Observability: Your system provides clear feedback about what's happening at each step

Documentation: Your verification scripts serve as living documentation of system capabilities

The performance improvements you measured aren't just academic numbers - they represent the kind of optimization that enables systems to scale from thousands to millions of users. The containerization practices you used are the foundation for orchestration platforms like Kubernetes that manage production distributed systems.

Most importantly, you've developed the analytical mindset that separates senior engineers from junior developers: the ability to measure, verify, and quantify the impact of technical decisions. This skill becomes crucial when making architectural choices for systems that need to operate reliably at scale.

The fact that your system works identically in both local and Docker environments demonstrates that you've built something robust and deployable - the kind of system that could be integrated into a larger distributed architecture with confidence.

🎓 What This Teaches About Distributed Systems Thinking

Building this system teaches you several crucial distributed systems concepts:

Schema Evolution and Service Compatibility: The Protocol Buffer schema demonstrates how services can evolve independently without breaking the overall system. This is fundamental to microservices architecture.

Performance Measurement and Analysis: The statistical performance testing shows you how to make data-driven architectural decisions. In distributed systems, intuition often misleads - measurement is essential.

Infrastructure as Code: The automation scripts demonstrate how professional teams ensure reproducible, reliable deployments. Manual processes don't scale to hundreds of services.

Error Handling and Resilience: The comprehensive error handling shows how production systems handle partial failures gracefully rather than crashing entire request flows.

🔥 Your Assignment: High-Load Simulation

Now that you understand the fundamentals, create a realistic high-load scenario:

Objective: Build a multi-service log aggregation system that demonstrates Protocol Buffers' advantages under realistic distributed system loads.

Requirements:

Implement 5 concurrent "microservices" generating logs

Each service produces 1,000 logs per second for 30 seconds

Measure and compare JSON vs Protocol Buffers across the entire system

Calculate real-world cost implications for a system processing 100M logs daily

Document how the performance improvements enable higher system reliability

This assignment mirrors the kind of analysis you'd do when making architectural decisions for production distributed systems.
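If you want a starting point, here is a hedged skeleton for the load generator using only the standard library. Everything in it is hypothetical scaffolding: `run_service`, the log fields, and the `serialize` hook, which you would swap for a function that fills your generated message and calls `SerializeToString()`. Python threads share the GIL, so CPU-bound serialization won't truly run in parallel; for real concurrency across the five services consider processes (`multiprocessing` or `ProcessPoolExecutor`, which require picklable, module-level functions).

```python
import json
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass
class ServiceStats:
    service: str
    count: int
    total_bytes: int
    serialize_seconds: float  # time spent inside serialize() only


def json_serialize(entry: dict) -> bytes:
    return json.dumps(entry).encode("utf-8")


def run_service(name: str, rate: int, duration_s: int,
                serialize: Callable[[dict], bytes], paced: bool = True) -> ServiceStats:
    """Hypothetical microservice: emit `rate` logs per second for `duration_s` seconds."""
    count, total_bytes, busy = 0, 0, 0.0
    for second in range(duration_s):
        tick = time.perf_counter()
        for i in range(rate):
            entry = {"service": name, "second": second, "seq": count,
                     "level": "INFO", "message": f"request {i} handled"}
            start = time.perf_counter()
            total_bytes += len(serialize(entry))
            busy += time.perf_counter() - start
            count += 1
        if paced:  # sleep out the rest of the second to hold the target rate
            time.sleep(max(0.0, 1.0 - (time.perf_counter() - tick)))
    return ServiceStats(name, count, total_bytes, busy)


def simulate(num_services: int = 5, rate: int = 1000, duration_s: int = 30,
             serialize: Callable[[dict], bytes] = json_serialize,
             paced: bool = True) -> list[ServiceStats]:
    """Run all services concurrently (threads) and collect their stats."""
    with ThreadPoolExecutor(max_workers=num_services) as pool:
        futures = [pool.submit(run_service, f"service-{n}", rate, duration_s, serialize, paced)
                   for n in range(num_services)]
        return [f.result() for f in futures]


# Quick unpaced smoke run; call simulate() with its defaults for the full 30-second test.
stats = simulate(rate=100, duration_s=2, paced=False)
print(sum(s.count for s in stats), "logs,",
      sum(s.total_bytes for s in stats), "bytes,",
      f"{sum(s.serialize_seconds for s in stats):.3f}s serializing")
```

Running `simulate()` once with `json_serialize` and once with a protobuf-based hook gives you directly comparable byte and CPU totals to feed into the 100M-logs-per-day cost estimate.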

💰 The Bottom Line

Protocol Buffers isn't just a serialization format - it's a foundational technology that enables distributed systems to operate efficiently at scale. The performance improvements you measured today (3-4x speed, 60% size reduction) compound across every service interaction in a distributed system.

When you multiply these improvements across the millions of service calls happening every minute in a large distributed system, you're looking at the difference between manageable infrastructure costs and unsustainable ones. More importantly, you're looking at the difference between a system that responds predictably under load and one that becomes unreliable during traffic spikes.

The schema evolution capabilities you implemented solve one of the hardest problems in distributed systems: how to continuously deploy and update services without coordinating massive system-wide shutdowns. This capability is what allows companies like Google and Netflix to deploy thousands of service updates daily while maintaining system reliability.

📚 Next Week Preview

Next week, we'll add Avro serialization support and build a log system that uses Avro's schema versioning to demonstrate schema evolution.

Until then, experiment with your Protocol Buffers system. Try different log volumes, measure the performance characteristics, and think about how these optimizations would impact a system processing millions of requests per day.

Remember: The difference between junior and senior engineers isn't just technical knowledge - it's the ability to make architectural decisions based on measured performance characteristics and real-world system requirements. Today's lesson builds exactly this kind of engineering judgment.

Happy coding, and welcome to the world of high-performance distributed systems!

P.S. If you found this issue valuable, share it with your team. The insights and implementation patterns you learned today are the same ones used by engineering teams at the world's largest tech companies. Understanding these fundamentals will serve you well throughout your distributed systems career.

System Design Course Daily - Issue #16

© 2025 Systems Design Roadmap

For industry leaders building tomorrow's systems