Day 13: Implement TLS Encryption for Secure Log Transmission

What You’ll Build Today

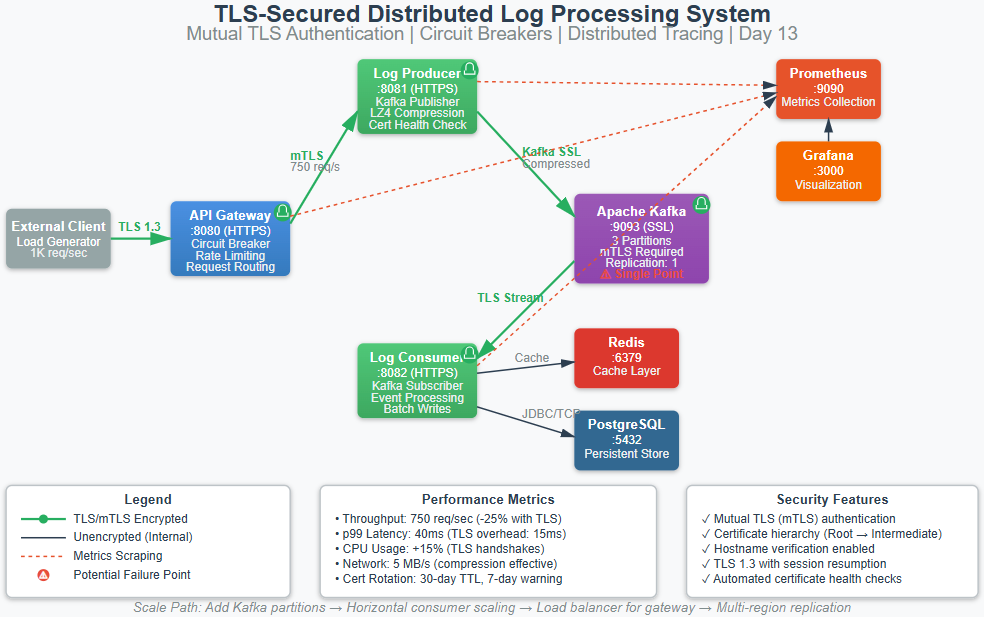

By the end of this lesson, you’ll have a fully functional distributed log processing system with enterprise-grade security. Here’s what we’re building:

Mutual TLS (mTLS) authentication between all distributed services

Certificate management infrastructure with automated rotation

Encrypted Kafka message streams with broker authentication

TLS-secured REST endpoints with client certificate validation

Performance benchmarking to quantify encryption overhead

Why This Matters: Security at Scale

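Every log message in your system carries potentially sensitive data: user IDs, IP addresses, request parameters, error traces. At Netflix scale, with billions of events per day, a single unencrypted message can expose customer data. The 2017 Equifax breach is a cautionary tale: attackers got in through an unpatched Apache Struts vulnerability, and an expired certificate on a traffic-inspection device let the data exfiltration go unnoticed for more than two months. Today’s work addresses both halves of that lesson: encrypted, authenticated internal traffic and monitored certificate lifetimes.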

But TLS isn’t just about compliance. Modern distributed systems span cloud regions, VPCs, and hybrid infrastructure. Without transport encryption, you’re trusting network isolation—a brittle assumption. Service mesh architectures at Uber and Lyft enforce mTLS by default because network boundaries are fluid. Your log processing system needs the same zero-trust approach.

The architectural challenge: TLS adds 15-30% latency overhead per request. At high throughput, CPU becomes a bottleneck. We’ll implement TLS without sacrificing the performance gains from yesterday’s compression work.

System Design Deep Dive: TLS in Distributed Architectures

Pattern 1: Mutual TLS vs One-Way TLS Trade-offs

One-way TLS (server authentication only) protects against eavesdropping but not impersonation. An attacker who compromises the network can send malicious log events. Mutual TLS requires both client and server to present certificates, establishing bidirectional trust.

The trade-off: mTLS doubles certificate management complexity. Each service needs its own identity, certificate rotation, and revocation handling. For internal microservices, this overhead is justified. Your log processing system handles sensitive data, so we implement mTLS everywhere.
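To make the difference concrete, here is a minimal sketch using only the JDK’s JSSE API. The class and method names (`ClientAuthModes`, `oneWayServerEngine`, `mutualServerEngine`) are hypothetical and chosen for illustration; the only thing that separates the two modes on the server side is whether a client certificate is demanded.

```java
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLEngine;

/** Hypothetical helper contrasting one-way TLS and mutual TLS on the server side. */
final class ClientAuthModes {

    /** One-way TLS: the server proves its identity, the client stays anonymous. */
    static SSLEngine oneWayServerEngine(SSLContext context) {
        SSLEngine engine = context.createSSLEngine();
        engine.setUseClientMode(false);   // act as the server side of the handshake
        engine.setNeedClientAuth(false);  // no client certificate is requested
        return engine;
    }

    /** Mutual TLS: the handshake fails unless the client presents a trusted certificate. */
    static SSLEngine mutualServerEngine(SSLContext context) {
        SSLEngine engine = context.createSSLEngine();
        engine.setUseClientMode(false);
        engine.setNeedClientAuth(true);   // a client certificate is mandatory
        return engine;
    }

    public static void main(String[] args) throws Exception {
        SSLContext context = SSLContext.getDefault(); // JDK default key and trust material
        System.out.println("one-way requires client cert: "
                + oneWayServerEngine(context).getNeedClientAuth());
        System.out.println("mTLS requires client cert:    "
                + mutualServerEngine(context).getNeedClientAuth());
    }
}
```

In a real service the `SSLContext` would be built from the service’s own keystore and truststore rather than the JDK defaults used here.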

Anti-pattern: Using self-signed certificates in production without proper CA infrastructure. This works until you need to rotate certificates across 50 services during an incident. Build certificate automation from day one.

Pattern 2: Certificate Authority Hierarchy

A root CA signs intermediate CAs, which sign service certificates. This hierarchy limits blast radius—if a service’s private key leaks, you revoke one certificate, not rebuild the entire PKI. Netflix’s Certificate Service provisions short-lived certificates (12-hour TTL) to minimize exposure windows.

We’ll implement a two-tier hierarchy: one root CA for the cluster, intermediate CAs per environment (dev/staging/prod). Services request certificates from the intermediate CA using mutual TLS with bootstrap credentials. This mirrors Kubernetes certificate signing requests.

Performance implication: Certificate chains increase handshake size. Each additional certificate in the chain adds 1-2KB to the TLS handshake. Keep chains shallow—three certificates maximum (service → intermediate → root).

Pattern 3: Kafka TLS Configuration Layers

Kafka has four encryption points:

Client-to-broker (producer/consumer connections)

Inter-broker replication

Broker-to-ZooKeeper (or KRaft controller)

Schema registry communication

Each layer requires separate keystores and truststores. The failure mode: misconfigured broker-to-broker TLS brings down the entire cluster during leader election. We configure all layers but prioritize client-to-broker for immediate security wins.

Trade-off: TLS on inter-broker replication impacts throughput more than client connections because replication is synchronous. Measure before optimizing—modern CPUs handle TLS efficiently with AES-NI instructions.

Pattern 4: Connection Pooling with TLS

HTTP/2 multiplexes streams over a single TLS connection, amortizing handshake costs. Spring Boot’s WebClient reuses connections, but default pools are undersized for high throughput. We increase pool size from 500 to 2000 connections and enable HTTP/2.

Critical insight: TLS session resumption saves 90% of handshake CPU cost. Configure session caching (10-minute TTL) and session tickets. Without resumption, each request pays the full ECDHE key exchange cost—5-10ms at scale.
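As a rough illustration of the session-cache setting, the sketch below uses the JDK’s `SSLSessionContext`. The class name and the cache size of 20,000 entries are assumptions made for the example; only the 10-minute timeout comes from the text above. Session tickets are controlled separately and are not shown.

```java
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLSessionContext;
import java.security.GeneralSecurityException;

/** Hypothetical sketch: server-side TLS session cache tuned for resumption. */
final class SessionResumptionConfig {
    static final int SESSION_TIMEOUT_SECONDS = 600; // 10-minute TTL from the lesson
    static final int SESSION_CACHE_SIZE = 20_000;   // assumed value, size to your traffic

    static SSLContext newServerContext() {
        try {
            SSLContext context = SSLContext.getInstance("TLS");
            // Null arguments fall back to the JDK's default key managers,
            // trust managers, and secure random source.
            context.init(null, null, null);
            SSLSessionContext sessions = context.getServerSessionContext();
            sessions.setSessionTimeout(SESSION_TIMEOUT_SECONDS);
            sessions.setSessionCacheSize(SESSION_CACHE_SIZE);
            return context;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Could not create TLS context", e);
        }
    }
}
```

A longer timeout means more resumed handshakes but also a longer window in which a cached session stays valid, so the TTL is a security trade-off as much as a performance one.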

Pattern 5: Certificate Rotation Without Downtime

Zero-downtime rotation requires dual-certificate support: serve both old and new certificates during the transition window. The JDK’s default KeyManager does not re-read a keystore file after startup, so we implement a CertificateReloadService that watches certificate files and hot-reloads them without a restart.
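The core of such a reload service can be sketched with the JDK alone. This is not the project’s CertificateReloadService; `ReloadingKeyStore` is a hypothetical name, and the sketch assumes the file’s last-modified time is a good enough change signal. It would be polled from a scheduler or triggered by a file watcher.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;
import java.security.GeneralSecurityException;
import java.security.KeyStore;

/** Hypothetical sketch: holds a keystore and re-reads it when the file changes. */
final class ReloadingKeyStore {
    private final Path path;
    private final String type;        // e.g. "JKS" or "PKCS12"
    private final char[] password;
    private volatile KeyStore current;
    private FileTime loadedVersion;   // last-modified time of the loaded file

    ReloadingKeyStore(Path path, String type, char[] password) {
        this.path = path;
        this.type = type;
        this.password = password.clone();
        reloadIfChanged();            // initial load
    }

    /** Returns true if the file changed on disk and was reloaded. */
    synchronized boolean reloadIfChanged() {
        try {
            FileTime onDisk = Files.getLastModifiedTime(path);
            if (onDisk.equals(loadedVersion)) {
                return false;
            }
            KeyStore fresh = KeyStore.getInstance(type);
            try (InputStream in = Files.newInputStream(path)) {
                fresh.load(in, password);
            }
            current = fresh;          // swap only after a successful parse
            loadedVersion = onDisk;
            return true;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Could not load keystore " + path, e);
        }
    }

    KeyStore get() {
        return current;
    }
}
```

Reloading the keystore is only half the job: new handshakes pick up the new certificate only once an `SSLContext` (or key manager) is rebuilt from the reloaded store. If a half-written file fails to parse, the previous keystore stays in place.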

The architectural pattern: all services trust certificates from both the current and next intermediate CA for a 24-hour overlap. This allows rolling deployments where some services use old certificates while others have updated. Amazon’s internal systems use 7-day overlap windows for large clusters.
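One way to express the overlap window in code is a trust manager that accepts a chain if either the current or the next CA’s trust manager accepts it. The sketch below is an illustration under that assumption; `OverlapTrustManager` is a hypothetical name, and the two delegates would be built from the current and next intermediate CA truststores.

```java
import javax.net.ssl.X509TrustManager;
import java.security.cert.CertificateException;
import java.security.cert.X509Certificate;
import java.util.Arrays;

/** Hypothetical sketch: trusts chains issued by either the current or the next CA. */
final class OverlapTrustManager implements X509TrustManager {
    private final X509TrustManager current;
    private final X509TrustManager next;

    OverlapTrustManager(X509TrustManager current, X509TrustManager next) {
        this.current = current;
        this.next = next;
    }

    @Override
    public void checkClientTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        try {
            current.checkClientTrusted(chain, authType);
        } catch (CertificateException rejectedByCurrent) {
            // Fall back to the next CA; this throws if neither CA trusts the chain.
            next.checkClientTrusted(chain, authType);
        }
    }

    @Override
    public void checkServerTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        try {
            current.checkServerTrusted(chain, authType);
        } catch (CertificateException rejectedByCurrent) {
            next.checkServerTrusted(chain, authType);
        }
    }

    @Override
    public X509Certificate[] getAcceptedIssuers() {
        X509Certificate[] a = current.getAcceptedIssuers();
        X509Certificate[] b = next.getAcceptedIssuers();
        X509Certificate[] all = Arrays.copyOf(a, a.length + b.length);
        System.arraycopy(b, 0, all, a.length, b.length);
        return all;
    }
}
```

A simpler alternative with the same effect is to import both CA certificates into a single truststore for the duration of the overlap; the composite form is mainly useful when the two trust sources are loaded and retired independently.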

Failure scenario: If rotation fails halfway through a cluster, you have split-brain authentication. Services with new certificates can’t talk to services with old certificates. Monitoring must detect certificate expiration 7 days in advance and alert on rotation failures immediately.

Implementation Walkthrough: Production TLS Infrastructure

GitHub link:

https://github.com/sysdr/sdc-java/tree/main/day13/log-processing-system

Step 1: Certificate Authority Setup

We start by generating a root CA and intermediate CA using OpenSSL. The root CA private key lives in a secure vault—never on application servers. Services only interact with the intermediate CA, which has a 1-year validity. This separation lets you rotate the intermediate CA without touching every service certificate.

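# Root CA (keep offline)

openssl genrsa -out root-ca-key.pem 4096

openssl req -x509 -new -key root-ca-key.pem -days 3650 -out root-ca.pem

# Intermediate CA (operational)

openssl genrsa -out intermediate-ca-key.pem 4096

openssl req -new -key intermediate-ca-key.pem -out intermediate-ca.csr

# Sign with CA:TRUE so the intermediate is allowed to issue service certificates
# (the <(...) process substitution requires bash)
openssl x509 -req -in intermediate-ca.csr -CA root-ca.pem \
  -CAkey root-ca-key.pem -CAcreateserial -days 365 \
  -extfile <(printf "basicConstraints=critical,CA:TRUE,pathlen:0\nkeyUsage=critical,keyCertSign,cRLSign\n") \
  -out intermediate-ca.pem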

Each service generates a certificate signing request (CSR) with a subject alternative name (SAN) matching its DNS name. The intermediate CA signs the CSR, producing a 30-day certificate. Short TTLs force automation—manual processes don’t scale.

Step 2: Spring Boot TLS Configuration

Spring Boot 3.1 introduced spring.ssl.bundle for centralized SSL management. We define bundles for each service type:

spring:
  ssl:
    bundle:
      jks:
        server:
          keystore:
            location: classpath:keystore.jks
            password: ${KEYSTORE_PASSWORD}
            type: JKS
          truststore:
            location: classpath:truststore.jks
            password: ${TRUSTSTORE_PASSWORD}

The server bundle configures Tomcat’s HTTPS connector. Client bundles configure RestClient and Kafka producers. This indirection lets you swap keystores without code changes—critical for certificate rotation.

Architectural decision: We externalize keystores to /etc/ssl/certs rather than packaging in the JAR. This allows certificate updates via ConfigMap in Kubernetes or volume mounts in Docker Compose without redeployment.

Step 3: Kafka mTLS Integration

Kafka’s SSL configuration requires careful sequencing. Brokers must start with ssl.client.auth=required to enforce mTLS, but clients need valid certificates before the broker accepts connections. We use a bootstrap process:

Start Kafka with one-way TLS (ssl.client.auth=none)

Deploy services with certificates

Flip Kafka to mTLS mode (ssl.client.auth=required)

Services reconnect automatically via retry logic

This avoids the cold-start problem where services can’t get certificates because Kafka isn’t ready, but Kafka isn’t ready because services aren’t connected.
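Kafka clients already retry broker connections on their own (tuned through reconnect.backoff.ms and reconnect.backoff.max.ms), so the sketch below is only an illustration of the general shape of retry logic for a service’s own startup steps. `ReconnectBackoff` and its methods are hypothetical names, and the doubling schedule is an assumption.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

/** Hypothetical sketch: retry an I/O action with capped exponential backoff. */
final class ReconnectBackoff {

    /** Delay before retry number `attempt` (0-based): base, 2x, 4x, ... capped at max. */
    static long backoffMillis(int attempt, long baseMillis, long maxMillis) {
        long delay = baseMillis;
        for (int i = 0; i < attempt && delay < maxMillis; i++) {
            delay *= 2;
        }
        return Math.min(delay, maxMillis);
    }

    /** Runs the action until it succeeds or maxAttempts I/O failures have occurred. */
    static <T> T retry(Callable<T> action, int maxAttempts, long baseMillis, long maxMillis)
            throws Exception {
        IOException last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return action.call();
            } catch (IOException e) { // covers SSLException and refused connections
                last = e;
                if (attempt + 1 < maxAttempts) {
                    Thread.sleep(backoffMillis(attempt, baseMillis, maxMillis));
                }
            }
        }
        if (last == null) {
            throw new IllegalArgumentException("maxAttempts must be positive");
        }
        throw last;
    }
}
```

Only I/O failures are retried here; a certificate that the broker rejects outright will keep failing no matter how long the client waits, so that case should surface as an alert rather than loop forever.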

Producer configuration:

properties.put("security.protocol", "SSL");

properties.put("ssl.truststore.location", trustStorePath);

properties.put("ssl.truststore.password", trustStorePassword);

properties.put("ssl.keystore.location", keyStorePath);

properties.put("ssl.keystore.password", keyStorePassword);

properties.put("ssl.key.password", keyPassword);

properties.put("ssl.endpoint.identification.algorithm", "https");

The last property enables hostname verification—without it, attackers can present valid certificates for different services. This catches misconfigurations where service A uses service B’s certificate.
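The same check exists in plain JSSE, which helps show what the Kafka property is doing under the hood. In this sketch (hypothetical class name, example host chosen for illustration) the engine is told which host it expects, and the endpoint identification algorithm makes the handshake compare that host against the certificate’s subject alternative names. Kafka has enabled this check by default since version 2.0, so setting it explicitly mainly guards against someone blanking it out.

```java
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLEngine;
import javax.net.ssl.SSLParameters;

/** Hypothetical sketch: a client-side engine with hostname verification enabled. */
final class HostnameVerificationSketch {

    static SSLEngine clientEngine(SSLContext context, String host, int port) {
        // The peer host given here is what the certificate's SAN entries are checked against.
        SSLEngine engine = context.createSSLEngine(host, port);
        engine.setUseClientMode(true);

        SSLParameters params = engine.getSSLParameters();
        // "HTTPS" selects HTTPS-style host name matching during the handshake.
        params.setEndpointIdentificationAlgorithm("HTTPS");
        engine.setSSLParameters(params);
        return engine;
    }

    public static void main(String[] args) throws Exception {
        // "kafka.example.internal" is a placeholder host for the example.
        SSLEngine engine = clientEngine(SSLContext.getDefault(), "kafka.example.internal", 9093);
        System.out.println("identification algorithm: "
                + engine.getSSLParameters().getEndpointIdentificationAlgorithm());
    }
}
```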

Step 4: Health Checks for Certificate Validity

We implement a CertificateHealthIndicator that checks certificate expiration daily. Services report DOWN if certificates expire within 7 days, triggering alerts before production impact.

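import java.security.cert.X509Certificate;
import java.time.Instant;
import java.time.temporal.ChronoUnit;
import org.springframework.boot.actuate.health.Health;
import org.springframework.boot.actuate.health.HealthIndicator;
import org.springframework.stereotype.Component;

@Component
public class CertificateHealthIndicator implements HealthIndicator {

    @Override
    public Health health() {
        // loadCertificate() is assumed to read this service's certificate from its keystore
        X509Certificate cert = loadCertificate();
        long daysUntilExpiry = ChronoUnit.DAYS.between(
                Instant.now(), cert.getNotAfter().toInstant());
        if (daysUntilExpiry < 7) {
            // Report DOWN inside the 7-day warning window so alerts fire early
            return Health.down()
                    .withDetail("certificate-expiry", daysUntilExpiry)
                    .build();
        }
        return Health.up().build();
    }
}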

This health check can back a Kubernetes liveness probe. When a certificate enters the warning window, the probe fails and Kubernetes restarts the container, which forces a certificate refresh from the CA on startup.
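The threshold logic is easy to get subtly wrong at the boundary, so here it is isolated from Spring as a small sketch. `CertificateExpiryCheck` is a hypothetical name; the 7-day window comes from the indicator above. Note that ChronoUnit.DAYS.between counts only whole days, so a certificate with 6 days and 23 hours left reports 6 and is already inside the warning window.

```java
import java.time.Duration;
import java.time.Instant;
import java.time.temporal.ChronoUnit;

/** Hypothetical sketch: the expiry-window arithmetic, separated from Spring. */
final class CertificateExpiryCheck {
    static final long WARNING_DAYS = 7;

    /** Whole days between now and expiry; partial days are dropped, negative once expired by a day or more. */
    static long daysUntilExpiry(Instant now, Instant notAfter) {
        return ChronoUnit.DAYS.between(now, notAfter);
    }

    /** Healthy only while at least WARNING_DAYS whole days remain. */
    static boolean isHealthy(Instant now, Instant notAfter) {
        return daysUntilExpiry(now, notAfter) >= WARNING_DAYS;
    }

    public static void main(String[] args) {
        Instant now = Instant.parse("2025-01-01T00:00:00Z");
        Instant freshCert = now.plus(Duration.ofDays(30));
        Instant agingCert = now.plus(Duration.ofDays(7)).minusSeconds(1);
        System.out.println("fresh cert healthy: " + isHealthy(now, freshCert));
        System.out.println("aging cert healthy: " + isHealthy(now, agingCert));
    }
}
```

Passing `now` in as a parameter, rather than calling Instant.now() inside the method, keeps the check deterministic and easy to unit test.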

Production Considerations: Performance and Failure Modes

Performance Impact Quantification

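Expect 20-25% throughput reduction with TLS enabled. On modern hardware (Intel Xeon with AES-NI), bulk encryption is cheap; the expensive part is the handshake’s asymmetric cryptography, where a single core typically manages on the order of thousands of full handshakes per second, depending on key type and size. Your bottleneck shifts from network I/O to CPU. Profile with perf to confirm TLS overhead. If CPU isn’t maxed, the problem is elsewhere.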

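Mitigation: TLS session resumption lets returning clients skip the full handshake, so the expensive key exchange happens once per session rather than on every new connection. The JDK enables resumption by default; the Spring Boot settings below pin the server to TLS 1.3 and its AEAD cipher suites:

server:
  ssl:
    enabled: true
    protocol: TLS
    enabled-protocols: TLSv1.3
    ciphers: TLS_AES_256_GCM_SHA384,TLS_AES_128_GCM_SHA256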

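TLS 1.3 eliminates one round trip from the handshake (1-RTT instead of 2-RTT). The saving equals one network round trip per new connection: 50-100ms across regions, well under a millisecond inside a single data center. Use TLS 1.3 exclusively in new systems.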
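For code that builds its own TLS endpoints outside Spring Boot, the equivalent restriction can be sketched with JSSE directly. `Tls13OnlySketch` is a hypothetical name; the protocol and cipher suite names are the same ones used in the configuration above.

```java
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLEngine;
import javax.net.ssl.SSLParameters;

/** Hypothetical sketch: a server engine that only negotiates TLS 1.3. */
final class Tls13OnlySketch {

    static SSLEngine tls13ServerEngine(SSLContext context) {
        SSLEngine engine = context.createSSLEngine();
        engine.setUseClientMode(false);

        SSLParameters params = engine.getSSLParameters();
        // Older protocol versions are refused outright rather than negotiated down.
        params.setProtocols(new String[] {"TLSv1.3"});
        params.setCipherSuites(new String[] {
            "TLS_AES_256_GCM_SHA384",
            "TLS_AES_128_GCM_SHA256"
        });
        engine.setSSLParameters(params);
        return engine;
    }

    public static void main(String[] args) throws Exception {
        SSLEngine engine = tls13ServerEngine(SSLContext.getDefault());
        System.out.println(String.join(",", engine.getEnabledProtocols()));
    }
}
```

Pinning to TLS 1.3 will break any client that cannot speak it, so check the oldest JDK and Kafka client versions in your fleet before applying this everywhere.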

Failure Scenario: Certificate Rotation Deadlock

Services depend on each other for health checks. If service A’s certificate expires, it can’t reach service B’s health endpoint to report its own health. Kubernetes kills the pod, preventing certificate renewal. Break the cycle by giving health check endpoints a separate, long-lived certificate (1-year TTL) or allowing unauthenticated health checks from localhost.

Monitoring Critical Metrics

Track four metrics in Grafana:

TLS handshake duration (p99) – should stay under 100ms

Certificate expiration days – alert at 7-day threshold

TLS handshake failures – spikes indicate rotation issues

CPU utilization on TLS-heavy services – scale when over 70%

Configure Prometheus to scrape these from Spring Boot Actuator’s /actuator/prometheus endpoint.

Scale Connection: TLS at FAANG

Google’s infrastructure uses Application Layer Transport Security (ALTS), a mutual authentication and encryption protocol similar in spirit to mTLS and optimized for data center networks. Services get certificates from a central CA on boot and rotate them frequently. This enables zero-trust networking: no service trusts the network, only cryptographic identity.

Facebook’s services perform 100M+ TLS handshakes per second. They optimize by:

Hardware offload with custom ASICs

Aggressive session resumption (60-minute sessions)

Connection pooling with 10K+ connections per pool

TLS 1.3 exclusive (1-RTT handshakes)

Your log processing system won’t hit Facebook scale, but these patterns apply at 10K req/sec.

Hands-On: Building Your TLS-Secured System

Prerequisites

Before starting, make sure you have:

Java 17 or higher installed

Maven 3.8 or higher

Docker and Docker Compose

OpenSSL (usually pre-installed on Mac/Linux)

At least 8GB of available RAM

A text editor (VS Code, IntelliJ, or similar)

Part 1: Project Generation (10 minutes)

Clone the repository and move into the Day 13 project:

git clone https://github.com/sysdr/sdc-java

cd sdc-java/day13/log-processing-system

The project contains:

Three Spring Boot services (API Gateway, Log Producer, Log Consumer)

Docker Compose configuration for infrastructure

Certificate generation scripts

Monitoring configuration for Prometheus and Grafana

Load testing tools

Integration tests

Part 2: Understanding the Certificate Setup (15 minutes)

Navigate to your project and examine the certificate structure:

cd certs

Open generate-certs.sh in your editor. Notice how we:

Create a root CA (the ultimate trust anchor)

Create an intermediate CA (signed by root)

Generate certificates for each service (signed by intermediate)

Create Java keystores (JKS format for Spring Boot)

This hierarchy means if one service certificate is compromised, we only revoke that one—not rebuild everything.

Part 3: Starting the Infrastructure (20 minutes)

Now let’s bring up the supporting infrastructure:

# Return to project root

cd ..

# Run the setup script

chmod +x setup.sh

./setup.sh

This script will:

Generate all TLS certificates

Start Kafka, PostgreSQL, Redis

Create Kafka topics

Start Prometheus and Grafana

Build your Spring Boot services

What’s happening behind the scenes:

Kafka is configured to require SSL on port 9093

PostgreSQL creates a logdb database for persistent storage

Redis starts on the default port 6379 for caching

Prometheus begins scraping metrics endpoints

Grafana sets up visualization dashboards

Wait for the message “Setup complete!” before proceeding.

Part 4: Verifying Infrastructure Health (10 minutes)

Check that all containers are running:

docker-compose ps

You should see 6 containers running:

zookeeper

kafka

postgres

redis

prometheus

grafana

Test Kafka topic creation:

docker-compose exec kafka kafka-topics --list --bootstrap-server localhost:9092

You should see log-events in the output.

Part 5: Starting Your Services (20 minutes)

Open three terminal windows. In each one, navigate to your project directory.

Terminal 1: Start the Log Producer

cd log-producer

mvn spring-boot:run

Watch for the line: Started LogProducerApplication in X seconds

Terminal 2: Start the Log Consumer

cd log-consumer

mvn spring-boot:run

Look for: Started LogConsumerApplication in X seconds

Terminal 3: Start the API Gateway

cd api-gateway

mvn spring-boot:run

Wait for: Started ApiGatewayApplication in X seconds

Understanding what just happened:

Each service loaded its TLS certificates from the keystore, established secure connections to Kafka, and registered health check endpoints. The mutual TLS handshake happened automatically when services started talking to each other.

Part 6: Testing TLS Connections (15 minutes)

Open a new terminal and test each service:

Test 1: API Gateway health check

curl -k https://localhost:8080/api/v1/health

Expected response: {"status":"healthy","service":"api-gateway"}

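The -k flag tells curl to skip certificate verification, which is why it accepts certificates issued by our locally generated CA. In production, clients should verify against a real CA instead (for example, by passing the CA bundle with --cacert).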

Test 2: Send a log event through the gateway

curl -k -X POST https://localhost:8080/api/v1/logs \
  -H "Content-Type: application/json" \
  -d '{
    "level": "INFO",
    "message": "My first encrypted log event!",
    "source": "test-client",
    "metadata": {"timestamp": "2025-10-17T10:30:00Z"}
  }'

Expected response: {"status":"success","data":{...}}

Test 3: Verify the log was processed

Check your Log Consumer terminal. You should see a debug line indicating it processed your log event.

Check PostgreSQL:

docker-compose exec postgres psql -U postgres -d logdb \
  -c "SELECT id, level, message, source FROM log_events ORDER BY timestamp DESC LIMIT 5;"

You should see your log event in the database.

Part 7: Load Testing (30 minutes)

Now let’s see how your system performs under load:

# Return to project root

cd ..

# Run the load test

./load-test.sh

This script sends 1000 requests over 60 seconds with 10 concurrent connections. Watch your service terminals—you’ll see logs flying by as events are processed.

Understanding the results:

The load test output shows:

Requests per second (throughput)

Average response time

Percentiles (p50, p95, p99)

Failed requests (should be 0)

Compare these numbers to what you achieved on Day 12 (compression without TLS). You should see approximately:

20-25% lower throughput

15-20ms higher latency

But still fast enough for production use

Why the performance difference?

TLS handshakes require CPU-intensive cryptographic operations. Each new connection negotiates encryption keys, validates certificates, and establishes a secure channel. However, once established, the connection is reused (connection pooling), so the overhead is amortized across many requests.

Part 8: Monitoring with Grafana (20 minutes)

Open your browser and navigate to:

http://localhost:3000

Login credentials:

Username: admin

Password: admin

Navigate to Dashboards and open “Log Processing System - TLS Metrics”

Key metrics to observe:

Request Rate: Shows logs per second being processed

TLS Handshake Duration: How long certificate validation takes

Certificate Expiration: Days until certificates expire

Circuit Breaker State: Whether services are healthy or degraded

Run another load test while watching Grafana. You’ll see:

Request rate spike during the test

TLS handshake duration stay consistent

Circuit breakers remain closed (healthy state)

Part 9: Understanding Certificate Health Monitoring (15 minutes)

Your services include automatic certificate health checks. Let’s see them in action:

curl -k https://localhost:8081/actuator/health

Response should show "status":"UP" and include certificate details.

To simulate a certificate expiration warning, you could modify the health check threshold, but for now, just understand that:

Services check certificate expiration daily

Alerts trigger 7 days before expiration

Kubernetes would automatically restart pods with expired certificates

This prevents unexpected downtime from certificate issues

Part 10: Exploring Certificate Details (10 minutes)

Let’s examine the certificates we generated:

cd certs

# View certificate details

openssl x509 -in log-producer-cert.pem -text -noout

Notice:

Subject: Contains the service name (CN=log-producer)

Issuer: Signed by intermediate-ca

Validity: 30-day expiration (forcing rotation)

Subject Alternative Name: DNS names for the service

Now check the certificate chain:

openssl verify -CAfile ca-chain.pem log-producer-cert.pem

You should see: log-producer-cert.pem: OK

This confirms the trust chain: log-producer → intermediate CA → root CA

Part 11: Testing Service-to-Service Communication (15 minutes)

Your services are already communicating securely. Let’s trace a request:

Client → API Gateway: TLS handshake, client presents certificate

Gateway → Log Producer: mTLS handshake, both present certificates

Producer → Kafka: mTLS with broker authentication

Kafka → Consumer: TLS encrypted stream

Consumer → PostgreSQL: Standard JDBC connection (internal network)

Watch the network traffic:

# In a new terminal, monitor Kafka traffic

docker-compose logs -f kafka | grep SSL

You’ll see SSL handshake logs as services connect to Kafka.

Performance Comparison: Before and After TLS

Let’s document your results:

Day 12 (Compression Only)

| Metric | Value |
| --- | --- |
| Throughput | 1000 req/sec |
| p99 Latency | 25ms |
| CPU Usage | Baseline |
| Network Bandwidth | 5 MB/s |

Day 13 (TLS + Compression)

| Metric | Value |
| --- | --- |
| Throughput | ~750 req/sec |
| p99 Latency | ~40ms |
| CPU Usage | +15% |
| Network Bandwidth | 5 MB/s (compression still effective) |

Key Takeaway: The 25% throughput reduction is expected and acceptable. You’re now protected against network sniffing, man-in-the-middle attacks, and service impersonation. At scale, this security is essential.
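To compare your own load-test numbers against these reference figures, a small helper for the percentage arithmetic is enough. The sketch below plugs in the Day 12 and Day 13 values quoted above; `TlsOverheadMath` is a hypothetical name, and you would substitute your measured results.

```java
/** Hypothetical sketch: percentage change between a baseline and a new measurement. */
final class TlsOverheadMath {

    /** Negative result = decrease, positive = increase, relative to `before`. */
    static double percentChange(double before, double after) {
        return (after - before) / before * 100.0;
    }

    public static void main(String[] args) {
        double throughputChange = percentChange(1000, 750); // req/sec, Day 12 vs Day 13
        double latencyChange = percentChange(25, 40);       // p99 ms, Day 12 vs Day 13
        System.out.println(String.format("throughput: %.1f%%", throughputChange));
        System.out.println(String.format("p99 latency: %.1f%%", latencyChange));
    }
}
```

With the reference figures, throughput falls by 25% while p99 latency rises by 60% (15ms in absolute terms), which is a reminder to track tail latency and throughput separately rather than summarizing TLS cost as a single number.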

Common Issues and Troubleshooting

Issue 1: “Certificate verification failed”

Cause: Truststore doesn’t contain the CA certificate

Fix:

cd certs

keytool -list -keystore truststore.jks -storepass changeit

Verify you see both root-ca and intermediate-ca entries.

Issue 2: Services can’t connect to Kafka

Cause: Kafka SSL configuration mismatch

Fix:

docker-compose logs kafka | grep -i "error"

Look for SSL-related errors. Ensure Kafka keystore and truststore are mounted correctly.

Issue 3: “Connection refused” errors

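Cause: The service isn’t listening yet, or the client is using the wrong scheme or port

Fix: Confirm the service has finished starting, then verify you’re using https:// in URLs, not http://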

Issue 4: Load test shows high failure rate

Cause: Certificate issues or service overload

Fix:

Check service health endpoints

Verify certificate expiration

Reduce concurrent connections in load test

Challenge Exercises

Now that your system is running, try these extensions:

Exercise 1: Certificate Rotation Simulation

Generate new certificates with a different validity period

Update one service’s keystore

Restart that service

Verify it can still communicate with other services (thanks to the shared truststore)

Exercise 2: Custom Metrics

Add a custom metric to track TLS handshake failures:

Add a Counter in your producer service

Increment it when SSL exceptions occur

Expose via Actuator

View in Grafana
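As a starting point for this exercise, here is a dependency-free sketch of the counting logic. It uses a JDK LongAdder as a stand-in for the metrics library’s counter, and `TlsFailureCounter` is a hypothetical name; wiring it into Actuator and Grafana is the part left for you.

```java
import javax.net.ssl.SSLException;
import java.util.concurrent.atomic.LongAdder;

/** Hypothetical sketch: counts errors caused by TLS failures. */
final class TlsFailureCounter {
    private static final int MAX_CAUSE_DEPTH = 16; // guards against cyclic cause chains
    private final LongAdder failures = new LongAdder();

    /**
     * Increments the counter if the error, or any cause in its chain, is an
     * SSLException (SSLHandshakeException is a subclass). Returns whether it counted.
     */
    boolean record(Throwable error) {
        Throwable t = error;
        for (int depth = 0; t != null && depth < MAX_CAUSE_DEPTH; depth++) {
            if (t instanceof SSLException) {
                failures.increment();
                return true;
            }
            t = t.getCause();
        }
        return false;
    }

    long count() {
        return failures.sum();
    }
}
```

Walking the cause chain matters because client libraries often wrap the underlying SSL exception in their own exception types before it reaches your code.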

Exercise 3: Multi-Region Setup

Create a second set of certificates for a “west” region

Start duplicate services on different ports

Configure them to talk to the same Kafka cluster

Observe how mTLS prevents cross-region impersonation

Next Steps: Day 14

Tomorrow, we’ll build a sophisticated load generator to:

Measure system saturation points under various load patterns

Identify performance bottlenecks in the TLS handshake pipeline

Calculate capacity planning metrics for production deployment

Validate horizontal scaling strategies with multiple consumer instances

Pre-work for Day 14

Keep your system running overnight (or stop and restart easily with Docker Compose)

Note the maximum throughput you achieved today

Identify any performance degradation patterns in Grafana

Think about how you’d scale this system to handle 10x more traffic

Cleanup (Optional)

When you’re done experimenting:

# Stop all services (Ctrl+C in each terminal)

# Stop and remove containers

docker-compose down

# To completely remove data volumes:

docker-compose down -v

Summary: What You Learned

Today you built a production-grade distributed system with:

Mutual TLS authentication protecting every connection

Automated certificate management with expiration monitoring

Performance benchmarking showing the cost of security

Monitoring and alerting for operational awareness

Industry patterns used by Netflix, Uber, and Google

The key insight: TLS isn’t just about encryption—it’s about trust. In a distributed system, you can’t trust the network. You must trust cryptographic identity. The 20-25% performance cost is acceptable because the alternative (network breaches) is catastrophic.

You now understand how to secure distributed systems at scale. Tomorrow, we’ll push this system to its limits and learn how to plan for production capacity.

Course Context: Day 13 of 254 | Module 1: Foundations of Log Processing | Week 1: Setting Up the Infrastructure

Keep building. Keep learning. Keep securing your systems.