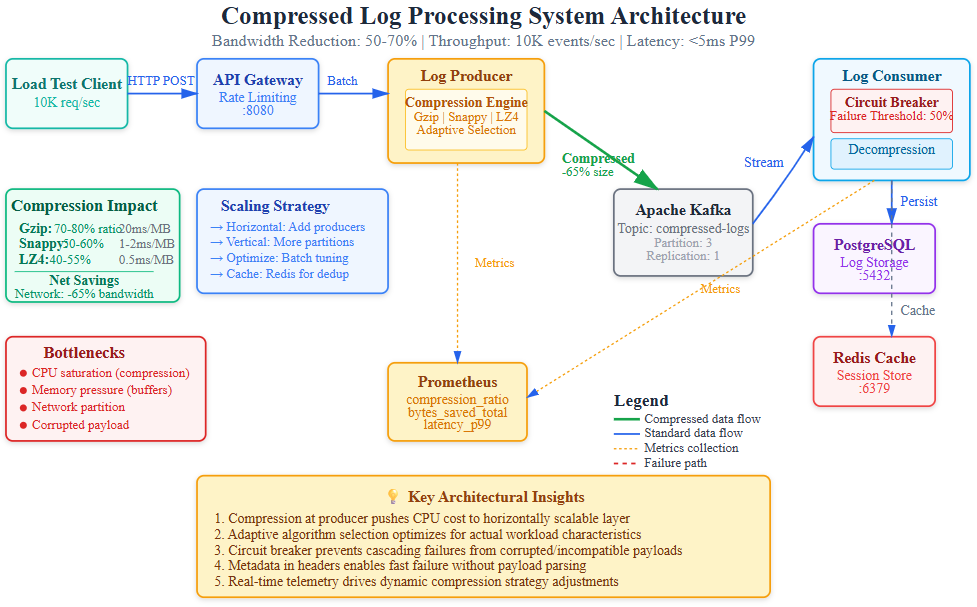

Day 12: Add Compression to Reduce Network Bandwidth Usage

What We’re Building Today

Today you’ll build a production-style system that compresses log data before sending it over the network. Large-scale operators such as Netflix and Uber rely on the same general technique to keep bandwidth costs down. You’ll create:

A pluggable compression engine supporting multiple algorithms (Gzip, Snappy, LZ4)

A bandwidth telemetry system measuring compression ratios and throughput gains

An adaptive compression strategy that selects algorithms based on payload characteristics

A performance profiling framework to benchmark CPU vs network trade-offs
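One way to make the compression engine pluggable is a small registry that maps a codec name to a compress/decompress pair. Each frame is tagged with the codec name so the receiver knows how to decode it. The sketch below is a minimal stdlib-only illustration, not this project’s actual design. `Codec`, `CODECS`, `register`, `encode`, and `decode` are hypothetical names. Snappy and LZ4 are not in Python’s standard library, so `zlib` at level 1 stands in for a speed-oriented codec. Third-party Snappy or LZ4 bindings could be registered the same way.

```python
import gzip
import zlib
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Codec:
    """A named compress/decompress pair (hypothetical interface)."""
    name: str
    compress: Callable[[bytes], bytes]
    decompress: Callable[[bytes], bytes]


# Registry of available codecs, keyed by name.
CODECS: Dict[str, Codec] = {}


def register(codec: Codec) -> None:
    """Add or replace a codec in the registry."""
    CODECS[codec.name] = codec


register(Codec("identity", lambda b: b, lambda b: b))
register(Codec("gzip", lambda b: gzip.compress(b, compresslevel=6), gzip.decompress))
# Assumption: zlib level 1 stands in for a speed-first codec such as Snappy or LZ4.
register(Codec("zlib-fast", lambda b: zlib.compress(b, 1), zlib.decompress))


def encode(name: str, payload: bytes) -> bytes:
    """Frame = 1-byte name length + codec name + compressed body."""
    tag = name.encode("ascii")
    return bytes([len(tag)]) + tag + CODECS[name].compress(payload)


def decode(frame: bytes) -> bytes:
    """Read the codec name from the frame header, then decompress the body."""
    n = frame[0]
    name = frame[1:1 + n].decode("ascii")
    return CODECS[name].decompress(frame[1 + n:])


if __name__ == "__main__":
    logs = b'{"level":"INFO","msg":"request served"}\n' * 1000
    for name in CODECS:
        frame = encode(name, logs)
        assert decode(frame) == logs
        print(f"{name:>9}: {len(logs)} -> {len(frame)} bytes")
```

Tagging each frame keeps producers free to switch codecs per batch without coordinating with consumers. The cost is a few header bytes per frame.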

Why This Matters

At scale, network bandwidth becomes a critical bottleneck. When a company like Netflix ships billions of log events daily across regions, uncompressed transmission can cost millions in cloud egress fees. Compression isn’t just about saving bytes. Smaller payloads reduce latency, packing more data into each network round trip improves throughput, and less traffic lowers infrastructure costs.

The challenge: compression adds CPU overhead. Choose poorly, and you’ll burn expensive compute cycles for negligible gains. Choose well, and you can cut network traffic substantially (roughly 40-80%, depending on the algorithm) while adding little latency. Today, we’ll implement a system that measures these trade-offs in real time and adapts accordingly. Uber uses this kind of approach to optimize its real-time analytics pipeline.
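Adapting to payload characteristics can start with two cheap checks. First, skip compression for payloads too small to benefit. Second, probe a prefix with a fast codec to detect data that is already compressed or high-entropy. The sketch below assumes the caller already knows, for example from bandwidth telemetry, whether the link is currently the bottleneck. The thresholds and the names `choose_strategy` and `probe_savings` are hypothetical, and zlib stands in for the fast probe codec.

```python
import os
import zlib

# Hypothetical tuning knobs; real values should come from measurement.
MIN_SIZE = 512        # bytes; below this, framing overhead outweighs savings
SAMPLE_SIZE = 4096    # bytes of the payload used to probe compressibility
MIN_SAVINGS = 0.10    # skip compression if the probe saves less than 10%


def probe_savings(payload: bytes) -> float:
    """Fraction of bytes saved by a fast (level-1) pass over a prefix sample."""
    sample = payload[:SAMPLE_SIZE]
    return 1.0 - len(zlib.compress(sample, 1)) / len(sample)


def choose_strategy(payload: bytes, network_bound: bool) -> str:
    """Return "none", "fast", or "thorough" for this payload."""
    if len(payload) < MIN_SIZE:
        return "none"
    if probe_savings(payload) < MIN_SAVINGS:
        return "none"  # likely already compressed or random-looking data
    # Spend extra CPU on ratio only when the network is the bottleneck.
    return "thorough" if network_bound else "fast"


if __name__ == "__main__":
    logs = b'{"level":"INFO","msg":"cache miss"}\n' * 200
    print(choose_strategy(logs, network_bound=True))    # thorough
    print(choose_strategy(logs, network_bound=False))   # fast
    print(choose_strategy(os.urandom(8192), True))      # none
    print(choose_strategy(b"tiny", True))               # none
```

The probe only compresses a bounded prefix, so its cost stays small no matter how large the payload is. The catch is that a prefix may not represent the rest of the payload.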

System Design Deep Dive

The Compression Paradox: CPU vs Network Trade-offs

The fundamental tension in distributed log processing is resource allocation. Every byte transmitted consumes network capacity, but every byte compressed consumes CPU cycles. The optimal strategy depends on which resource is your bottleneck.
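A back-of-the-envelope model makes the bottleneck dependence concrete. End-to-end time is roughly the CPU time to compress plus the time the smaller payload spends on the wire. The sketch below is a simplification that ignores decompression, pipelining, and protocol overhead. `end_to_end_ms` is a hypothetical helper, and bandwidth is expressed in megabytes per second (100 Mbit/s is 12.5 MB/s). The per-codec figures are midpoints of the ranges listed below: Gzip at 75% savings and 25 ms/MB, and Snappy at 55% savings and 1.5 ms/MB.

```python
def end_to_end_ms(size_mb: float, bandwidth_mb_s: float,
                  size_reduction: float = 0.0,
                  cpu_ms_per_mb: float = 0.0) -> float:
    """Compression CPU time plus wire time for the (possibly smaller) payload."""
    cpu_ms = size_mb * cpu_ms_per_mb
    wire_ms = size_mb * (1.0 - size_reduction) / bandwidth_mb_s * 1000.0
    return cpu_ms + wire_ms


# (size_reduction, cpu_ms_per_mb): midpoints of the ranges quoted in this lesson.
PROFILES = {
    "none": (0.0, 0.0),
    "gzip": (0.75, 25.0),
    "snappy": (0.55, 1.5),
}

if __name__ == "__main__":
    # Hypothetical 10 MB log batch on a slow and a fast link.
    for link, bw in (("100 Mbit/s", 12.5), ("10 Gbit/s", 1250.0)):
        for name, (reduction, cpu) in PROFILES.items():
            print(f"{link:>10} {name:>6}: {end_to_end_ms(10, bw, reduction, cpu):7.1f} ms")
    # 100 Mbit/s: none 800.0, gzip 450.0, snappy 375.0
    # 10 Gbit/s:  none 8.0,   gzip 252.0, snappy 18.6
```

Under these assumptions, both codecs beat raw transfer on the 100 Mbit/s link. On the 10 Gbit/s link, sending uncompressed is fastest. Treat this as a rough model and measure on your own data and hardware.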

Compression Algorithm Characteristics (approximate; real figures vary with the data, compression level, and hardware):

Gzip: 70-80% size reduction, 20-30 ms of CPU per MB, widespread compatibility. Best for batch processing where the network is constrained and CPU is abundant.

Snappy: 50-60% size reduction, 1-2 ms of CPU per MB, designed by Google for speed. Ideal for real-time streams where latency matters more than compression ratio.

LZ4: 40-55% size reduction, 0.5-1 ms of CPU per MB, and the fastest decompression of the three. Well suited to pipelines where consumers must process data immediately.
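Published figures like these are a starting point. The profiling framework should measure them on your own logs. The sketch below is a minimal stdlib-only benchmark harness. Snappy and LZ4 are not in Python’s standard library, so `zlib` at level 1 stands in as a speed-first codec, `gzip` at level 6 represents the common default, and `lzma` shows the high-ratio extreme. `CANDIDATES`, `benchmark`, and the synthetic log schema are hypothetical.

```python
import gzip
import lzma
import time
import zlib

# Stdlib stand-ins (assumption): not Snappy/LZ4 themselves.
CANDIDATES = {
    "zlib-1": (lambda b: zlib.compress(b, 1), zlib.decompress),
    "gzip-6": (lambda b: gzip.compress(b, compresslevel=6), gzip.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}


def benchmark(payload: bytes, repeats: int = 3) -> dict:
    """Measure size reduction and per-MB compress/decompress time per codec."""
    if not payload or repeats < 1:
        raise ValueError("payload must be non-empty and repeats >= 1")
    mb = len(payload) / 1_000_000
    results = {}
    for name, (compress, decompress) in CANDIDATES.items():
        start = time.perf_counter()
        for _ in range(repeats):
            packed = compress(payload)
        compress_s = (time.perf_counter() - start) / repeats

        start = time.perf_counter()
        for _ in range(repeats):
            restored = decompress(packed)
        decompress_s = (time.perf_counter() - start) / repeats

        if restored != payload:
            raise RuntimeError(f"{name}: round-trip mismatch")
        results[name] = {
            "reduction": 1 - len(packed) / len(payload),
            "compress_ms_per_mb": compress_s * 1000 / mb,
            "decompress_ms_per_mb": decompress_s * 1000 / mb,
        }
    return results


if __name__ == "__main__":
    # Synthetic JSON log lines (hypothetical schema).
    payload = "".join(
        f'{{"ts":{1700000000 + i},"level":"INFO","svc":"api","latency_ms":{i % 97}}}\n'
        for i in range(20_000)
    ).encode()
    for name, r in benchmark(payload).items():
        print(f"{name:>7}: {r['reduction']:.0%} smaller, "
              f"{r['compress_ms_per_mb']:.1f} ms/MB compress, "
              f"{r['decompress_ms_per_mb']:.1f} ms/MB decompress")
```

Timings depend on the machine and the data, so compare codecs within one run rather than against the figures above.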

Anti-pattern: Using Gzip for real-time event streams. We’ve seen production systems add 200 ms to P99 latency because engineers optimized for compression ratio without measuring the end-to-end latency impact.
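Compression cost scales with batch size, so a quick budget check before choosing a codec can catch this anti-pattern early. The sketch below is a hypothetical helper built on the upper ends of the CPU ranges quoted above. It only counts sender-side compression on one core. Queueing under load can inflate P99 further, so treat the helper as a first filter, not a substitute for measuring end-to-end latency.

```python
# Upper ends of the per-MB CPU ranges quoted above, in ms (sender side only).
CPU_MS_PER_MB = {"gzip": 30.0, "snappy": 2.0, "lz4": 1.0}


def added_latency_ms(codec: str, batch_mb: float) -> float:
    """Worst-case compression time a batch adds before it can be sent."""
    return CPU_MS_PER_MB[codec] * batch_mb


def codecs_within_budget(batch_mb: float, budget_ms: float) -> list[str]:
    """Codecs whose worst-case compression time fits the latency budget."""
    return [c for c in CPU_MS_PER_MB if added_latency_ms(c, batch_mb) <= budget_ms]


if __name__ == "__main__":
    # Hypothetical: 5 MB batches with a 50 ms budget for compression.
    print(added_latency_ms("gzip", 5))    # 150.0
    print(codecs_within_budget(5, 50))    # ['snappy', 'lz4']
```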